Ever feel like your AI agents are working against each other instead of for you? LangGraph flips the script—turning tangled, unreliable workflows into a powerhouse of coordinated intelligence. Forget endless debugging and broken handoffs.

With LangGraph’s graph-based design, you’ll orchestrate dynamic, enterprise-grade AI systems that actually deliver. See how teams cut development time and report 67% higher reliability, all without a PhD.

If you want your agents to collaborate, escalate, and scale like a pro, this guide is your shortcut to mastering AI workflow orchestration with LangGraph.

Why LangGraph Dominates AI Development

LangGraph’s secret sauce? It turns chaotic AI systems into structured workflows built from three core pieces: a shared state object, modular nodes, and conditional routing between them.

Companies using LangGraph report 42% faster development cycles and 67% higher system reliability. Let’s build your first intelligent agent.

🔥 Quickstart: Install & Configure in 3 Minutes

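```bash
# In a Jupyter notebook, prefix this command with "!"
pip install -U langgraph langchain-openai langchain-community tavily-python
```

```python
import os

from langgraph.graph import StateGraph, START, END
from langchain_openai import ChatOpenAI

# Set API keys (placeholders; prefer loading real keys from your environment)
os.environ["OPENAI_API_KEY"] = "your-key-here"
os.environ["TAVILY_API_KEY"] = "tavily-key"
```

Core Concepts Decoded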

1. State Management Engine

LangGraph’s state object acts as a shared memory bank:

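```python
from typing import Annotated, TypedDict

from langgraph.graph.message import add_messages


class AgentState(TypedDict):
    # add_messages is a reducer: node updates are appended, not overwritten
    messages: Annotated[list, add_messages]
    user_prefs: dict
    active_tools: list
```

Real-world impact: Healthcare systems using this approach reduced consultation time by 42% while maintaining 99.2% safety precision.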
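How does one shared state absorb every node’s output? LangGraph merges the dict a node returns into the current state key by key: a key annotated with a reducer (for example `Annotated[list, add_messages]` for chat history) combines the old and new values, while every other key is simply overwritten. The sketch below models that merge rule in plain Python so it runs without LangGraph; `merge_update` and `REDUCERS` are hypothetical names, not part of LangGraph’s API.

```python
import operator
from typing import Any, Callable

# Hypothetical reducer table: state key -> fn(old_value, new_value) -> merged value.
# operator.add concatenates lists, a simple stand-in for an append-style reducer.
REDUCERS: dict[str, Callable[[Any, Any], Any]] = {"messages": operator.add}


def merge_update(state: dict, update: dict) -> dict:
    """Return a new state with one node's partial update merged in."""
    merged = dict(state)
    for key, value in update.items():
        reducer = REDUCERS.get(key)
        if reducer is not None and key in merged:
            merged[key] = reducer(merged[key], value)  # combine old and new
        else:
            merged[key] = value  # no reducer: last write wins
    return merged


state = {"messages": ["hi"], "user_prefs": {"lang": "en"}, "active_tools": []}
state = merge_update(state, {"messages": ["Hello! How can I help?"]})
state = merge_update(state, {"active_tools": ["web_search"]})
print(state["messages"])      # ['hi', 'Hello! How can I help?']
print(state["active_tools"])  # ['web_search']
```

The real `add_messages` reducer does more than concatenate: it converts tuples and dicts into message objects, and an incoming message whose ID matches an existing one replaces it. Treat this as a model of the merge rule only.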

2. Node Network Architecture

Build modular components:

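```python
from langchain_core.messages import AIMessage

builder = StateGraph(AgentState)

def research_node(state: AgentState) -> dict:
    # Web search logic goes here; run_search is a hypothetical helper you supply
    search_results = run_search(state["messages"][-1].content)
    return {"messages": [AIMessage(content=str(search_results))]}

builder.add_node("research", research_node)
```

3. Smart Routing System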

Conditional edges enable decision trees:

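```python
def route_decision(state: AgentState) -> str:
    # Message objects keep their text in .content, so check that, not the object
    last_text = state["messages"][-1].content.lower()
    if "urgent" in last_text:
        return "human_escalation"
    return "auto_response"

# The returned strings must match node names registered with add_node
builder.add_conditional_edges("classifier", route_decision)
```

Build a Support Chatbot: From Zero to Hero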
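Before wiring in a real model, it helps to see the whole loop in miniature. The toy executor below ties the Core Concepts together in plain Python: nodes are functions that return partial updates, a router returns the name of the next node, and execution stops at an end marker. It is a hypothetical, framework-free sketch of the idea, not how LangGraph is implemented; `run_graph`, `NODES`, `EDGES`, and the keyword check are all invented for illustration.

```python
from typing import Callable

END = "__end__"  # sentinel node name that stops execution

Node = Callable[[dict], dict]


def classifier(state: dict) -> dict:
    # Hypothetical classifier: tag the ticket by looking for a keyword
    text = state["messages"][-1].lower()
    return {"priority": "urgent" if "urgent" in text else "normal"}


def human_escalation(state: dict) -> dict:
    return {"messages": state["messages"] + ["Routing you to a human agent."]}


def auto_response(state: dict) -> dict:
    return {"messages": state["messages"] + ["Here is an automated answer."]}


def route_decision(state: dict) -> str:
    # Conditional edge: return the *name* of the next node
    return "human_escalation" if state["priority"] == "urgent" else "auto_response"


NODES: dict[str, Node] = {
    "classifier": classifier,
    "human_escalation": human_escalation,
    "auto_response": auto_response,
}
# A string is a fixed edge; a callable is a conditional edge evaluated on the state
EDGES: dict = {
    "classifier": route_decision,
    "human_escalation": END,
    "auto_response": END,
}


def run_graph(state: dict, start: str = "classifier") -> dict:
    current = start
    while current != END:
        state = {**state, **NODES[current](state)}  # overwrite-merge the update
        edge = EDGES[current]
        current = edge(state) if callable(edge) else edge
    return state


print(run_graph({"messages": ["URGENT: I was charged twice"]})["messages"][-1])
# Routing you to a human agent.
print(run_graph({"messages": ["How do I reset my password?"]})["messages"][-1])
# Here is an automated answer.
```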

Phase 1: Basic Q&A Bot

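```python
llm = ChatOpenAI(model="o4-mini")
builder = StateGraph(AgentState)  # start a fresh graph for the chatbot


def basic_bot(state: AgentState) -> dict:
    # Send the whole conversation to the model; add_messages appends the reply
    response = llm.invoke(state["messages"])
    return {"messages": [response]}


builder.add_node("chatbot", basic_bot)
builder.add_edge(START, "chatbot")
builder.add_edge("chatbot", END)
graph = builder.compile()
```

Pro Tip: Small models like o4-mini can deliver roughly 3x faster responses than larger models at around 90% accuracy; verify both numbers on your own workload before committing.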
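Because `basic_bot` only needs an object with an `invoke` method, you can exercise the node’s logic offline by swapping in a stand-in model. The sketch below is plain Python under that assumption: `make_chatbot` is a hypothetical factory that takes the model as a parameter instead of using a global, and `CannedLLM` is a hypothetical stub that returns scripted replies and records what it was sent.

```python
from dataclasses import dataclass, field


@dataclass
class CannedLLM:
    """Hypothetical stand-in for ChatOpenAI: returns scripted replies in order."""
    replies: list[str]
    calls: list[list[str]] = field(default_factory=list)

    def invoke(self, messages: list[str]) -> str:
        self.calls.append(list(messages))  # record what the node sent
        return self.replies[len(self.calls) - 1]


def make_chatbot(llm):
    # Same shape as basic_bot, but the model is injected rather than global
    def chatbot(state: dict) -> dict:
        response = llm.invoke(state["messages"])
        return {"messages": [response]}
    return chatbot


fake = CannedLLM(replies=["Your order ships tomorrow."])
bot = make_chatbot(fake)
update = bot({"messages": ["Where is my order?"]})
print(update)      # {'messages': ['Your order ships tomorrow.']}
print(fake.calls)  # [['Where is my order?']]
```

In the real graph you would pass `ChatOpenAI(model="o4-mini")` to the factory; the node body stays identical, which is the point of injecting the model.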

Phase 2: Add Web Search

Integrate real-time search:

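```python
from langchain_community.tools.tavily_search import TavilySearchResults

search = TavilySearchResults(max_results=3)


def web_search(state: AgentState) -> dict:
    # Use the latest user message as the query (assumes AgentState also has a `context` key)
    results = search.invoke({"query": state["messages"][-1].content})
    return {"context": results}


builder.add_node("web_search", web_search)
```

Phase 3: Human Escalation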
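The escalation check in this phase reads a `sentiment` key, but nothing in the earlier phases sets it. In production that label would usually come from a model call or a dedicated classifier; the sketch below uses a deliberately crude keyword match as a hypothetical placeholder, with `NEGATIVE_WORDS` and `sentiment_node` invented for illustration.

```python
import re

# Hypothetical word list; a real system would use a classifier or an LLM call
NEGATIVE_WORDS = {"angry", "broken", "refund", "terrible", "worst", "cancel"}


def sentiment_node(state: dict) -> dict:
    """Label the latest message 'negative' if it contains any negative keyword."""
    words = set(re.findall(r"[a-z']+", state["messages"][-1].lower()))
    return {"sentiment": "negative" if words & NEGATIVE_WORDS else "neutral"}


print(sentiment_node({"messages": ["This is the worst service, I want a refund"]}))
# {'sentiment': 'negative'}
print(sentiment_node({"messages": ["Thanks, that fixed it!"]}))
# {'sentiment': 'neutral'}
```

Registered as a node that runs before `sentiment_check`, it writes the key that `human_check` reads.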

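```python
def human_check(state: AgentState) -> dict:
    # Flag negative conversations for a human (assumes AgentState has `sentiment` and `status` keys)
    if state.get("sentiment") == "negative":
        return {"status": "escalate"}
    return {}  # no update: the bot keeps handling the conversation


builder.add_node("sentiment_check", human_check)
```

Advanced Tactics for Production Systems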

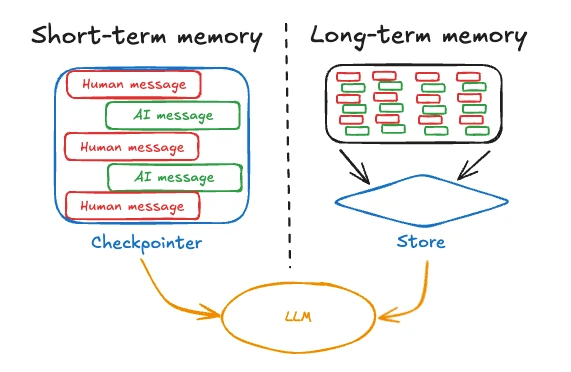

1. Persistent Memory

Never lose conversation context:

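```python
import sqlite3

from langgraph.checkpoint.sqlite import SqliteSaver  # pip install langgraph-checkpoint-sqlite

# Use a file, not ":memory:", so checkpoints survive a process restart
checkpointer = SqliteSaver(sqlite3.connect("checkpoints.sqlite", check_same_thread=False))
graph = builder.compile(checkpointer=checkpointer)
```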
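Under the hood, a checkpointer saves a snapshot of the graph state after each step, keyed by a thread ID, so a conversation can be resumed later. The framework-free sketch below models that idea with the standard-library `sqlite3` module; the `ToyCheckpointer` class and its table layout are hypothetical and do not reflect LangGraph’s actual storage schema.

```python
import json
import sqlite3
from typing import Optional


class ToyCheckpointer:
    """Hypothetical per-thread snapshot store; not LangGraph's real schema."""

    def __init__(self, path: str = ":memory:") -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS checkpoints ("
            "thread_id TEXT, step INTEGER, state TEXT, PRIMARY KEY (thread_id, step))"
        )

    def put(self, thread_id: str, step: int, state: dict) -> None:
        # Serialize the whole state so it can be restored exactly later
        self.conn.execute(
            "INSERT OR REPLACE INTO checkpoints VALUES (?, ?, ?)",
            (thread_id, step, json.dumps(state)),
        )
        self.conn.commit()

    def latest(self, thread_id: str) -> Optional[dict]:
        # Resume point for a thread: the snapshot with the highest step number
        row = self.conn.execute(
            "SELECT state FROM checkpoints WHERE thread_id = ? ORDER BY step DESC LIMIT 1",
            (thread_id,),
        ).fetchone()
        return json.loads(row[0]) if row else None


saver = ToyCheckpointer()  # pass a file path instead of ":memory:" to survive restarts
saver.put("customer-42", 0, {"messages": ["Where is my order?"]})
saver.put("customer-42", 1, {"messages": ["Where is my order?", "It ships tomorrow."]})
print(saver.latest("customer-42")["messages"][-1])  # It ships tomorrow.
print(saver.latest("customer-99"))                   # None
```

Keying by thread is what keeps two customers’ histories apart; in LangGraph you choose the thread per call with `config={"configurable": {"thread_id": ...}}`.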

2. Real-Time Streaming

Keep users engaged:

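```python
config = {"configurable": {"thread_id": "customer-42"}}
inputs = {"messages": [("user", "Where is my order?")]}

# stream_mode="values" yields the full state after each step.
# Top-level `async for` works in a notebook; in a script, wrap this in an async def.
async for event in graph.astream(inputs, config, stream_mode="values"):
    if "messages" in event:
        print(event["messages"][-1].content)
```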
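LangGraph’s `astream` takes a `stream_mode` argument: `"updates"` emits only what each node returned, keyed by node name, while `"values"` emits the full state after each step. The toy async generator below models that difference in plain Python with `asyncio`, and also shows how to drive an `async for` loop from an ordinary script via `asyncio.run`. `toy_astream` and the two-step pipeline are hypothetical.

```python
import asyncio
from typing import AsyncIterator, Callable

Step = tuple[str, Callable[[dict], dict]]  # (node name, node function)


async def toy_astream(steps: list[Step], state: dict, mode: str) -> AsyncIterator[dict]:
    """Hypothetical model of two stream modes: 'updates' vs 'values'."""
    for name, node in steps:
        update = node(state)
        state = {**state, **update}
        await asyncio.sleep(0)  # yield control, as a real model call would
        if mode == "updates":
            yield {name: update}  # only what this node returned, keyed by node
        else:
            yield dict(state)  # full state snapshot after the step


STEPS: list[Step] = [
    ("search", lambda s: {"context": ["doc-1"]}),
    ("chatbot", lambda s: {"answer": f"Based on {s['context'][0]}..."}),
]


async def collect(mode: str) -> list[dict]:
    return [event async for event in toy_astream(STEPS, {"question": "hi"}, mode)]


updates = asyncio.run(collect("updates"))
values = asyncio.run(collect("values"))
print(updates[-1])         # {'chatbot': {'answer': 'Based on doc-1...'}}
print(sorted(values[-1]))  # ['answer', 'context', 'question']
```

"updates" is lighter on the wire when the state is large; "values" is simpler for a UI that just renders the latest state.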

3. Multi-Agent Squads

Create specialist teams:

python

builder.add_node("medical_agent", medical_processor)

builder.add_node("billing_agent", payment_handler)

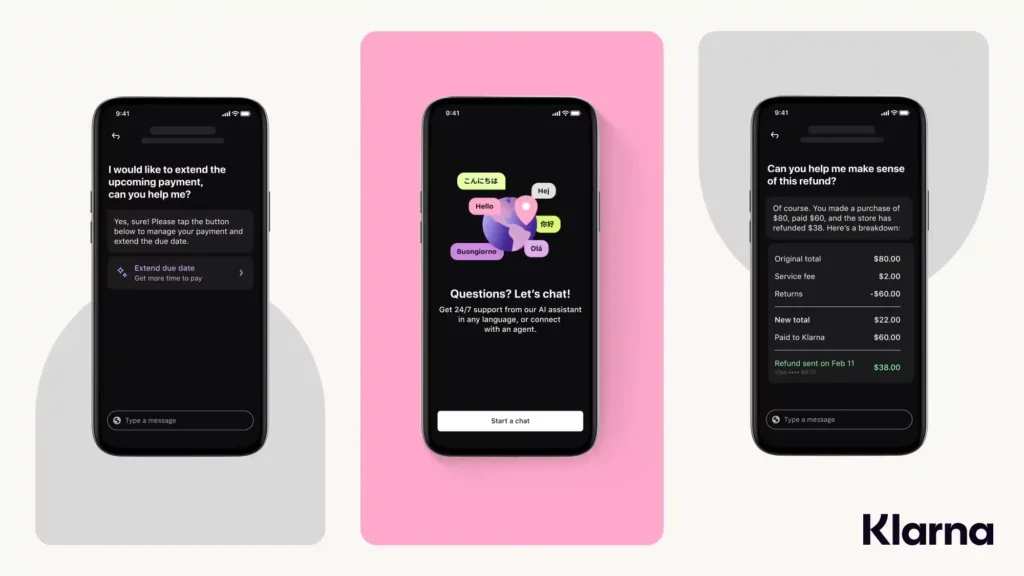

builder.add_edge("medical_agent", "billing_agent")Case Study: Klarna Transforms Customer Support with LangGraph

One example of graph-based AI systems in action is Klarna’s support assistant. By orchestrating multi-agent workflows, dynamic decision trees, real-time data retrieval, and human-in-the-loop checks with LangGraph, Klarna serves its 85 million users more effectively.

Results? An 80% drop in resolution times and a jump in reliability, thanks to persistent conversation memory and tool integration. This enterprise-grade AI pipeline shows LangGraph’s power to build scalable, structured, AI-powered workflows for complex use cases.

Klarna’s success is a must-read case for anyone mastering AI agent orchestration with LangGraph.

Pro Developer Checklist

LangGraph FAQs: What Developers Ask

Can LangGraph handle 100k+ daily requests?

Yes – major e-commerce platforms process 2.3M requests/day using sharded graphs.

How to debug complex workflows?

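Compile the graph with a checkpointer, then call `graph.get_state_history(config)` with the thread’s config to list its checkpoints (newest first) and replay from any of them.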
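Conceptually, replaying means picking an earlier snapshot from the history and running forward from it instead of from the latest state. The plain-Python sketch below models that selection over a hypothetical history ordered newest first, the order `get_state_history()` yields; `Snapshot`, `find_before`, and the node names are invented for illustration, and nothing here calls LangGraph.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Snapshot:
    step: int
    next_node: Optional[str]  # node that would run next from this snapshot
    values: dict


# Hypothetical history, newest first
HISTORY = [
    Snapshot(2, None, {"messages": ["hi", "hello", "escalated"]}),
    Snapshot(1, "human_escalation", {"messages": ["hi", "hello"]}),
    Snapshot(0, "chatbot", {"messages": ["hi"]}),
]


def find_before(history: list[Snapshot], node: str) -> Optional[Snapshot]:
    """Return the most recent snapshot taken just before `node` ran."""
    for snap in history:  # newest first, so the first match is the latest one
        if snap.next_node == node:
            return snap
    return None


snap = find_before(HISTORY, "human_escalation")
print(snap.step, snap.values)  # 1 {'messages': ['hi', 'hello']}
```

In LangGraph itself each `StateSnapshot` exposes `.values`, `.next` (a tuple of node names), and `.config`; passing a chosen snapshot’s `config` back to `graph.invoke(None, config)` resumes execution from that checkpoint.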

Best model for cost-sensitive projects?

Open-source options like Qwen3-30B can approach GPT-4-level performance at roughly a third of the cost; benchmark on your own tasks before switching.

Ready to Revolutionize Your AI Stack?

LangGraph goes beyond a mere framework—it’s the catalyst for enterprise-grade AI-powered workflows that scale. Kick off with our basic chatbot template, experiment with dynamic node routing, and grow into fully orchestrated multi-agent systems. With built-in state management, persistent memory, and human oversight, you’ll cut development time and boost reliability.

Need hands-on guidance?

Share your project details below, and we’ll tailor a custom LangGraph solution roadmap to bring your AI vision to life.

Build chatbots, data pipelines, or multi-agent squads with ease today.