Alibaba's Qwen3 release includes eight open-weight models, two Mixture-of-Experts (MoE) and six dense, that Alibaba reports as competitive with leading models on standard benchmarks.

According to Alibaba's published evaluations, the flagship Qwen3-235B-A22B is competitive with models such as DeepSeek-R1, OpenAI's o1, and Gemini 2.5 Pro, while the compact Qwen3-30B-A3B activates only 3B of its 30B parameters per token.

This technical guide will show you exactly how to harness these powerful models to build sophisticated AI agents and RAG systems that deliver exceptional performance.

Understanding Qwen3's Architecture and Capabilities

Qwen3 introduces several architectural innovations that make it particularly well-suited for agent-based applications:

Model Architecture Breakdown:

| Model | Total Parameters | Active Parameters | Architecture | Context Length |
|---|---|---|---|---|
| Qwen3-235B-A22B | 235 Billion | 22 Billion | MoE (94 layers) | 128K tokens |
| Qwen3-30B-A3B | 30 Billion | 3 Billion | MoE (48 layers) | 128K tokens |
| Qwen3-32B | 32 Billion | 32 Billion | Dense (64 layers) | 128K tokens |
| Qwen3-14B | 14 Billion | 14 Billion | Dense (40 layers) | 128K tokens |
| Qwen3-8B | 8 Billion | 8 Billion | Dense (36 layers) | 128K tokens |
| Qwen3-4B | 4 Billion | 4 Billion | Dense (36 layers) | 32K tokens |
| Qwen3-1.7B | 1.7 Billion | 1.7 Billion | Dense (28 layers) | 32K tokens |
| Qwen3-0.6B | 0.6 Billion | 0.6 Billion | Dense (28 layers) | 32K tokens |

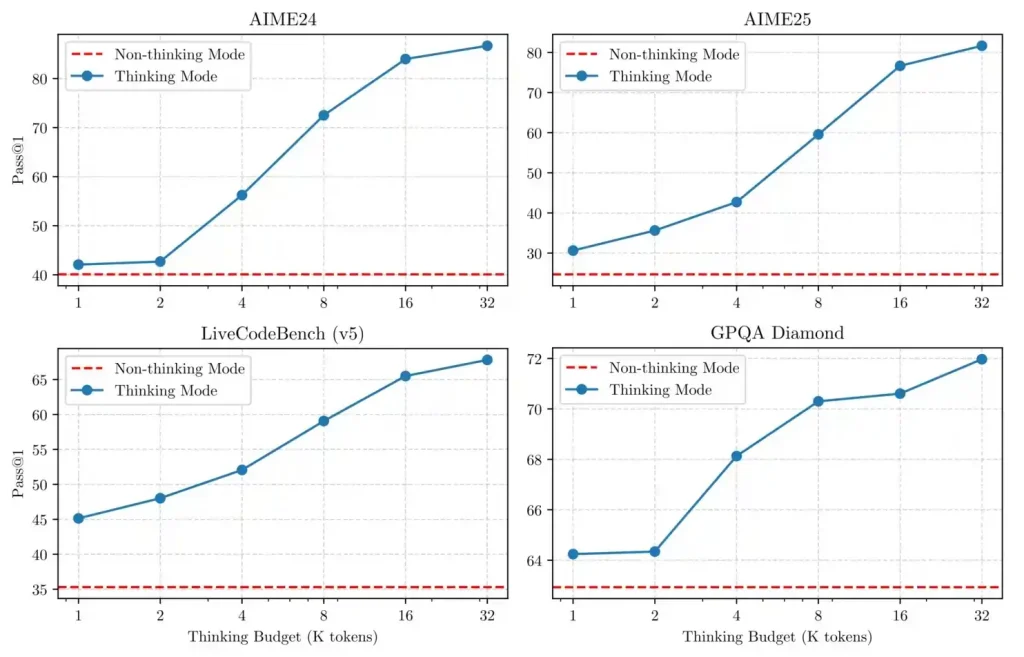
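To make the MoE rows concrete, here is a small arithmetic sketch that computes the share of parameters active per token, using only figures from the table above. The dictionary and function names are illustrative, not part of any Qwen tooling.

```python
# Total and active parameter counts (in billions), copied from the table above.
MODELS = {
    "Qwen3-235B-A22B": (235, 22),
    "Qwen3-30B-A3B": (30, 3),
    "Qwen3-32B": (32, 32),
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Return the fraction of parameters used for each token."""
    return active_b / total_b


for name, (total_b, active_b) in MODELS.items():
    share = active_fraction(total_b, active_b)
    print(f"{name}: {share:.1%} of parameters active per token")
```

The two MoE models come out at roughly 9.4% and 10%, while a dense model such as Qwen3-32B uses all of its parameters on every token.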

What makes Qwen3 particularly powerful for agent development is its hybrid thinking approach. The model can switch between a thinking mode, which reasons step by step before answering and suits complex problems, and a non-thinking mode, which answers quickly and suits simple queries.

This dual-mode capability allows Qwen3 to handle agent tasks that need both quick responses and deep reasoning. Alibaba also highlights strengthened support for MCP (Model Context Protocol), which should make tool use and function calling more reliable than in previous generations.
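One way to picture the two modes: Qwen3's model card describes `/think` and `/no_think` soft switches that can be added to a user message to toggle reasoning for that turn, and thinking output is wrapped in `<think>...</think>` tags. The sketch below uses plain string handling only. The helper names are hypothetical, and some hosted providers return reasoning in a separate response field, in which case the stripping step is unnecessary.

```python
import re

# Matches one <think>...</think> block, including newlines inside it.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def with_mode(prompt: str, think: bool) -> str:
    """Append Qwen3's soft switch so the model reasons (or not) on this turn."""
    return f"{prompt} {'/think' if think else '/no_think'}"


def split_reasoning(raw: str) -> tuple[str, str]:
    """Separate <think> content from the final answer text."""
    reasoning = "\n".join(part.strip() for part in THINK_BLOCK.findall(raw))
    answer = THINK_BLOCK.sub("", raw).strip()
    return reasoning, answer


print(with_mode("What is 17 * 23?", think=True))
reasoning, answer = split_reasoning("<think>17 * 23 = 391</think>The result is 391.")
print(answer)
```

Separating the reasoning from the answer matters for agents in particular, since an output parser that expects a fixed format can trip over raw thinking text.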

Setting Up Your Development Environment

Before building our agents, let's establish the proper environment:

python

# Install necessary packages

!pip install langchain langchain-community openai duckduckgo-search chromadb sentence-transformers

# Import key components

from langchain.chat_models import ChatOpenAI

from langchain.agents import Tool, initialize_agent

from langchain.tools import DuckDuckGoSearchRun

from langchain_community.document_loaders import TextLoader

from langchain.text_splitter import CharacterTextSplitter

from langchain_community.vectorstores import Chroma

from langchain.embeddings import HuggingFaceEmbeddings

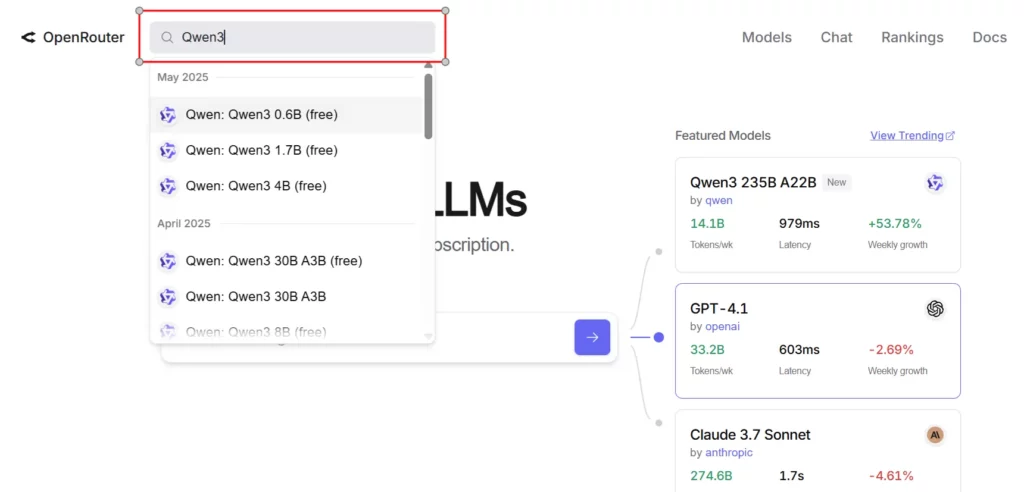

from langchain.chains import RetrievalQAFor this tutorial, we'll be accessing Qwen3 through the OpenRouter API, which provides convenient access to these models:

```python
# Initialize Qwen3 LLM
llm = ChatOpenAI(
    base_url="https://openrouter.ai/api/v1",
    api_key="YOUR_API_KEY",  # Replace with your actual API key
    model="qwen/qwen3-235b-a22b:free"  # You can also use other Qwen3 variants
)
```

Building an Advanced Travel Agent with Qwen3

Let's create a sophisticated travel planning agent that combines multiple tools and leverages Qwen3's reasoning capabilities:

1. Define Specialized Tools

First, we'll create several tools our agent can use:

```python
# Web search tool for updated information
search = DuckDuckGoSearchRun()

# Destination recommendation tool
def get_destinations(destination):
    return search.run(f"Top tourist attractions in {destination} with visiting hours and entrance fees")

destination_tool = Tool(
    name="Destination_Recommender",
    func=get_destinations,
    description="Finds detailed information about top places to visit in a specific city or location"
)

# Currency conversion tool
def convert_currency(query):
    try:
        # Parse the query to extract amount, source and target currency.
        # Thousands separators are removed so "1,500" parses as 1500.
        parts = query.replace(",", "").split()
        amount = float([s for s in parts if s.replace('.', '', 1).isdigit()][0])
        source_currency = None
        target_currency = None
        for part in parts:
            if part.upper() in ["USD", "EUR", "GBP", "INR", "JPY", "CAD", "AUD"]:
                # The first code found is the source, the next one the target
                if source_currency is None:
                    source_currency = part.upper()
                else:
                    target_currency = part.upper()

        # Simplified static conversion rates (in real app, use an API)
        rates = {
            "USD": {"EUR": 0.92, "GBP": 0.78, "INR": 83.2, "JPY": 154.5, "CAD": 1.36, "AUD": 1.51},
            "EUR": {"USD": 1.09, "GBP": 0.85, "INR": 90.4, "JPY": 167.9, "CAD": 1.48, "AUD": 1.64},
            # Add other currency pairs as needed
        }

        if source_currency and target_currency and source_currency in rates and target_currency in rates[source_currency]:
            converted = amount * rates[source_currency][target_currency]
            return f"{amount} {source_currency} = {converted:.2f} {target_currency}"
        else:
            return "Conversion not available. This demo only has rates from USD or EUR, for example 'USD to JPY' or 'EUR to GBP'."
    except (ValueError, IndexError):
        # Raised when no numeric amount can be found in the query
        return "Couldn't parse the conversion request. Format: 'convert 100 USD to EUR'"

currency_tool = Tool(
    name="Currency_Converter",
    func=convert_currency,
    description="Converts an amount from USD or EUR into another currency using static example rates. Input format: 'convert 100 USD to EUR'"
)

# Weather information tool
def get_weather(location):
    return search.run(f"Current weather and 3-day forecast for {location}")

weather_tool = Tool(
    name="Weather_Forecaster",
    func=get_weather,
    description="Provides current weather and forecast for a location"
)
```

2. Initialize the Advanced Agent
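The agent in this step follows the ReAct pattern: the model alternates between a Thought, an Action (a tool call), and an Observation (the tool's result) until it produces a final answer. The following is a simplified, framework-free sketch of that loop, not LangChain's implementation. `fake_llm` is a scripted stand-in for the model, and the exact text format is an assumption chosen for illustration.

```python
import re
from typing import Callable, Dict


def react_loop(llm: Callable[[str], str],
               tools: Dict[str, Callable[[str], str]],
               question: str,
               max_iterations: int = 6) -> str:
    """Run a minimal Thought/Action/Observation loop until a final answer appears."""
    scratchpad = f"Question: {question}\n"
    for _ in range(max_iterations):
        output = llm(scratchpad)
        scratchpad += output + "\n"

        # Stop as soon as the model declares a final answer.
        final = re.search(r"Final Answer:\s*(.*)", output, re.DOTALL)
        if final:
            return final.group(1).strip()

        # Otherwise expect a tool name and its input.
        action = re.search(r"Action:\s*(\w+)\s*\nAction Input:\s*(.*)", output)
        if not action:
            scratchpad += "Observation: could not parse an action, try again.\n"
            continue

        name, tool_input = action.group(1), action.group(2).strip()
        tool = tools.get(name)
        observation = tool(tool_input) if tool else f"Unknown tool: {name}"
        scratchpad += f"Observation: {observation}\n"
    return "Stopped: iteration limit reached."


def fake_llm(prompt: str) -> str:
    """Scripted stand-in for the model: call one tool, then answer."""
    if "Observation:" not in prompt:
        return ("Thought: I need the exchange rate.\n"
                "Action: Currency_Converter\n"
                "Action Input: 100 USD to EUR")
    return "Thought: I have what I need.\nFinal Answer: 100 USD is about 92 EUR."


demo_tools = {"Currency_Converter": lambda q: "100.0 USD = 92.00 EUR"}
print(react_loop(fake_llm, demo_tools, "How many euros is 100 dollars?"))
```

The `max_iterations` cap plays the same role as the parameter of the same name passed to the agent: it bounds how many tool calls a confused model can make before the loop gives up.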

Now we'll create our agent with these tools:

```python
from langchain.agents import AgentType

# Combine all tools
tools = [destination_tool, currency_tool, weather_tool]

# Initialize the agent with Qwen3
# (initialize_agent selects the agent via the `agent` argument, not `agent_type`)
travel_agent = initialize_agent(
    tools=tools,
    llm=llm,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True,
    handle_parsing_errors=True,
    max_iterations=6
)
```

3. Implement a Comprehensive Travel Planner

Let's create a more robust travel planning function:

```python
def comprehensive_trip_planner(city, duration_days, budget, currency="USD", interests=None):
    """
    Creates a comprehensive travel plan

    Args:
        city: Destination city
        duration_days: Number of days for the trip
        budget: Budget amount
        currency: Currency of the budget (default USD)
        interests: Specific interests (e.g., "museums, hiking, food")
    """
    # Craft a detailed query for the agent
    interests_str = f" with focus on {interests}" if interests else ""
    query = (
        f"Create a detailed {duration_days}-day itinerary for {city}{interests_str}. "
        f"The total budget is {budget} {currency}. Include: "
        f"1. Top attractions with estimated time needed and entrance fees "
        f"2. Weather forecast to help with planning "
        f"3. Currency conversion of the budget to local currency "
        f"4. Recommended local food and transportation options "
        f"5. Estimated daily expenses breakdown"
    )

    # Run the agent; the executor returns a dict whose "output" key holds the answer
    response = travel_agent.invoke({"input": query})
    return response["output"]
```

4. Test the Agent's Performance

```python
# Example usage
itinerary = comprehensive_trip_planner(
    city="Kyoto",
    duration_days=3,
    budget=1500,
    currency="USD",
    interests="traditional culture, temples, and local cuisine"
)

print(itinerary)
```

Building a High-Performance RAG System with Qwen3

Qwen3's hybrid reasoning capabilities make it ideal for sophisticated RAG systems. Here's how to build an advanced implementation:

1. Document Processing and Embedding
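The splitter in this step takes a `chunk_size` and a `chunk_overlap`. As a rough illustration of what overlap means, the sketch below slides a fixed-size character window over a string. This is a simplification for teaching purposes: LangChain's `CharacterTextSplitter` first splits on the separator and then merges the pieces, so its chunk boundaries will differ. The function name is hypothetical.

```python
def sliding_chunks(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Split text into fixed-size windows whose neighbours share chunk_overlap characters."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be non-negative and smaller than chunk_size")

    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


print(sliding_chunks("abcdefghij", chunk_size=4, chunk_overlap=1))
# → ['abcd', 'defg', 'ghij']
```

With the settings used in this guide (500 and 100), each window in this simplified scheme would start 400 characters after the previous one, so a sentence cut at one boundary has a good chance of appearing whole in the neighbouring chunk.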

```python
# Load and process documents
loader = TextLoader("path_to_your_knowledge_base.txt")
documents = loader.load()

# Split on paragraph breaks into chunks of roughly 500 characters
text_splitter = CharacterTextSplitter(
    separator="\n\n",
    chunk_size=500,
    chunk_overlap=100,
    length_function=len
)
document_chunks = text_splitter.split_documents(documents)

# Use high-quality embeddings
embeddings = HuggingFaceEmbeddings(model_name="sentence-transformers/all-mpnet-base-v2")

# Create a vector store that is persisted to ./chroma_db
vectorstore = Chroma.from_documents(
    documents=document_chunks,
    embedding=embeddings,
    collection_name="qwen3_kb",
    persist_directory="./chroma_db"
)
```

2. Building an Advanced RAG Chain
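The retriever in this step uses Maximum Marginal Relevance (MMR), which picks documents that are relevant to the query but not redundant with documents already selected. Below is a minimal pure-Python sketch of the selection rule on toy 2-D vectors. It illustrates the idea and is not Chroma's or LangChain's implementation; the vectors and function names are made up for the example. As with LangChain's `lambda_mult`, values near 1 favour relevance and values near 0 favour diversity.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mmr_select(query, candidates, k, lambda_mult):
    """Return indices of up to k candidates chosen greedily by MMR score."""
    selected = []
    remaining = list(range(len(candidates)))
    while remaining and len(selected) < k:
        def score(i):
            relevance = cosine(query, candidates[i])
            # Similarity to the closest document that was already picked.
            redundancy = max(
                (cosine(candidates[i], candidates[j]) for j in selected),
                default=0.0,
            )
            return lambda_mult * relevance - (1 - lambda_mult) * redundancy

        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


query = [1.0, 0.0]
docs = [[1.0, 0.0], [0.99, 0.1], [0.6, 0.8]]  # doc 1 is a near-duplicate of doc 0

print(mmr_select(query, docs, k=2, lambda_mult=1.0))  # → [0, 1]
print(mmr_select(query, docs, k=2, lambda_mult=0.3))  # → [0, 2]
```

With pure relevance the near-duplicate is chosen second; with a stronger diversity weight the less similar document wins instead. In the retriever, `fetch_k` controls how many candidates enter this selection and `k` how many come out.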

```python
from langchain.prompts import PromptTemplate
from langchain.chains import RetrievalQA

# Configure an optimized retriever
retriever = vectorstore.as_retriever(
    search_type="mmr",  # Maximum Marginal Relevance for diversity
    search_kwargs={
        "k": 5,  # Number of documents returned to the chain
        "fetch_k": 20,  # Number of candidates fetched before MMR re-ranking
        "lambda_mult": 0.7  # 1.0 = pure relevance, 0.0 = maximum diversity
    }
)

# Custom RAG prompt template
rag_prompt_template = """You are an expert AI assistant powered by Qwen3. Use ONLY the following context to answer the question. If you don't know the answer based on the context, say you don't know rather than making up information.

Context information:
{context}

Question: {question}

Think through this step-by-step, considering all relevant information in the context, and provide a detailed, accurate answer:"""

PROMPT = PromptTemplate(
    template=rag_prompt_template,
    input_variables=["context", "question"]
)

# Create the RAG chain with the customized prompt
rag_chain = RetrievalQA.from_chain_type(
    llm=llm,
    chain_type="stuff",  # Put all retrieved chunks into a single prompt
    retriever=retriever,
    return_source_documents=True,
    chain_type_kwargs={"prompt": PROMPT}
)
```

3. Implementing Advanced RAG Query Processing

To make our RAG system even more powerful, let's add query transformation:

```python
def enhanced_rag_query(user_query):
    """Process a user query with advanced RAG techniques"""
    # Step 1: Query improvement with Qwen3
    query_improvement_prompt = (
        "Transform the following user question into a more detailed search query "
        "that will help retrieve relevant information from a knowledge base. "
        f"Return only the rewritten query. Original question: '{user_query}'"
    )
    improved_query = llm.invoke(query_improvement_prompt).content

    # Step 2: Retrieve information with the improved query
    raw_result = rag_chain.invoke({"query": improved_query})

    # Step 3: Collect the answer and the chunks it was based on
    answer = raw_result["result"]
    sources = [doc.page_content for doc in raw_result["source_documents"]]

    return {
        "original_query": user_query,
        "improved_query": improved_query,
        "answer": answer,
        "sources": sources
    }
```

Activating Qwen3's Thinking Mode for Complex Reasoning

Qwen3's unique thinking mode can be activated for complex queries, significantly improving reasoning quality:

```python
# Enabling thinking mode with the OpenRouter API
llm_with_thinking = ChatOpenAI(
    base_url="https://openrouter.ai/api/v1",
    api_key="YOUR_API_KEY",
    model="qwen/qwen3-235b-a22b:free",
    # Provider-specific request fields go in extra_body. Passing them as
    # top-level model_kwargs makes the OpenAI client reject the call as an
    # unexpected keyword argument.
    # The field name below is the one used in this guide; confirm the exact
    # name, and any thinking-budget option, in your provider's documentation.
    extra_body={"enable_thinking": True}
)

# Using thinking mode for complex analysis
def analyze_with_thinking(complex_query):
    """Uses Qwen3's thinking mode for in-depth analysis"""
    result = llm_with_thinking.invoke(
        f"Analyze this thoroughly: {complex_query}"
    )
    return result.content
```

Building Agentic RAG with Tool Usage

Combining RAG with tool-using capabilities creates a more powerful system:

```python
from langchain.agents import AgentExecutor, Tool, ZeroShotAgent
from langchain.chains import LLMChain

# Define a RAG tool
rag_tool = Tool(
    name="KnowledgeBase",
    func=lambda q: rag_chain.invoke({"query": q})["result"],
    description="Useful for finding information about specific topics in the knowledge base"
)

# Create combined tools list (search is already a tool object)
agentic_tools = [rag_tool, weather_tool, search]

# Define the agent. The suffix must contain the declared input variables,
# otherwise the question and scratchpad never reach the model.
agent_prompt = ZeroShotAgent.create_prompt(
    agentic_tools,
    prefix="You are a helpful AI assistant with access to various tools.",
    suffix=(
        "Answer the user's question to the best of your ability using the tools provided.\n\n"
        "Question: {input}\n"
        "Thought:{agent_scratchpad}"
    ),
    input_variables=["input", "agent_scratchpad"]
)

llm_chain = LLMChain(llm=llm, prompt=agent_prompt)

# ZeroShotAgent takes the names of the permitted tools, not the tool objects
agent = ZeroShotAgent(
    llm_chain=llm_chain,
    allowed_tools=[tool.name for tool in agentic_tools]
)

agentic_rag = AgentExecutor.from_agent_and_tools(
    agent=agent,
    tools=agentic_tools,
    verbose=True,
    max_iterations=5
)
```

Performance Optimization and Best Practices

To get the most out of Qwen3 for your AI agents and RAG systems:

Conclusion: Qwen3, the Multilingual Powerhouse for AI Development

Qwen3's hybrid thinking system switches between deep reasoning and quick responses on demand, which is what lets a single model handle everything in this guide, from multi-step travel planning to retrieval-augmented question answering.

The 119-language support isn't just a bullet point; it opens these agents to a global audience. Whether you're building specialized agents, knowledge retrieval systems, or tool-using assistants, the MoE variants keep inference cost down by activating only a fraction of their parameters per token.

The future of AI development isn't just about more parameters; it's about smarter architecture, and Qwen3 is a strong example of that shift.