Are you ready to harness the power of state-of-the-art AI without being tied to costly proprietary systems? The world of open-source Large Language Models (LLMs) is experiencing a surge in innovation, providing developers and businesses with unprecedented control and flexibility.

From coding specialists to multilingual powerhouses, these models provide the tools to build truly custom solutions.

This guide cuts through the noise, breaking down the 6 best open-source LLMs available today. We will compare their unique strengths, performance benchmarks, and ideal use cases to help you select the perfect model for your next groundbreaking project.

Why Open Source LLMs Are Redefining AI Development

Developers spent $2.1 billion on proprietary AI APIs in 2026, money they did not need to spend. Open source LLMs are redefining AI development by democratizing access to powerful generative technology. They offer a practical alternative to proprietary systems, giving developers and organizations greater control and flexibility.

A primary advantage is enhanced data privacy and security, as these models can be self-hosted on private infrastructure, ensuring sensitive information never leaves the organization's control. This addresses major compliance and data sovereignty concerns.

Furthermore, open source LLMs eliminate vendor lock-in and the unpredictable, often high costs of pay-per-token API calls associated with closed-source models. This cost-effectiveness allows for scalable deployment without prohibitive licensing fees.
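To make the cost argument concrete, here is a minimal sketch of the break-even arithmetic between a pay-per-token API and a flat monthly self-hosting bill. The function names and every dollar figure are hypothetical, chosen only to illustrate the calculation; real prices vary by provider and hardware.

```python
def monthly_api_cost(tokens_per_month: int, price_per_million_tokens: float) -> float:
    """Cost of a pay-per-token API for a given monthly token volume."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def breakeven_tokens(fixed_hosting_cost: float, price_per_million_tokens: float) -> float:
    """Monthly token volume above which flat-rate self-hosting is cheaper."""
    if price_per_million_tokens <= 0:
        raise ValueError("price_per_million_tokens must be positive")
    return fixed_hosting_cost / price_per_million_tokens * 1_000_000


if __name__ == "__main__":
    # Hypothetical figures, not quotes from any vendor.
    api_price = 2.00        # dollars per million tokens (assumed)
    hosting_cost = 1_500.0  # dollars per month for a self-hosted GPU server (assumed)
    threshold = breakeven_tokens(hosting_cost, api_price)
    print(f"Self-hosting breaks even at {threshold:,.0f} tokens per month")
```

Below the threshold the API is cheaper; above it, the fixed hosting bill wins. The sketch ignores engineering time and idle capacity, which can shift the break-even point considerably.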

Finally, their transparency is a catalyst for innovation. With full access to the model's architecture and code, developers can perform detailed audits, fix bugs, and customize the model for highly specialized, domain-specific tasks, a level of customization that black-box proprietary solutions do not allow.

This open, collaborative environment accelerates progress and fosters a more robust AI ecosystem.

Smarter AI Development with Leading Open Source LLMs

| Open Source LLMs | Parameter Size | License |
|---|---|---|
| Llama 3 | 8B to 405B | Permissive License |
| Mixtral 8x7B | 12.9B active | Apache 2.0 |
| Gemma | Lightweight, optimised | Commercial Use |
| Qwen | Multiple sizes | Permissive License |
| DeepSeek-V3 | 671B total | Open Source |
| Yi-34B | 34B | Permissive License |

1. Meta Llama 3

Meta Llama 3 represents the pinnacle of openly available large language models, setting a new standard for performance and versatility. As the next generation in the Llama family, these models are designed to compete with the best proprietary AI systems available today.

They demonstrate state-of-the-art results across a wide range of industry benchmarks, showing significant gains in reasoning, code generation, and instruction following.

Llama 3 is available in 8B and 70B parameter versions, and the Llama 3.1 release added a massive 405B version, giving developers and enterprises a wide range of sizes to choose from. This collection of pre-trained and instruction-tuned models supports a broad spectrum of use cases, from simple chatbots to complex AI applications, making it one of the best open source LLMs for driving innovation.

Uses and Applications:

Standout Features:

👉 Best For: Developers and businesses seeking a powerful, scalable, and versatile foundation model.

User Testimonial: “It's wildly good; it made a perfect snake game very easily.”

Why Choose Llama 3 for Students?

Llama 3 lets students build with a top-tier model, offering hands-on experience with advanced AI that mirrors professional-grade systems.

2. Mixtral 8x7B

From the creators at Mistral AI, Mixtral 8x7B introduces a groundbreaking Sparse Mixture-of-Experts (SMoE) architecture. This efficient LLM design delivers the power of a 46.7B parameter model while only using 12.9B parameters per token, resulting in up to 6x faster inference speeds compared to models like Llama 2 70B.

This approach provides an outstanding cost-performance ratio, matching or exceeding GPT-3.5 on most standard benchmarks. With strong multilingual capabilities in English, French, German, Spanish, and Italian, and an Apache 2.0 license, Mixtral is built for high-throughput, global applications.
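The sparse routing idea can be sketched in a few lines. The toy example below assumes the commonly described Mixtral setup of eight experts with two selected per token, and uses scalar functions in place of real feed-forward blocks; the names `top_k_route` and `moe_forward` are illustrative and not taken from Mistral's code.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[float], float]


def top_k_route(gate_logits: Sequence[float], k: int = 2) -> List[Tuple[int, float]]:
    """Select the k highest-scoring experts and softmax their logits into mixing weights."""
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:k]
    peak = max(gate_logits[i] for i in chosen)  # subtract the max for numerical stability
    exps = [math.exp(gate_logits[i] - peak) for i in chosen]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(chosen, exps)]


def moe_forward(x: float, experts: Sequence[Expert],
                gate_logits: Sequence[float], k: int = 2) -> float:
    """Evaluate only the chosen experts and combine their outputs by gate weight."""
    return sum(weight * experts[i](x) for i, weight in top_k_route(gate_logits, k))


if __name__ == "__main__":
    # Eight toy experts; expert i simply scales its input by (i + 1).
    experts = [lambda x, s=i + 1: x * s for i in range(8)]
    # Hypothetical router scores for a single token.
    gate_logits = [0.1, 2.0, -1.0, 0.5, 1.0, 0.0, -0.3, 1.2]
    print(top_k_route(gate_logits))
    print(moe_forward(1.0, experts, gate_logits))
    # Share of parameters touched per token, using the figures quoted above.
    print(f"active fraction: {12.9 / 46.7:.1%}")
```

Because the six unselected experts are never called, compute per token tracks the active parameter count rather than the total, which is where the speed advantage comes from. All expert weights still have to be held in memory.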

Uses and Applications:

Standout Features:

👉 Best For: Startups and enterprises seeking elite performance without high computational costs.

User Testimonial: “The speed and efficiency of Mixtral are game-changing for our platform.”

Why Choose Mixtral for Students?

Mixtral's efficient design lets students run powerful AI models on less powerful hardware, making advanced AI more accessible.

3. Google Gemma

Gemma is Google's family of lightweight, state-of-the-art open models, built from the same research and technology used to create the powerful Gemini models. This initiative is a significant leap forward, designed to make advanced AI more accessible to developers and researchers worldwide. Gemma models are engineered for responsible AI development and offer exceptional performance for their size.

With versions optimized for both high-power servers and on-device applications, Gemma provides remarkable flexibility. The latest Gemma 3 series adds multimodal capabilities, supporting both text and image inputs, a 128k context window, and multilingual support for over 140 languages, solidifying its position among the top open source AI models.

Uses and Applications:

Standout Features:

👉 Best For: Researchers and developers who need efficient, powerful, and responsible AI models.

User Testimonial: “Gemma excels on mathematical reasoning tests, outperforming many larger models.”

Why Choose Gemma for Students?

Gemma gives students a direct line to Google's cutting-edge AI research, perfect for running powerful models on personal laptops for study.

4. Alibaba Qwen

The Qwen series from Alibaba Cloud is a family of powerful large language models with a strong focus on multilingual capabilities and advanced tool use. Initially trained on a massive multilingual dataset with an emphasis on Chinese and English, Qwen models are designed to function as highly capable AI agents from their inception.

The series has rapidly evolved, with Qwen3-Max becoming Alibaba's first trillion-parameter model, showcasing an ultra-long context window of 262,144 tokens. Qwen models consistently demonstrate impressive performance in selecting and using tools, making them a top choice for building complex, automated workflows and intelligent systems that can interact with external APIs.
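Tool use in an agent loop usually comes down to the model emitting a structured call that the application parses and dispatches. The sketch below assumes a simple JSON shape with `name` and `arguments` keys; that format, the `TOOLS` registry, and both toy tools are hypothetical and do not reproduce Qwen's actual function-calling protocol.

```python
import json
from typing import Any, Callable, Dict


def add(a: float, b: float) -> float:
    """Toy tool: add two numbers."""
    return a + b


def word_count(text: str) -> int:
    """Toy tool: count whitespace-separated words."""
    return len(text.split())


# Hypothetical registry mapping tool names to Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {"add": add, "word_count": word_count}


def dispatch_tool_call(raw_call: str) -> Any:
    """Parse a model-emitted JSON tool call and run the matching tool."""
    call = json.loads(raw_call)
    name = call.get("name")
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name!r}")
    return TOOLS[name](**call.get("arguments", {}))


if __name__ == "__main__":
    # In a real agent this string would come from the model's output.
    model_output = '{"name": "add", "arguments": {"a": 2, "b": 3}}'
    print(dispatch_tool_call(model_output))
```

In practice the tool result is fed back to the model as a new message so it can decide on the next step. Rejecting unknown tool names, as done here, keeps the model from invoking anything outside the registry.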

Uses and Applications:

Standout Features:

👉 Best For: Enterprises that require robust multilingual support and advanced AI agent functionality.

User Testimonial: “It correctly selected and used external tools with over 95% accuracy.”

Why Choose Qwen for Students?

Qwen empowers students to explore building next-generation AI agents, giving them a unique and valuable skill set for the future job market.

5. DeepSeek-V3

DeepSeek-V3 has established itself as the pinnacle of open-source AI, particularly for developers and technical users. This Mixture-of-Experts (MoE) model, containing 671 billion parameters, consistently outperforms other open-source models in demanding benchmarks for coding, math, and reasoning, even rivaling top closed-source models like GPT-4o.

What makes DeepSeek-V3 truly remarkable is its training efficiency; it was developed at a fraction of the cost of its competitors. This combination of elite performance and cost-effectiveness makes it an ideal choice for organizations looking to deploy top-tier AI without the massive expense, solidifying its reputation as the best open-source AI model for technical tasks.

Uses and Applications:

Standout Features:

👉 Best For: Coders, engineers, and researchers who need best-in-class technical reasoning capabilities.

User Testimonial: “DeepSeek-V3 decisively outperforms competitors like Llama 3.1 405B on benchmarks.”

Why Choose DeepSeek-V3 for Students?

DeepSeek-V3 is the ultimate tool for computer science students, offering unparalleled coding assistance to accelerate projects and deepen technical understanding.

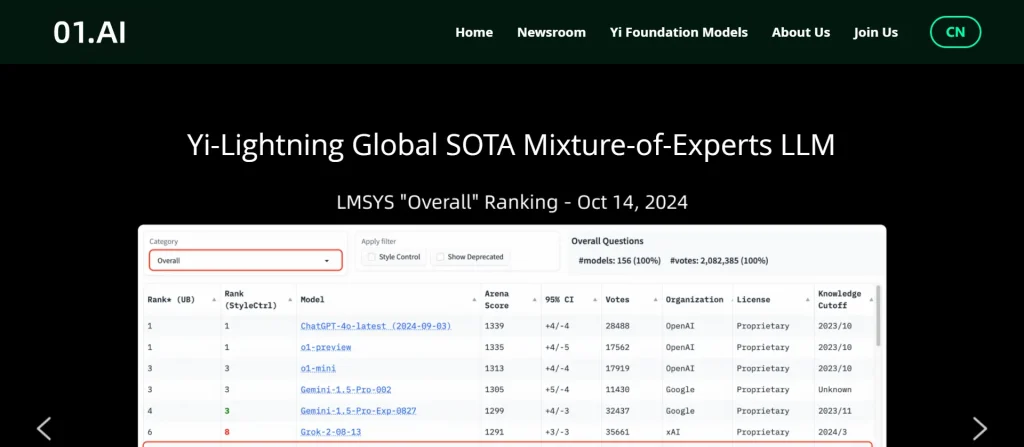

6. 01.AI Yi-34B

The Yi-34B model from 01.AI is a powerful bilingual language model that strikes an impressive balance between high performance and computational efficiency. Architecturally similar to Llama, it was trained from scratch on over 3 trillion tokens of English and Chinese data, demonstrating exceptional capabilities in language understanding and reasoning.

Yi-34B's key advantage is its ability to rival the performance of much larger models like Llama 2 70B while running on high-end consumer-grade GPUs. This accessibility, combined with its strong benchmark results and a vision-language extension (Yi-VL), makes it a leading choice for developers who need powerful AI without requiring enterprise-level infrastructure.
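Whether a model fits on a given GPU is largely a matter of arithmetic: parameter count times bytes per parameter. The helper below is a rough sketch covering weights only; `weight_memory_gb` is a hypothetical name, and the estimate ignores the KV cache, activations, and framework overhead, all of which add to the real footprint.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    yi_params = 34e9  # 34 billion parameters, as stated above
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: about {weight_memory_gb(yi_params, bits):.0f} GB")
```

At 16-bit precision the weights alone come to about 68 GB, which is beyond any single consumer card. At 4 bits they shrink to about 17 GB, which is why quantized builds are the usual route to running a 34B model on consumer hardware.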

Uses and Applications:

Standout Features:

👉 Best For: Developers needing strong bilingual performance without access to enterprise hardware.

User Testimonial: “The Yi-34B model matches the performance of GPT-3.5 on consumer devices.”

Why Choose Yi-34B for Students?

Yi-34B allows students to experiment with high-performance, bilingual AI on their own computers, lowering the barrier to entry for AI development.

The Future of Open Source LLMs: What to Expect Next

The future of open source LLMs is trending towards smaller, more efficient, and highly specialized models. Instead of relying solely on massive, general-purpose models, the community is focusing on creating compact yet powerful LLMs optimized for specific tasks like code generation or running on edge devices.

This will make advanced AI more accessible and computationally feasible for a wider range of applications. We can expect continued innovation in model efficiency through techniques like quantization, which reduces model size without significantly impacting performance.
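As a rough illustration of what quantization does, the sketch below applies symmetric per-tensor int8 quantization to a short list of weights and then reconstructs them. This is one simple scheme chosen for clarity, not the method used by any particular model or library, and the function names are illustrative.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integers and the shared scale."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.003, 1.0]  # toy values, not from a real model
    quantized, scale = quantize_int8(weights)
    restored = dequantize(quantized, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(quantized, scale)
    print(f"worst-case rounding error: {worst:.5f}")
```

Each weight is stored in one byte instead of two or four, and the rounding error per weight is bounded by half the scale. Production schemes typically use per-channel or per-group scales to keep that error small when a few weights are much larger than the rest.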

Collaboration between large enterprises and research institutions is also likely to increase, leading to the development of powerful new foundational models built on shared expertise. Another key trend is the growth of multimodality, where open source models will become increasingly proficient at understanding and generating content from multiple data types, including text, images, and audio.

Finally, there will be a heightened focus on developing robust frameworks for AI safety, ethics, and bias mitigation within the open-source community to ensure responsible deployment.

Your Next Move

To wrap up, the open-source ecosystem is rich with powerful and diverse large language models.

From models excelling at code and mathematics to those offering unparalleled efficiency or broad multilingual support, there is a solution for every need.

These tools empower developers, researchers, and enterprises to innovate without the constraints of proprietary systems.

For anyone looking to build the next generation of AI applications, your journey starts here. Explore these state-of-the-art models and begin creating today.