Tired of struggling with basic setups when creating AI apps? These must-know tools for building AI apps boost efficiency and spark innovation, from streamlining workflows to mastering machine learning integrations.

Find out how AI app development tools can supercharge your projects, slash development time, and deliver apps that stand out in the crowded tech space.

This guide packs essential strategies for developers and enthusiasts eyeing top results.

The Current State of AI Development Tools Market

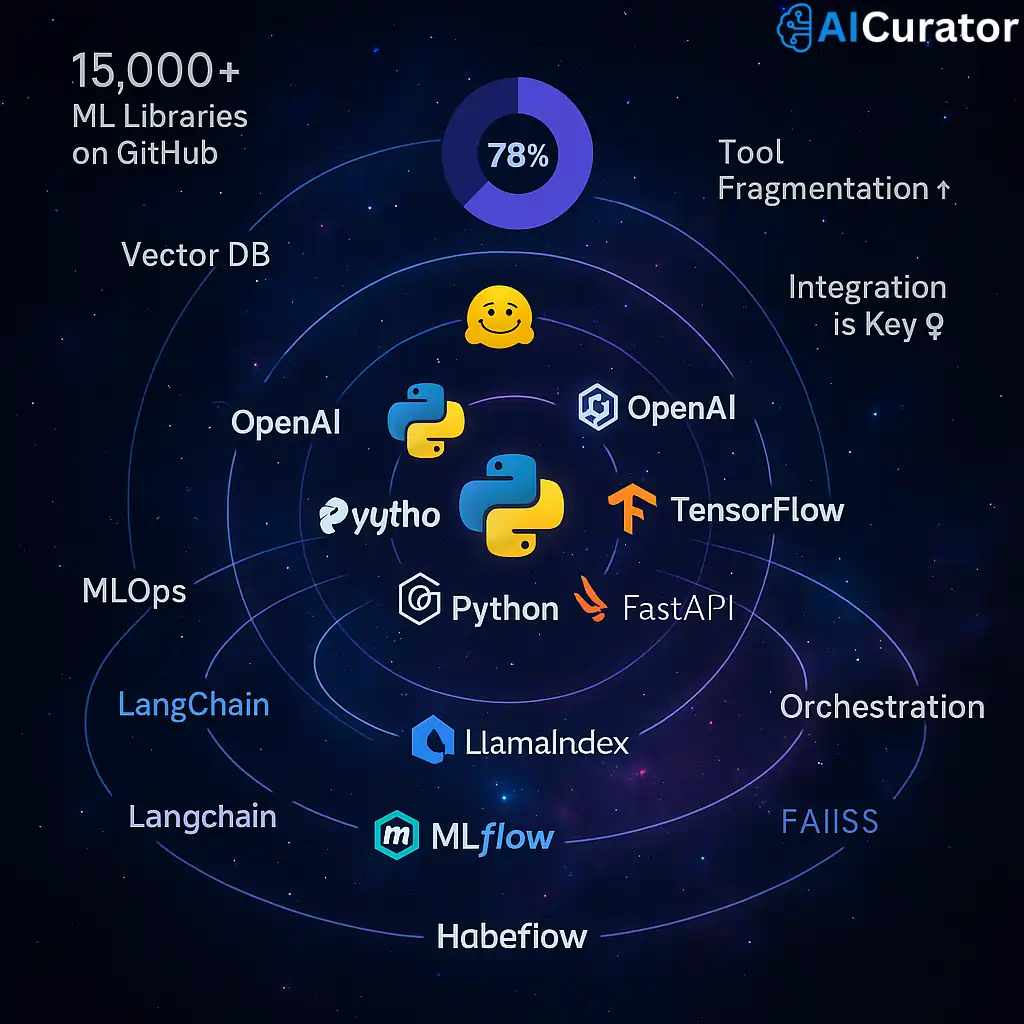

The AI development tools ecosystem has grown exponentially, with over 15,000 machine learning frameworks and libraries available across GitHub repositories. Recent surveys show that 78% of developers now use multiple AI development frameworks simultaneously, creating complex but powerful tech stacks.

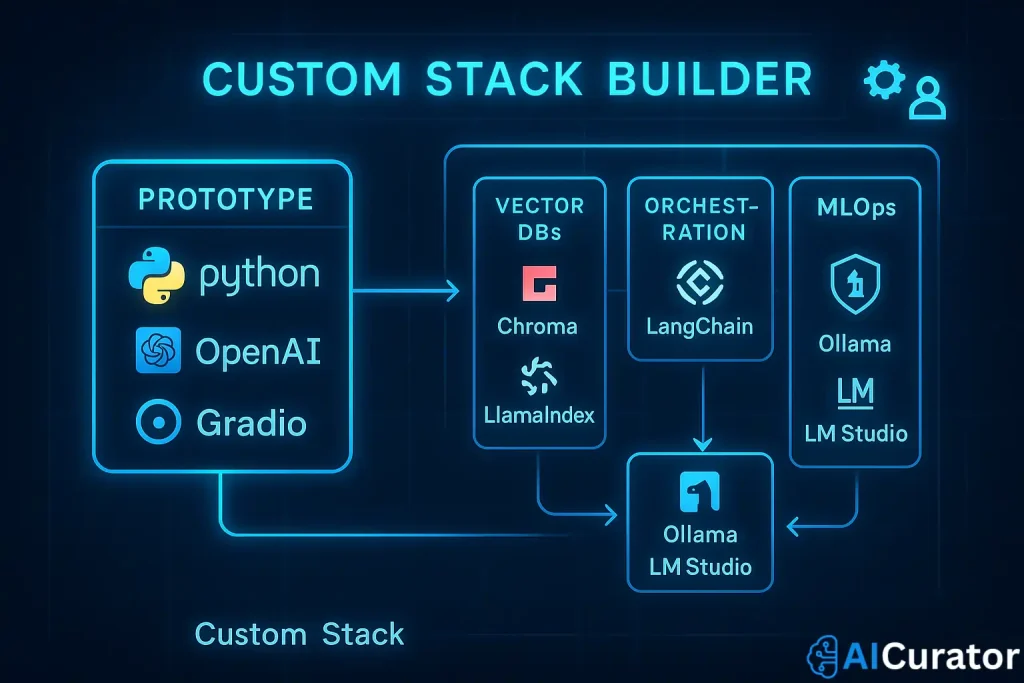

Popular combinations include Python-based LLM integration paired with vector databases for RAG systems, whilst MLOps platforms handle deployment automation. This fragmented landscape means selecting the right AI workflow automation tools becomes crucial for project success.

Modern AI agents require sophisticated orchestration, making tool compatibility and seamless integration non-negotiable factors for serious developers building production-ready applications.

AI Development Tools Comparison for App Builders

| Category | Tool/Platform | Pricing Model |
| --- | --- | --- |
| Programming Language | Python | Free and open-source, with optional paid extensions via cloud services like AWS or Google Cloud |
| | TypeScript | Free and open-source, no direct costs; integrates with paid JS ecosystems if needed |
| Language Models & APIs | Gemini 2.5 Pro | Pay-per-use API: around $0.002 per 1K input tokens, free tier for testing |
| | OpenAI GPT-4o/3.5 | Tiered: GPT-4o at $2 per 1M input tokens, GPT-3.5 at $2 per 1M; free playground |
| Self-Hosting LLMs | Ollama | Completely free, open-source; no subscriptions |
| | LM Studio | Free for core use; optional donations or premium features in future updates |
| Orchestration Framework | LangChain | Free open-source; enterprise plans start at $39/month for advanced support |
| | LlamaIndex | Free core version; paid tiers for enterprise scaling from $50/month |
| Vector DB & Retrieval | Chroma | Free open-source; cloud-hosted options from $20/month |
| | Weaviate | Free open-source; enterprise cloud plans from $25/month per instance |
| UI Development | Gradio | Free and open-source; Hugging Face hosting adds no extra cost |
| | Dash (Plotly) | Free open-source; enterprise edition from $495/year for teams |
| MLOps & Deployment | Airflow | Free Apache project; managed services like Astronomer start at $100/month with a pay-as-you-go option |
| | SageMaker (AWS) | Pay-as-you-go: training from $0.10/hour, inference varies; free tier for basics |
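To make the per-token prices concrete, here is a small back-of-the-envelope sketch. The rates are simply the figures quoted in the table above, not authoritative pricing, and `estimate_cost` and `per_thousand_to_per_million` are hypothetical helper names.

```python
def estimate_cost(tokens: int, usd_per_million: float) -> float:
    """Return the approximate cost in USD for a given number of tokens."""
    return tokens / 1_000_000 * usd_per_million


def per_thousand_to_per_million(usd_per_thousand: float) -> float:
    """Convert a per-1K-token rate into a per-1M-token rate."""
    return usd_per_thousand * 1_000


# Rates as quoted in the comparison table (assumed; check the provider's pricing page).
GPT_4O_INPUT_USD_PER_MILLION = 2.0
GEMINI_INPUT_USD_PER_THOUSAND = 0.002

monthly_input_tokens = 250_000
print(estimate_cost(monthly_input_tokens, GPT_4O_INPUT_USD_PER_MILLION))
print(per_thousand_to_per_million(GEMINI_INPUT_USD_PER_THOUSAND))
```

Normalising every rate to the same unit (per 1M tokens) before comparing providers avoids the easy mistake of comparing a per-1K price with a per-1M price.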

1. Programming Languages

Programming languages form the foundation of any AI solution, powering data ingestion, neural network training, and API integration. Strong language support streamlines coding, improves reproducibility, and enables fast deployment of intelligent features essential for developing high-performance AI applications.

1.1: Python

AI app development often kicks off with Python, thanks to its rich machine learning libraries and readable syntax. It’s the go-to for prototyping, data wrangling, and deploying models with frameworks like TensorFlow, PyTorch, and scikit-learn.

Python’s popularity means you’ll find endless tutorials, pre-trained models, and a buzzing AI community—making it a must-have for any AI toolkit.

Quick demo: to show how painless a Retrieval-Augmented Generation (RAG) prototype can be in Python, here’s a short LangChain + Chroma example:

    # Import paths match older LangChain releases; newer releases move these
    # classes into the langchain_openai and langchain_community packages.
    from langchain.chat_models import ChatOpenAI
    from langchain.embeddings import OpenAIEmbeddings
    from langchain.vectorstores import Chroma
    from langchain.chains import RetrievalQA

    # 1. Create vector store (the OpenAI classes read the OPENAI_API_KEY environment variable)
    db = Chroma(embedding_function=OpenAIEmbeddings())

    # 2. Add sample document
    db.add_texts(["LangChain makes RAG pipelines easy."])

    # 3. Build chatbot
    qa_chain = RetrievalQA.from_chain_type(
        llm=ChatOpenAI(model_name="gpt-3.5-turbo"),
        retriever=db.as_retriever()
    )

    print(qa_chain("What does LangChain do?"))

Key Parameters:

Best For

Machine learning workflows, data analysis, AI research

1.2: TypeScript

TypeScript brings static typing to JavaScript, giving devs confidence in building robust AI-powered web apps. It’s a favourite for frontends and serverless APIs, especially when integrating with LLM APIs or visualising AI outputs.

TypeScript’s type safety and tooling speed up debugging and help scale AI projects with fewer bugs.

Key Parameters:

Best For

Full-stack AI applications, API integrations, web interfaces

2. Language Models & APIs

Language models and APIs drive core reasoning, content creation, and natural language understanding in AI apps. These APIs provide direct access to pre-trained, state-of-the-art AI engines, letting developers integrate tasks like summarisation, translation, and chat seamlessly, whilst reducing time-to-market for machine learning projects.

2.1: Gemini 2.5 Pro

Google’s Gemini 2.5 Pro stands out with a staggering 1M+ token context window and true multimodal input—text, code, images, audio, even video. It’s built for “step-by-step thinking,” excelling in code generation, document analysis, and complex reasoning.

Developers can feed in entire codebases or long research papers and get coherent, context-aware responses.

Key Parameters:

Best For

Complex analysis, coding assistance, document processing

2.2: OpenAI GPT-4o / GPT-3.5

OpenAI’s GPT-4o and GPT-3.5 power everything from chatbots to code assistants. GPT-4o brings real-time responses, advanced function calling, and strong multilingual support. Both models shine in natural language understanding, creative writing, and structured data tasks.

Their APIs are developer-friendly, with robust documentation and SDKs for Python, Node.js, and more—ideal for conversational AI, search, and automation apps.
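Function calling is easier to picture with the application side written out. In this minimal sketch the model's reply is simulated: it names a function and supplies JSON-encoded arguments, and the app looks the function up and runs it. The `get_weather` tool, the `TOOLS` registry, and `dispatch_tool_call` are all hypothetical names, and no API request is made.

```python
import json
from typing import Any, Callable, Dict


def get_weather(city: str) -> str:
    """Hypothetical tool: a real app would call a weather service here."""
    return f"Sunny in {city}"


# Registry mapping tool names the model may request to local Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch_tool_call(tool_call: Dict[str, str]) -> Any:
    """Run the function named in a tool call with its JSON-encoded arguments."""
    name = tool_call["name"]
    if name not in TOOLS:
        raise ValueError(f"Model requested unknown tool: {name}")
    arguments = json.loads(tool_call["arguments"])
    return TOOLS[name](**arguments)


# Simulated tool call, shaped like the name/arguments pair an LLM API returns.
simulated_call = {"name": "get_weather", "arguments": '{"city": "Lisbon"}'}
print(dispatch_tool_call(simulated_call))
```

Keeping an explicit registry means the model can only trigger functions you have deliberately exposed, which is a sensible default for anything that touches real systems.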

Key Parameters:

Best For

Content generation, conversational AI, text analysis

LLM APIs – Rate-Limit & Fallback Guidance

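When you call Gemini Pro, GPT-4o, or any public LLM endpoint at scale, two operational realities kick in: providers enforce rate limits, and any single endpoint can become unavailable, so you need a fallback.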

Building those safeguards early prevents overnight outages and surprise overages—an often-missed refinement that separates prototypes from production-ready apps.
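One common way to build those safeguards is retry with exponential backoff plus an ordered list of fallback providers. The sketch below is provider-agnostic and uses stand-in functions instead of real SDK calls; `RateLimitError`, `call_with_backoff`, and `call_with_fallback` are hypothetical names, and a real integration would catch the SDK's own rate-limit exception instead.

```python
import random
import time
from typing import Callable, Sequence


class RateLimitError(Exception):
    """Stand-in for a provider's HTTP 429 error (hypothetical name)."""


def call_with_backoff(call: Callable[[str], str], prompt: str,
                      max_retries: int = 3, base_delay: float = 0.5,
                      sleep: Callable[[float], None] = time.sleep) -> str:
    """Retry a rate-limited call, doubling the wait after each failure."""
    for attempt in range(max_retries + 1):
        try:
            return call(prompt)
        except RateLimitError:
            if attempt == max_retries:
                raise
            # Exponential backoff with jitter so clients do not retry in lockstep.
            sleep(base_delay * (2 ** attempt) + random.uniform(0, 0.1))
    raise RuntimeError("max_retries must be zero or greater")


def call_with_fallback(providers: Sequence[Callable[[str], str]], prompt: str,
                       **backoff_kwargs) -> str:
    """Try each provider in order, moving on when one stays rate-limited."""
    last_error: Exception = RuntimeError("no providers configured")
    for provider in providers:
        try:
            return call_with_backoff(provider, prompt, **backoff_kwargs)
        except RateLimitError as error:
            last_error = error
    raise last_error


def always_limited(prompt: str) -> str:
    """Simulated primary model that is permanently rate-limited."""
    raise RateLimitError("429")


def backup_model(prompt: str) -> str:
    """Simulated secondary model that always answers."""
    return f"backup answer to: {prompt}"


print(call_with_fallback([always_limited, backup_model], "ping",
                         max_retries=1, sleep=lambda seconds: None))
```

Passing `sleep` as a parameter keeps the demo and any unit tests instant; in production you would leave the default `time.sleep` in place.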

3. Self-Hosting LLMs

Self-hosting large language models gives greater data control, improved privacy, and eliminates reliance on third-party servers. Bringing LLMs on-premises supports compliance, safeguards sensitive information, and enables offline experimentation—vital for enterprises and AI developers focused on secure, customisable solutions.

3.1: Ollama

Ollama lets you run powerful LLMs locally, keeping your data private and off the cloud. It’s Docker-friendly, easy to set up, and supports a range of open-source models. Perfect for privacy-first apps, regulated industries, or anyone wanting total control over their AI stack.
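As a rough illustration of what "running locally" looks like from application code, the sketch below talks to Ollama's local REST endpoint using only the standard library. It assumes the server is running on its default port and that a model has already been pulled; the model name `llama3` and the helper names are assumptions for illustration.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local port


def build_generate_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a non-streaming POST request for a locally running Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3") -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_generate_request(prompt, model)) as response:
        return json.loads(response.read())["response"]
```

With the server up, `generate("Why is the sky blue?")` returns the model's text. Nothing in the request leaves your machine, which is the point of the privacy argument above.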

Key Parameters:

Best For

Privacy-sensitive applications, offline AI deployment

3.2: LM Studio

LM Studio brings GPU-accelerated LLMs to your laptop or desktop. With support for NVIDIA RTX and Apple Silicon, you can run models like Llama, Gemma, and Mistral smoothly—even upload documents for private analysis. LM Studio is ideal for offline workflows, local dev, and experimenting with new models.

Key Parameters:

Best For

GPU-accelerated inference, local development, model testing

4. Orchestration Frameworks

Orchestration frameworks allow for modular workflows, logical chaining, and smart automation between AI services, databases, and APIs. They streamline pipeline creation, automate repetitive tasks, and support building advanced AI agents, optimising operational efficiency in scalable AI development environments.

4.1: LangChain

LangChain is the backbone for building advanced AI workflows. It lets you chain prompts, manage memory, and create agentic pipelines—think RAG apps, document QA, or multi-step assistants.

LangChain’s modular design supports integration with LLMs, vector stores, APIs, and more. It’s a hit with devs building retrieval-augmented generation (RAG) systems and agent-based AI tools. Python and TypeScript support means you can use it across stacks.
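The idea of chaining prompts with memory can be shown without any framework. This is a plain-Python sketch of the pattern, not LangChain's API: `make_chain`, `build_prompt`, `fake_llm`, and `parse_output` are hypothetical names, and the "model" is a stub that echoes its input.

```python
from typing import Callable, List

Step = Callable[[str], str]


def make_chain(*steps: Step) -> Step:
    """Compose steps so each one receives the previous step's output."""
    def run(value: str) -> str:
        for step in steps:
            value = step(value)
        return value
    return run


memory: List[str] = []  # conversation history shared across calls


def build_prompt(question: str) -> str:
    """Prepend earlier questions so the model sees the conversation so far."""
    history = " | ".join(memory)
    memory.append(question)
    return f"History: {history}\nQuestion: {question}"


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; echoes the last line of the prompt."""
    return "ANSWER -> " + prompt.splitlines()[-1]


def parse_output(text: str) -> str:
    """Strip the marker the fake model adds."""
    return text.removeprefix("ANSWER -> ")


chain = make_chain(build_prompt, fake_llm, parse_output)
print(chain("What is RAG?"))
```

An orchestration framework adds a great deal on top of this (retries, streaming, tracing, tool use), but the core shape is the same: small steps with a common interface, composed in order.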

Key Parameters:

Best For

Complex AI workflows, RAG systems, multi-step reasoning

4.2: LlamaIndex

LlamaIndex bridges LLMs with your private or custom data. It supports 160+ data connectors (APIs, SQL, PDFs, etc.), making it easy to build RAG pipelines and document Q&A bots.

LlamaIndex’s context augmentation ensures your AI app can answer queries using up-to-date, domain-specific info. Its built-in memory buffer and simple API make it a favourite for enterprise search, knowledge bases, and custom chatbots.
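Context augmentation boils down to placing retrieved text in front of the question before it reaches the model. The sketch below shows that step in plain Python under a simple character budget; it is not LlamaIndex's API, and `build_augmented_prompt` and the sample chunks are hypothetical.

```python
from typing import List


def build_augmented_prompt(question: str, chunks: List[str], max_chars: int = 500) -> str:
    """Pack retrieved chunks into the prompt until the character budget is used."""
    selected: List[str] = []
    used = 0
    for chunk in chunks:  # chunks are assumed to arrive sorted by relevance
        if used + len(chunk) > max_chars:
            break
        selected.append(chunk)
        used += len(chunk)
    context = "\n".join(f"- {chunk}" for chunk in selected)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


chunks = [
    "LlamaIndex connects LLMs to private data.",
    "It ships many data connectors.",
    "Unrelated filler text.",
]
print(build_augmented_prompt("What does LlamaIndex do?", chunks, max_chars=80))
```

A production pipeline would budget in tokens instead of characters, but the trade-off is the same: the most relevant chunks go in first and the rest are dropped once the budget is spent.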

Key Parameters:

Best For

Enterprise data integration, knowledge bases, document analysis

5. Vector Databases & Retrieval

Vector databases underpin efficient search, retrieval-augmented generation (RAG), and semantic matching in AI apps by storing, indexing, and querying high-dimensional embeddings. Their precise clustering and rapid similarity search are crucial for intelligent document search, recommendation engines, and advanced data exploration systems.
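To see what similarity search over embeddings means in practice, here is a brute-force sketch using cosine similarity over a tiny in-memory index. The three-dimensional vectors are made up for illustration; real embeddings have hundreds or thousands of dimensions, and production vector databases typically rely on approximate nearest-neighbour indexes instead of scanning every vector.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: Vector, index: Dict[str, Vector], k: int = 2) -> List[Tuple[str, float]]:
    """Score every stored vector against the query and keep the k best."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy index: document id -> made-up embedding.
index = {
    "cats": [0.9, 0.1, 0.0],
    "dogs": [0.8, 0.2, 0.1],
    "tax-law": [0.0, 0.1, 0.9],
}
print(top_k([1.0, 0.0, 0.0], index, k=2))
```

The query vector points in nearly the same direction as the "cats" and "dogs" embeddings, so those two rank above "tax-law".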

5.1: Chroma

Chroma is an open-source vector database built for AI. It stores embeddings, powers semantic search, and integrates natively with Python and JavaScript. Chroma’s blazing speed, simple SDK, and support for local or scalable backends make it a top pick for RAG, recommendation engines, and AI memory.

Key Parameters:

Best For

Semantic search, RAG applications, document similarity

5.2: Weaviate

Weaviate is a scalable, enterprise-grade vector database with hybrid search (text + vector), GraphQL API, and auto-scaling. It’s cloud-ready, supports multi-cloud, and is used by leading AI teams for knowledge graphs and semantic search at scale.
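Hybrid search blends a keyword score with a vector-similarity score, usually through a weighting parameter. The sketch below illustrates the idea only: the keyword scorer is a crude word-overlap measure standing in for BM25, the vector scores are supplied by hand, and the function names are hypothetical. It is not Weaviate's actual fusion algorithm.

```python
from typing import Dict, List, Tuple


def keyword_score(query: str, text: str) -> float:
    """Fraction of query words that appear in the text (a crude stand-in for BM25)."""
    query_words = set(query.lower().split())
    text_words = set(text.lower().split())
    return len(query_words & text_words) / len(query_words) if query_words else 0.0


def hybrid_rank(query: str, docs: Dict[str, str], vector_scores: Dict[str, float],
                alpha: float = 0.5) -> List[Tuple[str, float]]:
    """Blend scores: alpha=1.0 is pure vector search, alpha=0.0 is pure keyword search."""
    ranked = []
    for doc_id, text in docs.items():
        score = (alpha * vector_scores.get(doc_id, 0.0)
                 + (1 - alpha) * keyword_score(query, text))
        ranked.append((doc_id, score))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


docs = {"a": "refund policy for orders", "b": "how to return a purchase"}
vector_scores = {"a": 0.4, "b": 0.9}  # assumed similarity scores from an embedding model
print(hybrid_rank("refund policy", docs, vector_scores, alpha=0.5))
```

Document "a" matches the query words exactly while "b" is only semantically close, so the ranking flips depending on how far `alpha` leans towards keyword or vector matching.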

Key Parameters:

Best For

Enterprise search, recommendation systems, content discovery

6. UI Development Interfaces

UI development tools facilitate the creation of interactive interfaces for AI models. They make complex AI functionality accessible and visually engaging, speeding up user feedback cycles and boosting end-user adoption—crucial factors when launching machine learning-powered software and demos.

6.1: Gradio

Gradio makes it dead simple to create interactive UIs for your AI models. With prebuilt components and instant shareable links, you can demo models, collect user feedback, and deploy prototypes in minutes, all in Python. Gradio is the fastest way to get your AI in front of users.
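A minimal sketch of that workflow, assuming Gradio is installed (`pip install gradio`): a plain Python function is wrapped in `gr.Interface` with a text input and a text output. The `shout` function is a toy stand-in for a real model, and `build_demo` is a hypothetical helper name.

```python
def shout(text: str) -> str:
    """Toy 'model': a real app would run inference here."""
    return text.upper() + "!"


def build_demo():
    """Wrap the function in a text-in, text-out web UI."""
    import gradio as gr  # imported lazily so shout() works without Gradio installed

    return gr.Interface(fn=shout, inputs="text", outputs="text")
```

Calling `build_demo().launch(share=True)` starts a local server and, with `share=True`, also prints a temporary public link you can send to testers.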

Key Parameters:

Best For

ML model demos, rapid prototypes, user testing

6.2: Dash (by Plotly)

Dash is the go-to for building data-rich, visually stunning AI dashboards. It blends Python’s data science stack with interactive web components, letting you craft analytics tools, ML dashboards, and custom AI frontends.

Dash’s enterprise features include authentication, deployment, and scalable hosting—making it perfect for production-grade AI visualisation.

Key Parameters:

Best For

Analytics dashboards, business intelligence, data exploration

7. MLOps & Deployment

MLOps and deployment solutions automate training, scheduling, monitoring, and scaling of AI models across pipelines. Streamlining deployment cuts errors, enables version control, and ensures robust, reliable AI software reaches end users securely, fuelling successful long-term AI app performance.

7.1: Airflow

Airflow orchestrates complex ML pipelines with visual scheduling, monitoring, and extensibility. It’s ideal for automating data ingestion, model training, and deployment workflows.

With Python-based DAGs, you can track every step, trigger retraining, and integrate with cloud or on-prem resources. Airflow’s extensible plugin system supports everything from notifications to custom operators—keeping your AI app’s backend humming.
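The DAG idea itself fits in a few lines of standard-library Python. The sketch below is not Airflow code: it uses `graphlib.TopologicalSorter` to run hypothetical `ingest`, `train`, `evaluate`, and `deploy` steps in dependency order, which is the ordering guarantee a scheduler like Airflow provides (alongside retries, scheduling, and monitoring).

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set

log: List[str] = []

# Hypothetical pipeline steps; each just records that it ran.
tasks: Dict[str, Callable[[], None]] = {
    "ingest": lambda: log.append("ingest"),
    "train": lambda: log.append("train"),
    "evaluate": lambda: log.append("evaluate"),
    "deploy": lambda: log.append("deploy"),
}

# Each task maps to the set of tasks that must finish before it starts.
dependencies: Dict[str, Set[str]] = {
    "ingest": set(),
    "train": {"ingest"},
    "evaluate": {"train"},
    "deploy": {"evaluate"},
}


def run_pipeline() -> List[str]:
    """Run every task after its upstream dependencies and return the order."""
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        tasks[name]()
    return order


print(run_pipeline())
```

In Airflow the same dependencies would be declared between operators inside a DAG definition, and the scheduler decides when each task actually runs.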

Key Parameters:

Best For

Data pipelines, ML workflow orchestration, batch processing

7.2: SageMaker (AWS)

AWS SageMaker is the enterprise workhorse for end-to-end AI lifecycle management. It covers model training, deployment, monitoring, and auto-scaling—plus built-in tools for experiment tracking and drift detection.

SageMaker’s managed infrastructure means you can go from notebook to production with minimal ops overhead, making it a favourite for startups and Fortune 500s alike.

Key Parameters:

Cost-watch tip: attach AWS Budgets and a CloudWatch alarm (e.g., 20% over forecast) so long-running training jobs don’t surprise you at month-end.

Best For

Enterprise ML deployment, AutoML, managed infrastructure

How to Choose the Right AI App Development Tools

Wrapping up

You've got the blueprint for dominating AI app creation – now put those tools for building AI apps into action. Imagine crafting apps that wow users and scale effortlessly, backed by smart machine learning tricks and seamless integrations. Stats show pros using optimised AI app development tools cut costs by 30% and speed up launches.

Ready to build your next AI app? With the tools across these 7 categories, you’ll be shipping smarter, faster, and with more confidence than ever.