Artificial intelligence is touted as a game-changing force, with companies globally pouring billions into AI initiatives. Tech giants are set for an unprecedented $320 billion AI infrastructure spending spree in 2026 alone. Yet a stark reality lurks behind the hype: the AI project failure rate has reached catastrophic levels.

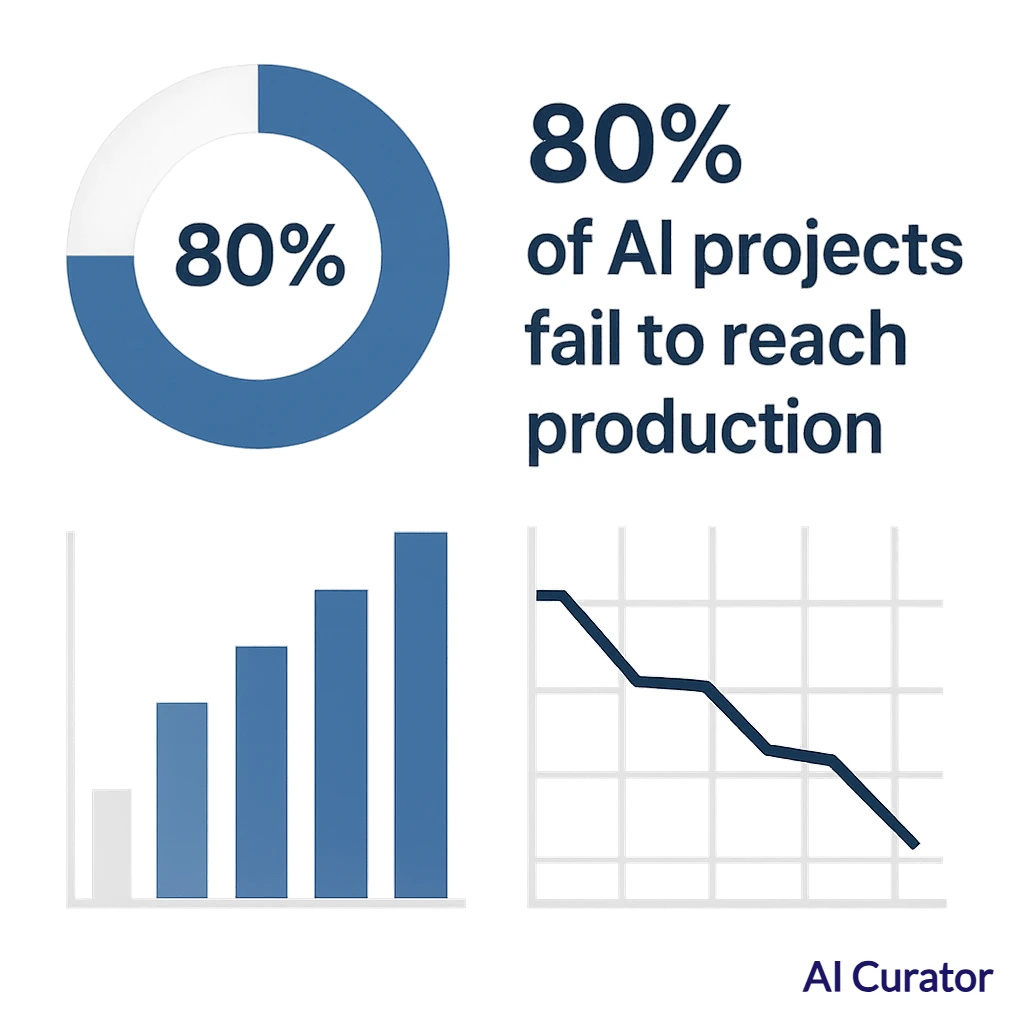

Over 80% of all AI projects fail to reach production, a rate twice that of standard IT projects. This execution gap represents not just wasted resources but a significant roadblock to realizing AI's transformative potential in today's competitive landscape.

📊 The Sobering Statistics of the AI Chasm

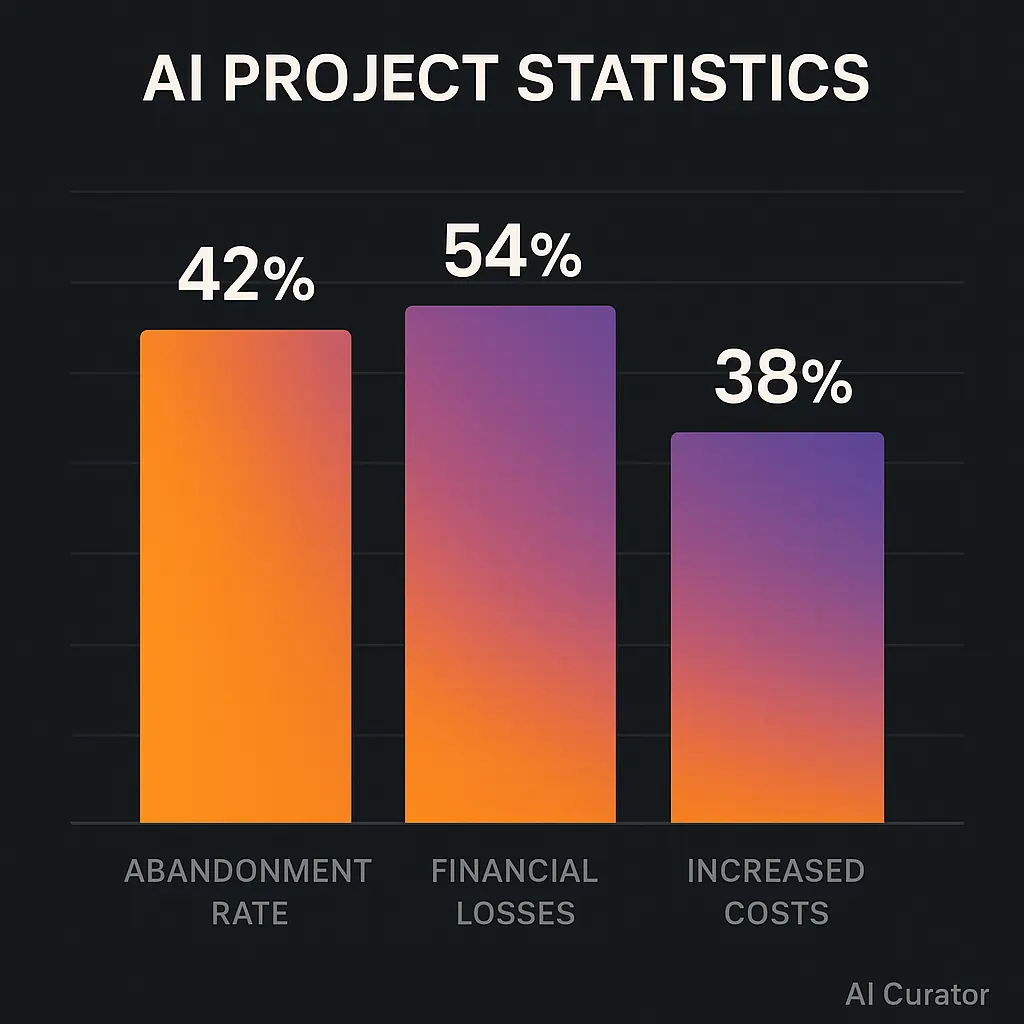

The numbers paint a grim picture of the current state of AI implementation. According to S&P Global Market Intelligence, the proportion of companies abandoning the majority of their AI initiatives will soar above 42% in 2026, a dramatic increase from 17% the previous year. This signals a growing struggle, even as investments climb.

Further research highlights the financial fallout. A survey revealed that 54% of senior executives at major firms have reported financial losses stemming from inadequate governance of their machine learning applications. Fivetran's research adds another layer, showing that 38% of enterprises point to increased operational costs as a direct result of AI project failures.

The RAND Corporation puts the overall failure rate for AI projects at over 80%, a figure that has become an industry benchmark for the challenges at hand. Even with the rise of generative AI, Gartner predicts that 30% of these projects will be abandoned by 2026 due to a mix of technical complexity and ethical hurdles.

🤖 Why Do So Many AI Ventures Fall Short?

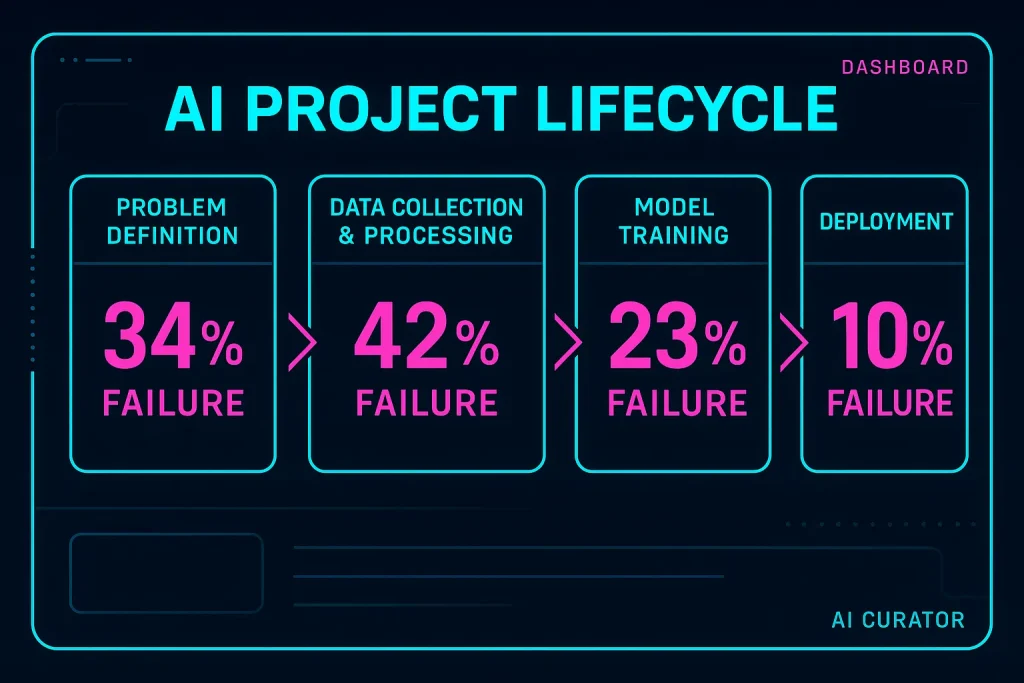

The reasons for this widespread failure are multifaceted, touching on everything from foundational data issues to flawed strategic thinking. Understanding these pitfalls is the first step toward bridging the gap.

🗂️ The Data Dilemma: Garbage In, Garbage Out

At the heart of most AI project failures lies a data problem. AI models are only as good as the data they are trained on, and many organizations are discovering their data is not up to the task. A staggering 88% of organizations are implementing AI, but 54% are concerned about the quality and reliability of the data they're using.

🔄 Misaligned Strategies and Unclear Objectives

Too often, AI projects are launched as technological experiments rather than solutions to specific business problems. This lack of clear purpose is a primary driver of failure. When an initiative is not anchored to a measurable business outcome or a clear ROI, it becomes difficult to justify continued investment, leading to its eventual cancellation.

This issue is compounded by a tendency to overpromise what AI can achieve without setting realistic success criteria. Executives may be enthusiastic, but without defined objectives, teams are left directionless, and projects stall in “pilot mode paralysis”. An effective AI strategy requires identifying a clear problem first and then determining if AI is the right tool to solve it.
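
One way to make "anchored to a measurable business outcome" concrete is a simple ROI gate applied before a project is approved. The sketch below is illustrative only: the function names, the 25% hurdle rate, and the example figures are assumptions, not numbers from any of the reports cited here.

```python
def simple_roi(expected_annual_benefit: float, total_annual_cost: float) -> float:
    """Return ROI as a fraction: (benefit - cost) / cost."""
    if total_annual_cost <= 0:
        raise ValueError("total_annual_cost must be positive")
    return (expected_annual_benefit - total_annual_cost) / total_annual_cost


def clears_hurdle(
    expected_annual_benefit: float,
    total_annual_cost: float,
    hurdle_rate: float = 0.25,  # assumed threshold; set this per organization
) -> bool:
    """Approve only when the projected ROI meets or exceeds the hurdle rate."""
    return simple_roi(expected_annual_benefit, total_annual_cost) >= hurdle_rate


# Hypothetical project: 150,000 in expected benefit against 100,000 in cost
# gives an ROI of 0.5, which clears a 0.25 hurdle.
approved = clears_hurdle(150_000, 100_000)
```

A gate like this does not make the benefit estimate any more reliable, but it forces the estimate to be written down before the pilot starts.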

👥 The Human Element: People, Skills, and Culture

Technology alone cannot guarantee success. The AI execution gap is also a human problem. A successful AI deployment needs a blend of technical skills—like data engineering and machine learning—and deep domain expertise from within the organisation. This combination is rare, and many companies lack the necessary in-house talent.

Furthermore, AI adoption is a significant change management challenge. When leadership pushes for AI from the top down without equipping employees with the right tools or training, momentum quickly dies.

As one human capital strategist noted, the very definition of adoption is getting people to work in a different way, yet the “people” aspect is often the most overlooked link in the chain.

🚧 Technical and Infrastructural Hurdles

The leap from a successful proof-of-concept (PoC) to a fully scaled production system is where many projects falter. A Reddit user aptly described this challenge, noting that a PoC is simple to build, “but then you realize that the last 10% will take 600% of the time and money”. The technical complexity of deploying and maintaining AI models in a live environment is frequently underestimated.

Issues such as algorithm complexity, scalability, and the high costs of running sophisticated models present significant barriers. Moreover, many current AI models based on next-token prediction have inherent limitations in tasks that require long-term planning and reasoning, which can lead to compounding errors and project failure.

These technical difficulties are why, on average, organizations scrap 46% of their AI PoCs before they ever reach production.

Lessons From the Trenches

Beyond the statistics, real-world experience offers invaluable lessons. A retrospective on dataset curation from a developer on Hugging Face highlights how critical infrastructure is. An early failure, which resulted in the loss of weeks of manually labelled data, taught them to treat annotation projects with the same seriousness as production systems, complete with automated backups and version control.
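
As a rough illustration of what "automated backups and version control" can mean for an annotation file, here is a minimal Python sketch using only the standard library. It is not the workflow from the retrospective: the `snapshot` function, the file names, and the content-hash naming scheme are all assumptions made for this example.

```python
import hashlib
import shutil
from pathlib import Path


def snapshot(annotation_file: Path, backup_dir: Path) -> Path:
    """Copy the file into backup_dir under a name derived from its content hash.

    Each distinct version of the file gets its own copy, and unchanged
    content is never stored twice.
    """
    digest = hashlib.sha256(annotation_file.read_bytes()).hexdigest()[:12]
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / f"{annotation_file.stem}-{digest}{annotation_file.suffix}"
    if not target.exists():
        shutil.copy2(annotation_file, target)  # copy2 also preserves timestamps
    return target


# Hypothetical usage: call after every labelling session or from a scheduled job.
# snapshot(Path("labels.jsonl"), Path("backups"))
```

For larger projects a dedicated data versioning tool or a plain Git repository would be the more usual choice; the point is only that the snapshot step runs automatically rather than relying on memory.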

Discussions on forums like Reddit echo these technical concerns, with users pointing to the difficulty of getting models to meet business needs and the sheer effort required to bridge the gap between a promising demo and a production-grade system. These firsthand accounts underscore the need for meticulous planning, robust infrastructure, and a deep understanding of the problem domain.

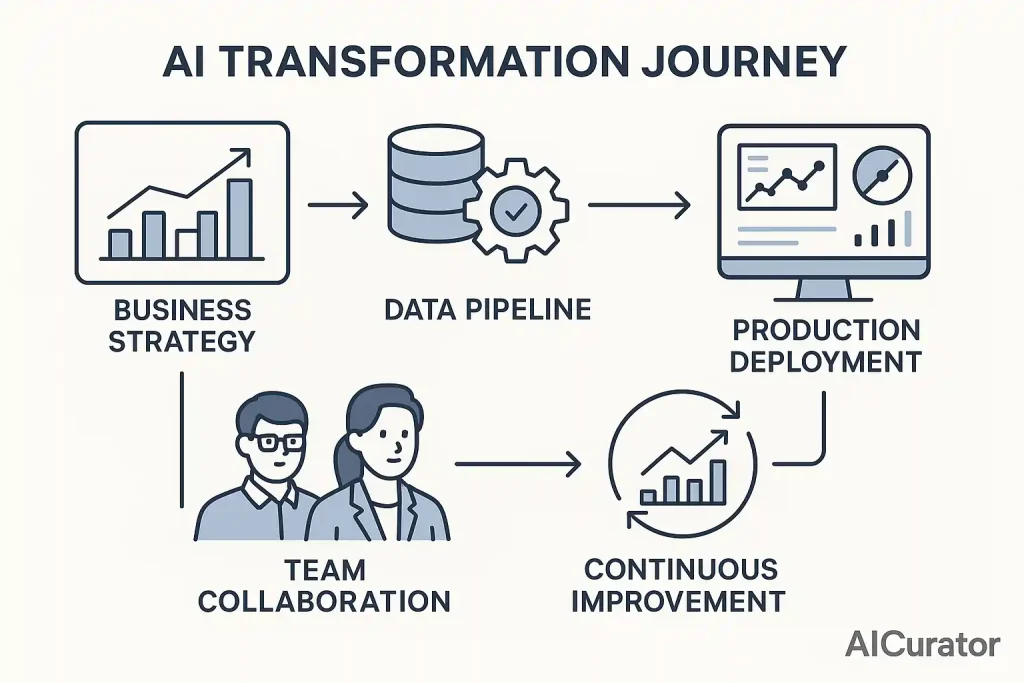

From Ambition to Impact: A Pragmatic Playbook

- Start with the business tension. Define the KPI a model must move, quantify potential ROI, and green-light only projects surpassing a hurdle rate.

- Institute data contracts and lineage tooling. Hold upstream systems accountable for schema changes; automate quality checks before model retraining.

- Design for production at PoC time. Establish CI/CD pipelines for models, set SLOs, and budget for monitoring and retraining.

- Upskill and embed. Pair data scientists with domain SMEs; run change-management sprints so operators trust model outputs.

- Measure and iterate. Track post-deployment drift, usability, and business outcomes; sunset models that stop adding value.
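
Two of the playbook items, automated quality checks before retraining and drift tracking after deployment, can be sketched in a few lines of Python. Everything named here is hypothetical: the `CONTRACT` columns, the function names, and the bin count are assumptions chosen for illustration. The drift measure is the population stability index (PSI), one common choice among several.

```python
import math
from typing import Any

# Hypothetical data contract: column name -> (expected type, nullable?)
CONTRACT: dict[str, tuple[type, bool]] = {
    "customer_id": (int, False),
    "monthly_spend": (float, False),
    "segment": (str, True),
}


def contract_violations(rows: list[dict[str, Any]], contract=CONTRACT) -> list[str]:
    """Return human-readable contract violations; an empty list means the batch passes."""
    problems = []
    for i, row in enumerate(rows):
        for col in sorted(contract.keys() - row.keys()):
            problems.append(f"row {i}: missing column {col!r}")
        for col in sorted(row.keys() - contract.keys()):
            problems.append(f"row {i}: unexpected column {col!r}")
        for col, (expected_type, nullable) in contract.items():
            if col not in row:
                continue
            value = row[col]
            if value is None:
                if not nullable:
                    problems.append(f"row {i}: {col!r} must not be null")
            elif not isinstance(value, expected_type):
                problems.append(f"row {i}: {col!r} should be {expected_type.__name__}")
    return problems


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population stability index between a baseline sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

In a retraining pipeline, a non-empty result from `contract_violations` would block the run. A PSI of zero means the two samples fall into the bins identically. A commonly cited rule of thumb treats values above roughly 0.2 as a shift worth investigating, though the right threshold depends on the feature and should be treated as an assumption.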

Conclusion

The 80% failure statistic is not an indictment of AI technology but of organisational readiness and strategic clarity. By grounding projects in measurable objectives, investing in data quality, and prioritising human adoption, enterprises can bridge the chasm between intent and impact and finally translate AI ambition into sustainable advantage.