Ever notice how most AI assistants can chat fluently but freeze when you ask for live answers or real-world actions? You’re not alone: many users say their AI tools fall short when it comes to up-to-date information or calculations.

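But what if your AI could search the web for current facts, run calculations, and draw on a curated knowledge base, all on demand?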

Welcome to the next level: the Multi-Tool AI Agent. With Nebius, Llama 3, and LangChain, you’ll create an assistant that doesn’t just talk—it acts.

Ready to build an AI that’s more than just a chatbot? Let’s get started!

What Makes a Multi-Tool AI Agent Different?

Before we jump into the code, let's get one thing straight. A multi-tool AI agent is not your average chatbot. While a standard AI agent might rely on a single Large Language Model (LLM) to answer questions based on its training data, a multi-tool or agentic AI is more like a project manager with a team of specialists.

It uses a central LLM as a “reasoning engine” to understand a user's request, break it down into smaller steps, and decide which “tool” is best for each step. These tools can be anything: a web search API, a calculator, a code interpreter, or a custom database retriever.

This approach moves beyond simple Retrieval-Augmented Generation (RAG), where an AI just fetches information, into the realm of real-time reasoning and action.
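To make the “project manager” idea concrete, here is a toy dispatcher in which a hard-coded keyword rule stands in for the LLM's reasoning. The tool functions, the `choose_tool` rule and the hand-written plan are all hypothetical; in a real agent the LLM would break the request into steps and pick the tools itself.

```python
import operator
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-in tools; real ones would call Wikipedia, a calculator, etc.
def search(query: str) -> str:
    return f"search results for {query!r}"

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}

def calculator(expression: str) -> str:
    # Handles only "<number> <op> <number>" to keep the sketch short.
    left, op, right = expression.split()
    return str(OPS[op](float(left), float(right)))

TOOLS: Dict[str, Callable[[str], str]] = {"search": search, "calculator": calculator}

def choose_tool(request: str) -> Tuple[str, str]:
    """Stand-in for the LLM's reasoning step: pick a tool name and its input."""
    if request.lower().startswith("calculate "):
        return "calculator", request[len("calculate "):]
    return "search", request

def run_plan(steps: List[str]) -> List[str]:
    """Route each sub-task to a tool; in a real agent the LLM also writes the steps."""
    results = []
    for step in steps:
        name, tool_input = choose_tool(step)
        results.append(f"{name}: {TOOLS[name](tool_input)}")
    return results

plan = ["Llama 3.3 release date", "calculate 20 * 1.25"]
for line in run_plan(plan):
    print(line)
# search: search results for 'Llama 3.3 release date'
# calculator: 25.0
```

The registry-plus-router shape is the point here: adding a capability means adding one entry to `TOOLS` and teaching the decision step when to use it.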

Here’s a quick look at the difference:

| Aspect | Standard AI Agent | Agentic AI (Multi-Tool) |
|---|---|---|
| Core Engine | Single LLM | Multiple LLMs, potentially diverse |
| Coordination | Isolated task execution | Hierarchical or decentralised coordination |
| Memory Usage | Optional memory or tool cache | Shared episodic/task memory |
| Learning Capability | Limited adaptation | Continuous learning from outcomes |
| Workflow Handling | Single task execution | Multi-step workflow coordination |
| Decision Making | Basic tool usage decisions | Goal decomposition and assignment |

By orchestrating multiple tools, your agent becomes dynamic, resourceful, and capable of tackling complex, multi-step problems that a standalone LLM would struggle with.

The Tech Stack: Your Building Blocks

To construct our agent, we need a solid foundation. Here are the key components we'll be using.

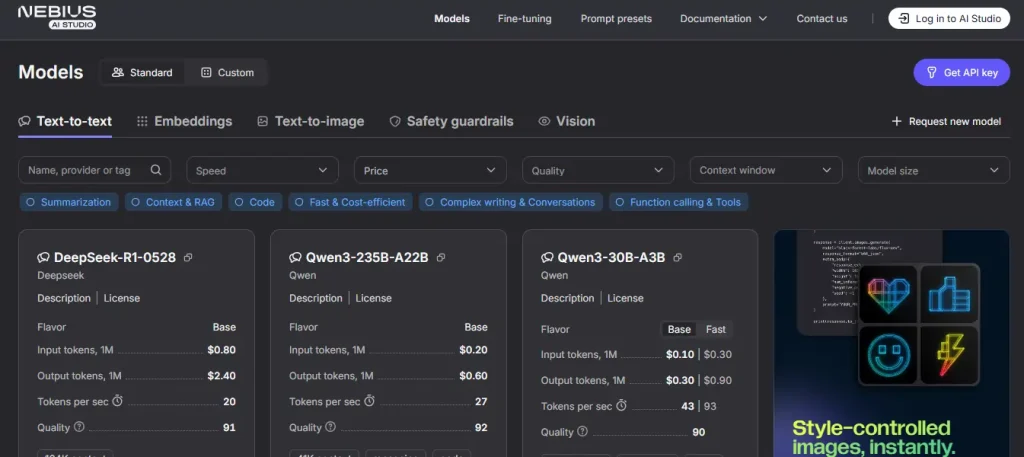

1. Nebius

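Nebius provides the core infrastructure for our agent. We'll specifically use its hosted Llama 3 model through ChatNebius, its embedding model through NebiusEmbeddings, and NebiusRetriever to search our own documents by semantic similarity.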

2. Llama 3 Model

We're using the meta-llama/Llama-3.3-70B-Instruct-fast model. This state-of-the-art model is optimised for instruction following, making it perfect for an agent that needs to understand commands and decide when to use its tools.

While this model is a great generalist, the Llama 3 family also includes specialised fine-tunes such as Llama-3-Groq-70B-Tool-Use, which reportedly achieves over 90% accuracy on function-calling benchmarks, showing how fine-tuned models can excel at tool integration.

3. LangChain Framework

LangChain is the glue that holds our entire system together. It's a Python library designed to create complex, stateful AI workflows. Its modular components allow us to easily chain together the LLM, retriever, and tools into a cohesive application.

For even more complex interactions, its sibling library, LangGraph, can be used to build cyclical graphs, enabling agents to loop and refine their actions until a task is complete.
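LangChain Expression Language (LCEL) builds chains with the `|` operator: each component's output becomes the next component's input, and a plain dict of functions is turned into a step that runs each function on the same input. The toy classes below are not LangChain's implementation, just a few lines of plain Python that mimic that composition so the idea is visible; `Step`, `fan_out` and the fake LLM are hypothetical names.

```python
from typing import Any, Callable, Dict

class Step:
    """Toy stand-in for a LangChain Runnable: wraps one function and supports `|`."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # a | b builds a new step that feeds a's output into b
        return Step(lambda x: other.fn(self.fn(x)))

    def invoke(self, x: Any) -> Any:
        return self.fn(x)

def fan_out(mapping: Dict[str, Callable[[Any], Any]]) -> Step:
    # Rough analogue of LCEL turning a dict into a parallel step:
    # every function receives the same input and results are collected by key.
    return Step(lambda x: {key: fn(x) for key, fn in mapping.items()})

inputs = fan_out({"query": lambda q: q, "current_date": lambda q: "2024-05-01"})
prompt = Step(lambda d: f"Date: {d['current_date']}\nUser Query: {d['query']}")
fake_llm = Step(lambda text: {"content": f"Echo -> {text}"})  # pretend model call
parser = Step(lambda message: message["content"])             # like StrOutputParser

chain = inputs | prompt | fake_llm | parser
print(chain.invoke("What is RAG?"))
```

Because every piece exposes the same interface, swapping the fake LLM for a real one would not change the shape of the chain, which is the property the real LCEL chain in Step 3 relies on.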

4. Real-Time Reasoning Tools

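To make our agent truly useful, we need to give it abilities that extend beyond its pre-trained knowledge. In this tutorial, we will build two essential tools: a Wikipedia search tool that fetches current information, and a calculator that evaluates arithmetic expressions.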

Building Your Multi-Tool AI Agent: A Step-by-Step Guide

Alright, let's get our hands dirty and build this thing. Follow along with the code snippets to create your own multi-tool AI agent.

Step 1: Setting Up the Environment

First, we need to install the necessary Python libraries. These include the LangChain packages for Nebius and core functionalities, along with the Wikipedia library for our search tool.

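```
!pip install -q langchain-nebius langchain-core langchain-community wikipedia
```

The leading `!` runs the command from a Jupyter or Colab cell; drop it when installing from a regular terminal.

Next, we import the required modules and securely get your Nebius API key. If the key isn't set as an environment variable, the script will prompt you to enter it.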

```python
import os
import getpass
import wikipedia
from datetime import datetime
from typing import List, Dict, Any

from langchain_core.documents import Document
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough
from langchain_core.tools import tool
from langchain_nebius import ChatNebius, NebiusEmbeddings, NebiusRetriever

# Prompt for the API key only if it isn't already set in the environment
if "NEBIUS_API_KEY" not in os.environ:
    os.environ["NEBIUS_API_KEY"] = getpass.getpass("Enter your Nebius API key: ")
```

Step 2: Designing the AdvancedNebiusAgent Class

We'll encapsulate all our logic within a class called AdvancedNebiusAgent. This keeps our code organised and easy to manage.

Initialising the Agent (`__init__`)

The constructor sets up all the core components. We initialise the ChatNebius LLM, NebiusEmbeddings, and a NebiusRetriever. Crucially, we also define a ChatPromptTemplate.

This template structures the input we send to the LLM, providing it with context from our knowledge base, results from any tools used, the current date, and the user's query. This detailed prompt guides the LLM to generate a well-reasoned and context-aware response.
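`ChatPromptTemplate.from_template` uses Python's f-string-style `{placeholder}` syntax by default, so plain `str.format` gives a rough preview of the text the model receives (the real template wraps it in a chat message). The template below is a trimmed copy of the agent's, and the sample values are made up.

```python
# A trimmed copy of the agent's template; the sample values are made up.
TEMPLATE = """Context from knowledge base:
{context}

External tool results:
{tool_results}

Current date: {current_date}

User Query: {query}

Response:
"""

filled = TEMPLATE.format(
    context="[AI] Artificial Intelligence (AI) is transforming industries...",
    tool_results="No external tools used",
    current_date="2024-05-01",
    query="What is artificial intelligence?",
)
print(filled)

# Literal braces (e.g. a JSON example inside the prompt) must be doubled,
# otherwise they are parsed as placeholders.
escaped = "Reply as {{\"answer\": ...}} for {query}".format(query="x")
print(escaped)  # Reply as {"answer": ...} for x
```

The brace rule is worth remembering if you later extend the instructions with a JSON output format.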

```python
class AdvancedNebiusAgent:
    """Advanced AI Agent with retrieval, reasoning, and external tool capabilities"""

    def __init__(self):
        # Llama 3.3 70B served by Nebius acts as the reasoning engine
        self.llm = ChatNebius(model="meta-llama/Llama-3.3-70B-Instruct-fast")
        self.embeddings = NebiusEmbeddings()
        # In-memory knowledge base plus a retriever that returns the top 3 matches
        self.knowledge_base = self._create_knowledge_base()
        self.retriever = NebiusRetriever(
            embeddings=self.embeddings,
            docs=self.knowledge_base,
            k=3,
        )
        # Prompt combining retrieved context, tool output, the date, and the query
        self.agent_prompt = ChatPromptTemplate.from_template("""You are an advanced AI assistant with access to:
- A knowledge base about technology and science
- Wikipedia search capabilities
- Mathematical calculation tools
- Current date/time information

Context from knowledge base:
{context}

External tool results:
{tool_results}

Current date: {current_date}

User Query: {query}

Instructions:
- Use the knowledge base context when relevant
- If you need additional information, mention what external sources would help
- Be comprehensive but concise
- Show your reasoning process
- If calculations are needed, break them down step by step

Response:
""")
```

Creating a Custom Knowledge Base (_create_knowledge_base)

For this tutorial, we create a small, in-memory knowledge base using LangChain's Document objects. Each document contains a piece of text and associated metadata.

This knowledge base provides the agent with specific, curated information on topics like AI, quantum computing, and climate change, which it can retrieve to answer relevant questions.
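Conceptually, an embedding-based retriever such as NebiusRetriever turns the query and every document into vectors and returns the k closest matches (k=3 in our agent). The toy version below swaps Nebius's dense embeddings for simple word counts and cosine similarity, purely to show the ranking logic; `embed`, `cosine` and `retrieve` are illustrative names, and the third sample document is made up.

```python
import math
import re
from collections import Counter
from typing import List

def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (real embedding models return dense vectors)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse word-count vectors
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: List[str], k: int = 3) -> List[str]:
    """Return the k documents most similar to the query, best match first."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

docs = [
    "Artificial Intelligence (AI) is transforming industries...",
    "Quantum computing uses quantum mechanical phenomena...",
    "Climate change is driven by rising greenhouse gas emissions.",  # made-up sample
]
print(retrieve("How is quantum computing different?", docs, k=1))
```

Word counts only match exact terms, which is exactly why the real agent uses a learned embedding model: it can also match paraphrases such as “qubits” and “quantum computers”.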

```python
    # Inside class AdvancedNebiusAgent
    def _create_knowledge_base(self) -> List[Document]:
        """Create a comprehensive knowledge base"""
        return [
            Document(page_content="Artificial Intelligence (AI) is transforming industries...", metadata={"topic": "AI"}),
            Document(page_content="Quantum computing uses quantum mechanical phenomena...", metadata={"topic": "quantum_computing"}),
            # ... more documents on various topics
        ]
```

Defining the External Tools

Here comes the fun part: giving our agent superpowers. We use the @tool decorator from LangChain to easily define functions as tools that the agent can call.

The wikipedia_search tool takes a query, searches Wikipedia, and returns a three-sentence summary along with the page title and URL. The calculate tool checks that an expression contains only digits, arithmetic operators, and parentheses before evaluating it, which keeps arbitrary Python code away from eval.

```python
@tool
def wikipedia_search(query: str) -> str:
    """Search Wikipedia for additional information"""
    try:
        search_results = wikipedia.search(query, results=1)
        if not search_results:
            return f"No Wikipedia results found for '{query}'"
        # Disable auto-suggest so both calls resolve the same page title
        page = wikipedia.page(search_results[0], auto_suggest=False)
        summary = wikipedia.summary(search_results[0], sentences=3, auto_suggest=False)
        return f"Wikipedia: {page.title}\n{summary}\nURL: {page.url}"
    except Exception as e:
        return f"Wikipedia search error: {str(e)}"


@tool
def calculate(expression: str) -> str:
    """Perform mathematical calculations safely"""
    try:
        # Allow only digits, arithmetic operators, decimal points, parentheses and spaces
        allowed_chars = set('0123456789+-*/.() ')
        if not all(c in allowed_chars for c in expression):
            return "Error: Only basic mathematical operations allowed"
        # '**' passes the character check, but huge powers can hang the process
        if "**" in expression:
            return "Error: Exponentiation is not allowed"
        result = eval(expression)
        return f"Calculation: {expression} = {result}"
    except Exception as e:
        return f"Calculation error: {str(e)}"
```
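Checking characters and then calling eval works, but it is easy to get subtly wrong: the whitelist alone still admits `**`, so an input like `9**9**9` can tie up the process for a very long time. A sturdier alternative parses the expression with Python's `ast` module and evaluates only whitelisted node types. The `safe_eval` name and the choice to support only `+ - * /` and unary signs are our own assumptions, not the article's.

```python
import ast
import operator
from typing import Union

Number = Union[int, float]

# Whitelisted operators: basic arithmetic only (no ** to avoid huge exponentiations)
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}

def safe_eval(expression: str) -> Number:
    """Evaluate +, -, *, / and parentheses on numeric literals; reject everything else."""
    def _eval(node: ast.AST) -> Number:
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        # type() check excludes bool, which is a subclass of int
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"Unsupported expression: {ast.dump(node)}")

    return _eval(ast.parse(expression, mode="eval"))

print(safe_eval("20 * 1.25"))     # 25.0
print(safe_eval("(2 + 3) * -4"))  # -20
```

To use it, replace `result = eval(expression)` in the calculate tool with `result = safe_eval(expression)`; the tool's existing `except` clause turns a `ValueError` (or a `ZeroDivisionError`) into an error string.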

Step 3: Orchestrating the AI Workflow

With our components ready, we need to define the process for handling a user's query.

Processing the Query (process_query)

This method is the heart of the agent. It takes a user's query and orchestrates the entire response generation process. It first uses the retriever to find relevant documents from the knowledge base. Then it runs the Wikipedia or calculator tool if the caller requested it through the use_wikipedia or calculate_expr argument.

Finally, it constructs a LangChain Expression Language (LCEL) chain. This chain pipes together the context, tool results, and query into our prompt template, sends it to the LLM, and parses the output into a string. This declarative style makes the workflow easy to read and modify.
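The process_query method below calls three helpers, `_format_docs`, `_extract_keywords` and `_get_current_date`, that the article never defines. Here is one hypothetical way to write them; the topic-tagged formatting, the stopword list and the date format are our own assumptions. The documents are duck-typed (anything with `page_content` and `metadata`, such as LangChain's `Document`), so the sketch runs without LangChain installed.

```python
from datetime import datetime
from types import SimpleNamespace
from typing import Any, List

class AgentHelpersSketch:
    """Hypothetical versions of the helpers process_query calls; not from the original article."""

    _STOPWORDS = {"a", "an", "and", "are", "how", "in", "is", "of", "on", "the", "what", "wiki"}

    def _format_docs(self, docs: List[Any]) -> str:
        # Join retrieved documents into one context string, tagged by topic
        return "\n\n".join(
            f"[{doc.metadata.get('topic', 'unknown')}] {doc.page_content}" for doc in docs
        )

    def _extract_keywords(self, query: str) -> str:
        # Naive keyword extraction: strip punctuation and drop common stopwords
        words = [w.strip("?.,!:").lower() for w in query.split()]
        return " ".join(w for w in words if w and w not in self._STOPWORDS)

    def _get_current_date(self) -> str:
        return datetime.now().strftime("%Y-%m-%d")

helpers = AgentHelpersSketch()
doc = SimpleNamespace(page_content="Quantum computing uses qubits.", metadata={"topic": "quantum_computing"})
print(helpers._format_docs([doc]))  # [quantum_computing] Quantum computing uses qubits.
print(helpers._extract_keywords("What are the latest developments in space exploration?"))
# latest developments space exploration
```

Pasting these methods into AdvancedNebiusAgent, or letting the class inherit from a mixin like this one, would fill the gap.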

```python
    # Inside class AdvancedNebiusAgent
    def process_query(self, query: str, use_wikipedia: bool = False, calculate_expr: str = None) -> str:
        """Process a user query with optional external tools"""
        # 1. Retrieve the most relevant documents from the knowledge base
        relevant_docs = self.retriever.invoke(query)
        context = self._format_docs(relevant_docs)

        # 2. Run whichever external tools the caller requested
        tool_results = []
        if use_wikipedia:
            wiki_keywords = self._extract_keywords(query)
            if wiki_keywords:
                wiki_result = wikipedia_search.invoke(wiki_keywords)
                tool_results.append(f"Wikipedia Search: {wiki_result}")
        if calculate_expr:
            # calculate is the module-level @tool, not a method, so call it via .invoke()
            calc_result = calculate.invoke(calculate_expr)
            tool_results.append(f"Calculation: {calc_result}")
        tool_results_str = "\n".join(tool_results) if tool_results else "No external tools used"

        # 3. LCEL chain: fill the prompt, call the LLM, parse the reply into a string
        chain = (
            {
                "context": lambda x: context,
                "tool_results": lambda x: tool_results_str,
                "current_date": lambda x: self._get_current_date(),
                "query": RunnablePassthrough(),
            }
            | self.agent_prompt
            | self.llm
            | StrOutputParser()
        )
        return chain.invoke(query)
```

Step 4: Creating an Interactive Session

To make the agent usable, we create a simple interactive command-line session. This loop waits for user input and can parse special commands like wiki: and calc: to trigger the corresponding tools. This provides a hands-on way to test and interact with our newly built AI assistant.
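The prefix handling in the loop below can also be pulled out into a small pure function, which makes it testable without an API key. `parse_command` is a hypothetical helper, not part of the original code; it returns the query text plus the two arguments that process_query expects, and it strips the `wiki:` prefix so the keyword extractor never sees it.

```python
from typing import Optional, Tuple

def parse_command(user_input: str) -> Tuple[str, bool, Optional[str]]:
    """Split raw input into (query, use_wikipedia, calculate_expr)."""
    text = user_input.strip()
    lowered = text.lower()
    if lowered.startswith("wiki:"):
        # Wikipedia mode: drop the prefix, keep the question itself
        return text[len("wiki:"):].strip(), True, None
    if lowered.startswith("calc:"):
        # Calculator mode: the rest of the line is the expression
        expr = text[len("calc:"):].strip()
        return f"Calculate: {expr}", False, expr
    return text, False, None

print(parse_command("wiki: Mars rover"))  # ('Mars rover', True, None)
print(parse_command("calc: 20 * 1.25"))   # ('Calculate: 20 * 1.25', False, '20 * 1.25')
```

Inside the loop, `query, use_wiki, calc_expr = parse_command(user_input)` would then feed `self.process_query(query, use_wikipedia=use_wiki, calculate_expr=calc_expr)`.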

```python
    # Inside class AdvancedNebiusAgent
    def interactive_session(self):
        """Run an interactive session with the agent"""
        print("🤖 Advanced Nebius AI Agent Ready!")
        print("Features: Knowledge retrieval, Wikipedia search, calculations")
        print("Commands: 'wiki:' for Wikipedia, 'calc:' for math")
        print("Type 'quit' to exit\n")

        while True:
            user_input = input("You: ").strip()
            if user_input.lower() == 'quit':
                print("Goodbye!")
                break

            # 'wiki:' switches on Wikipedia search; strip the prefix from the query
            use_wiki = user_input.lower().startswith("wiki:")
            if use_wiki:
                user_input = user_input[5:].strip()

            # 'calc:' passes the rest of the line to the calculator tool
            calc_expr = None
            if user_input.lower().startswith("calc:"):
                calc_expr = user_input[5:].strip()
                user_input = f"Calculate: {calc_expr}"

            try:
                response = self.process_query(user_input, use_wikipedia=use_wiki, calculate_expr=calc_expr)
                print(f"\n🤖 Agent: {response}\n")
            except Exception as e:
                print(f"Error: {e}\n")
```

Putting Your Agent to the Test

Now, let's see our agent in action. The provided example code includes a demo that runs several queries to showcase the agent's different abilities.

- Knowledge Base Query: Asking “What is artificial intelligence and how is it being used?” will trigger the retriever to pull context from our custom knowledge base.

- Wikipedia-Enhanced Query: A query like “What are the latest developments in space exploration?” with `use_wikipedia=True` will make the agent call the Wikipedia tool for real-time information.

- Calculation Query: A request to calculate a 25% improvement on a 20% efficiency, along with `calculate_expr="20 * 1.25"`, will demonstrate the calculator tool.
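The calculation demo reads “25% improvement” as a relative increase, which is why the expression multiplies by 1.25:

$$20\% \times (1 + 0.25) = 20\% \times 1.25 = 25\%$$

If the improvement were meant as 25 percentage points instead, the expression would be `20 + 25`, giving 45%, so it is worth stating which reading you intend when you pass `calculate_expr`.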

These demos clearly illustrate how the agent dynamically combines its internal knowledge with external tools to provide comprehensive and accurate answers.

Conclusion

You've now seen how to build a powerful, multi-tool AI agent from the ground up. By combining the robust infrastructure of Nebius, the advanced reasoning of Llama 3, and the flexible orchestration of LangChain, we've created an assistant that goes far beyond static responses.

It can reason, retrieve curated knowledge, access live information from the web, and perform calculations—all within a single, coherent workflow.

This tutorial provides a strong blueprint for developing even more sophisticated AI systems. You can extend this agent by adding more tools, connecting to databases, or using a framework like LangGraph to build more complex, looping workflows.

The era of intelligent, action-oriented AI agents is here, and with these tools, you have everything you need to start building them.