The explosion in the popularity of artificial intelligence has created a gold rush for productivity-boosting tools. Everyone wants a piece of the AI pie. But this frenzy has a dark side. Cybercriminals, always quick to exploit a trend, are flooding the internet with bogus AI software.

These convincing fakes promise innovative features but deliver a nasty payload of malware, turning user curiosity into a security nightmare.

The New-Age Heist: How AI Malware Scams Operate

The strategy behind these scams is deceptively simple yet effective. Cybercriminals leverage the hype surrounding AI to lure unsuspecting victims. They create advertisements on social media platforms, sometimes using compromised or verified accounts to appear legitimate. These ads promote seemingly revolutionary AI tools for video generation, image editing, or content creation.

When a user clicks on an ad, they are often directed to a professionally designed website that mimics a real AI service. Many of these fake platforms even have a functional front-end, allowing users to enter prompts or upload files, creating a false sense of security.

The trap is sprung at the final step. Instead of receiving AI-generated content, the user is prompted to download a file containing the malware. The file is disguised to look harmless: for example, an archive named “VideoDreamAI.zip” may contain an executable called “Video Dream MachineAI.mp4.exe”. Because Windows hides known file extensions by default, that executable can appear to be an ordinary .mp4 video.
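Because this disguise relies on a double extension, it is simple to check for mechanically. Below is a minimal Python sketch, not a complete defence. The extension lists are hypothetical and deliberately short, and `is_disguised_executable` and `suspicious_members` are illustrative names, not part of any existing tool. The sketch flags names whose last extension is executable and whose second-to-last looks like a media or document type. It can also list suspicious member names inside a downloaded ZIP archive without extracting anything.

```python
import zipfile
from pathlib import PurePath

# Hypothetical, deliberately short lists for illustration; a real filter would need more.
MEDIA_OR_DOCUMENT_EXTS = {".mp4", ".mov", ".avi", ".mp3", ".jpg", ".png", ".pdf", ".docx", ".txt"}
EXECUTABLE_EXTS = {".exe", ".scr", ".com", ".bat", ".cmd", ".msi", ".lnk", ".js", ".vbs"}


def is_disguised_executable(filename: str) -> bool:
    """True if the name ends in an executable extension that is preceded
    by a media or document extension, e.g. 'clip.mp4.exe'."""
    # Lower-case each suffix and strip any whitespace padding around it.
    suffixes = [s.lower().strip() for s in PurePath(filename).suffixes]
    if len(suffixes) < 2:
        return False
    return suffixes[-1] in EXECUTABLE_EXTS and suffixes[-2] in MEDIA_OR_DOCUMENT_EXTS


def suspicious_members(zip_path: str) -> list[str]:
    """List archive members with a disguised executable name (nothing is extracted)."""
    with zipfile.ZipFile(zip_path) as archive:
        return [
            name
            for name in archive.namelist()
            if is_disguised_executable(PurePath(name).name)
        ]


print(is_disguised_executable("Video Dream MachineAI.mp4.exe"))  # True
print(is_disguised_executable("VideoDreamAI.zip"))  # False
```

A check like this catches only the naming trick. It says nothing about whether a file is actually malicious, so it complements antivirus scanning rather than replacing it.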

Rogues' Gallery: Notorious Examples of Fake AI Malware

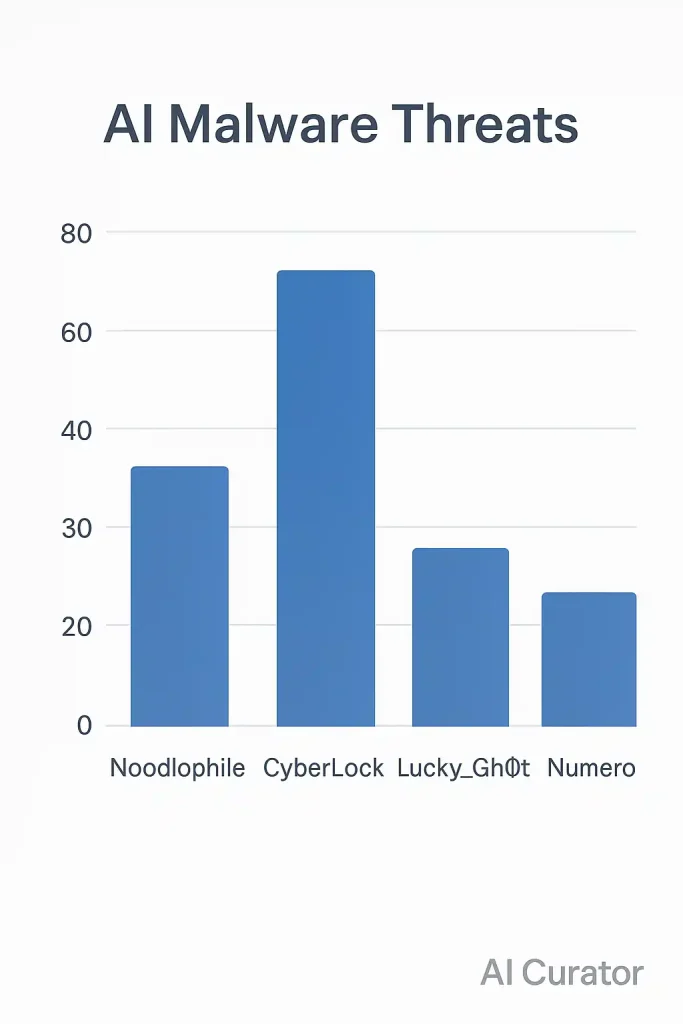

The versatility of these attacks is a key reason for their persistence. Criminals constantly rotate the AI tools they impersonate and the domains they use, making them difficult to track. However, several prominent malware strains have emerged from these campaigns.

Noodlophile Stealer

Discovered by Morphisec researchers, Noodlophile is an information-stealing malware often distributed through ads for generative AI services. After a user interacts with the fake AI platform and downloads the supposed output, the Noodlophile malware gets to work.

It pilfers browser cookies, saved passwords, and other sensitive information, sending it back to the attackers via encrypted messaging. In some cases, it also creates a backdoor for future malware installations.

CyberLock Ransomware

This ransomware is a common payload in fake AI scams. Attackers create spoofed websites of legitimate companies, such as the AI-driven monetisation platform NovaLeads. The fake site, “novaleadsai[.]com,” looks nearly identical to the real one, “novaleads.app.”
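A crude heuristic can catch lookalike domains like this one. If a hostname contains a brand's name but is not one of that brand's known official domains, it deserves suspicion. The Python sketch below assumes a hand-maintained, hypothetical allowlist (`OFFICIAL_DOMAINS`) and uses plain substring matching. It would therefore miss misspellings and character substitutions. It illustrates the idea rather than offering a production filter.

```python
# Hypothetical allowlist: brand token -> domains known to belong to that brand.
OFFICIAL_DOMAINS = {"novaleads": {"novaleads.app"}}


def normalise_host(host: str) -> str:
    """Lower-case, undo '[.]' defanging, and drop a trailing dot and leading 'www.'."""
    host = host.strip().lower().replace("[.]", ".").rstrip(".")
    return host[4:] if host.startswith("www.") else host


def is_brand_lookalike(host: str) -> bool:
    """True if the host mentions a known brand but is not an official domain
    (or a subdomain of one)."""
    host = normalise_host(host)
    official = set().union(*OFFICIAL_DOMAINS.values())
    if any(host == d or host.endswith("." + d) for d in official):
        return False
    compact = host.replace("-", "")  # also catch hyphenated variants such as 'nova-leads'
    return any(brand in compact for brand in OFFICIAL_DOMAINS)


for candidate in ["novaleadsai[.]com", "novaleads.app", "example.com"]:
    print(candidate, is_brand_lookalike(candidate))
```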

Clicking the download button installs the CyberLock ransomware. Once activated, it encrypts the victim's files and demands a hefty ransom, sometimes as high as $50,000, for their release.

Lucky_Gh0$t

Posing as the immensely popular ChatGPT, Lucky_Gh0$t capitalises on the chatbot's widespread recognition. Because the brand is so well known, people are easily persuaded to download something that claims to come from it, even from an unofficial source.

Once executed, Lucky_Gh0$t scans the infected device for files under 1.2 gigabytes, encrypts them, and demands a ransom. Larger files are simply deleted, causing irreversible data loss.

Numero

Unlike the ransomware strains, Numero is a purely destructive malware that impersonates the real video-generating app, InVideo AI. It doesn't steal data or demand money. Instead, it manipulates open windows in an infinite loop, which eventually makes the infected device completely unusable.

Numero also uses clever tricks to evade detection, often causing damage before the user realises what's happening.

The Bigger Picture: AI's Role in Cyber Warfare

The use of fake AI software is just one facet of a much larger issue. AI itself is becoming a powerful weapon in the hands of cybercriminals. They use it to automate the discovery of system vulnerabilities and craft highly convincing, personalised phishing emails that are difficult to distinguish from legitimate communications.

AI-powered deepfake technology can create realistic fake videos and voice recordings of trusted individuals, manipulating victims into transferring funds or revealing confidential information. One of the more alarming developments is AI-assisted polymorphic malware, which continuously rewrites its own code so that each copy presents a different signature to traditional antivirus software.
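A deliberately simplified model of a scanner shows why signatures struggle against code that keeps changing. The scanner below matches only exact file hashes. Real antivirus engines also use heuristics and behavioural analysis, and the byte strings are harmless placeholders rather than malware. The sketch shows only that any change to a file, even a single padding byte, produces an unrelated SHA-256 digest that a hash blocklist no longer recognises.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


# Harmless placeholder bytes standing in for a previously catalogued sample.
known_sample = b"placeholder bytes standing in for a known sample"

# Hypothetical blocklist, as a purely hash-based scanner might keep.
KNOWN_BAD_HASHES = {sha256_hex(known_sample)}


def is_flagged(data: bytes) -> bool:
    """Exact-match signature check: flags only byte-identical files."""
    return sha256_hex(data) in KNOWN_BAD_HASHES


# A "mutated" copy differing by a single appended byte.
mutated_sample = known_sample + b"\x00"

print(is_flagged(known_sample))    # True
print(is_flagged(mutated_sample))  # False
```

Behaviour-based detection, which watches what a program does rather than matching its bytes, is less affected by this kind of mutation.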

IBM researchers have even demonstrated a proof-of-concept AI-powered malware called DeepLocker, which can hide within a benign application and only activate when specific, AI-determined conditions are met, such as recognising a particular user via webcam.

Your Digital Shield: How to Stay Safe from Fake AI Scams

With cybercriminals getting smarter, user vigilance is more important than ever. In 2024 alone, scammers extorted or stole an estimated $1.03 trillion. Following these fundamental security practices can significantly reduce your risk of falling victim.

Conclusion

The rise of AI has unlocked incredible possibilities, but it has also opened a new front in the ongoing battle for cybersecurity. The use of fake AI software to distribute malware is a stark reminder that as technology moves forward, so do the methods of those who seek to exploit it.

Cybercrime remains an incredibly profitable enterprise, meaning these threats are here to stay. Ultimately, the strongest defence is a well-informed and cautious user. By embracing a healthy dose of scepticism and adhering to basic security hygiene, you can navigate the exciting world of AI safely and keep your digital life secure.