Why do most companies fail miserably when implementing artificial intelligence systems? The answer lies in critical obstacles that derail even the most ambitious AI projects. From ethical concerns to talent shortages, these barriers cost businesses millions and destroy competitive advantages.

Smart organisations recognise these pitfalls early and build strategic solutions that improve their odds of success.

Here's your complete roadmap to avoiding costly AI implementation mistakes that sink projects before they start.

The 8 AI Implementation Challenges Crushing Business Dreams

These roadblocks are costing companies millions and forcing executives to abandon AI projects faster than they can say “machine learning.”

1. Ethical Concerns and Algorithmic Bias

Algorithmic bias represents one of the most pressing ethical dilemmas in AI implementation. AI systems trained on historical data often inherit societal biases, leading to discriminatory outcomes in critical areas like hiring, lending, and criminal justice.

Real-world examples demonstrate the severity of this issue. Amazon's AI recruiting tool penalised women candidates, reportedly favouring male applicants in 60% of selections because it had been trained on biased historical hiring data.

Similarly, the Dutch childcare benefits scandal saw families wrongly accused of fraud based on algorithmic decisions that considered dual nationality and low income as risk factors.

Why This Is a Challenge

Biased AI systems expose organisations to discrimination lawsuits and regulatory penalties. Only 47% of companies currently test for bias, leaving the rest exposed to unintended discriminatory outcomes that can do lasting damage to their reputation and legal standing.
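
A basic bias test can start with comparing selection rates across groups. The sketch below is illustrative only: the `decisions` data is invented, the function names are hypothetical, and the 0.8 cut-off borrows the "four-fifths" rule of thumb from US employment guidance, which may not suit every jurisdiction or use case.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Return the share of positive outcomes per group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True when the model picked the candidate.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest

# Hypothetical screening outcomes, invented for illustration.
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumed threshold: the four-fifths rule of thumb
print(rates, round(ratio, 2), "review needed" if flagged else "ok")
```

A check like this only surfaces a disparity; deciding whether it reflects unlawful or unfair treatment still needs human and legal review.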

Company tackling this: IBM

2. Access to Quality Data

Data quality issues plague AI implementation efforts across industries. Poor data quality costs the US economy approximately $3.1 trillion annually, highlighting the massive impact of inadequate information on AI performance.

AI systems require vast amounts of high-quality, relevant data for training and decision-making. However, organisations frequently struggle with outdated, incomplete, or irrelevant datasets that lead to poor AI performance and unreliable outcomes.
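
One way to catch incomplete or outdated records before they reach a model is a simple profiling pass. This is a minimal sketch: the field names, the sample records, and the one-year staleness window (`max_age_days`) are all assumptions chosen for illustration.

```python
from datetime import date

def profile_records(records, required_fields, today, max_age_days=365):
    """Count records that are incomplete or stale.

    A record is incomplete if any required field is missing or empty,
    and stale if its `updated` date is more than `max_age_days` old.
    """
    incomplete = stale = 0
    for rec in records:
        if any(rec.get(field) in (None, "") for field in required_fields):
            incomplete += 1
        updated = rec.get("updated")
        if updated is not None and (today - updated).days > max_age_days:
            stale += 1
    total = len(records)
    return {
        "total": total,
        "incomplete": incomplete,
        "stale": stale,
        "complete_share": (total - incomplete) / total if total else 0.0,
    }

# Hypothetical customer records, invented for illustration.
records = [
    {"id": 1, "email": "a@example.com", "updated": date(2025, 11, 1)},
    {"id": 2, "email": "", "updated": date(2025, 10, 15)},
    {"id": 3, "email": "c@example.com", "updated": date(2021, 3, 9)},
    {"id": 4, "email": None, "updated": date(2020, 1, 1)},
]
report = profile_records(records, ["id", "email"], today=date(2026, 1, 1))
print(report)
```

Running a report like this on every data source gives a baseline for deciding which datasets need cleanup before training begins.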

42% of companies report inadequate proprietary data for customising AI models. This shortage forces organisations to rely on generic models that may not address their specific business needs or industry requirements.

Why This Is a Challenge

Data silos, legacy system incompatibilities, and regulatory restrictions limit access whilst quality issues demand expensive cleanup efforts. Poor data directly correlates with unreliable AI performance, making business decisions risky and implementation costly.

Company tackling this: Databricks

3. AI Explainability and Transparency

AI transparency encompasses the ability to understand how AI systems make decisions, process data, and produce specific results. Many AI models, particularly deep learning systems, operate as “black boxes” where decision-making processes remain opaque to users and stakeholders.

Explainable AI (XAI) requirements vary significantly across industries. Healthcare and insurance sectors mandate transparency for regulatory compliance, whilst other industries focus on building user trust and accountability.

The challenge extends beyond technical explainability to include data governance, algorithmic accountability, and stakeholder communication. Organisations must balance model performance with interpretability requirements.
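
The trade-off between performance and interpretability is easiest to see with an inherently interpretable model. For a linear score, each feature's contribution can be reported exactly. The sketch below assumes a hypothetical credit-style scoring model; the weights, bias, and feature names are invented and do not describe any vendor's system.

```python
def explain_linear_score(weights, bias, features):
    """Break a linear model's score into per-feature contributions.

    For score = bias + sum(weight * value), each term is that feature's
    contribution, so the explanation is exact rather than approximate.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Largest absolute effect first, so the main drivers lead the report.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked

# Hypothetical model parameters and applicant, invented for illustration.
weights = {"income_k": 0.5, "missed_payments": -20.0, "years_at_address": 2.0}
applicant = {"income_k": 60, "missed_payments": 2, "years_at_address": 5}

score, ranked = explain_linear_score(weights, bias=600.0, features=applicant)
print(f"score: {score}")
for name, contribution in ranked:
    print(f"{name}: {contribution:+.1f}")
```

Deep learning models offer no such direct breakdown, which is why they need separate, approximate explanation techniques.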

Why This Is a Challenge

Lack of transparency creates stakeholder distrust and regulatory compliance issues. Industries like healthcare require clear decision explanations, yet implementing explainable AI demands specialised expertise and additional resources, and it often comes at some cost to model performance.

Company tackling this: FICO

4. Data Privacy and Security

AI systems process vast amounts of personal and sensitive data, creating significant privacy and security vulnerabilities. Data breaches in AI systems can expose training data, model parameters, and user interactions, leading to severe consequences for individuals and organisations.

Privacy risks include data leakage, where AI models inadvertently reproduce snippets of training data containing sensitive information. Model poisoning attacks manipulate training data to compromise AI system integrity and performance.

40% of companies identify data privacy concerns as a major AI implementation barrier. These concerns stem from regulatory requirements, customer expectations, and potential security vulnerabilities in AI infrastructure.

Why This Is a Challenge

GDPR and HIPAA impose strict requirements with substantial non-compliance fines. Security vulnerabilities create multiple attack vectors whilst privacy-preserving technologies like differential privacy increase infrastructure costs and deployment complexity significantly.
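
To give a sense of what differential privacy involves, here is a minimal sketch of the Laplace mechanism applied to a count query. The dataset, the `epsilon` value, and the function names are assumptions for illustration; a production system would typically rely on a vetted library and track its privacy budget across queries.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(values, predicate, epsilon, rng):
    """Return a noisy count satisfying epsilon-differential privacy.

    Adding or removing one person changes a count by at most 1
    (sensitivity 1), so the Laplace noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for value in values if predicate(value))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical ages, invented for illustration.
ages = [23, 45, 31, 52, 38, 61, 47, 29]
rng = random.Random(0)  # seeded only to make the example repeatable
noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0, rng=rng)
print(f"noisy count of people aged 40+: {noisy:.2f}")
```

A smaller `epsilon` gives stronger privacy but noisier answers, which is one source of the extra cost and complexity mentioned above.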

Company tackling this: Microsoft

5. Talent Shortage in AI and ML Fields

The AI talent gap has reached crisis levels in 2026. Globally, 4.2 million AI positions remain unfilled whilst only 320,000 qualified developers are available. This shortage costs companies an average of $2.8 million annually in delayed AI initiatives.

87% of organisations struggle to hire AI talent, with average time-to-fill reaching 142 days. The skills gap affects multiple roles: machine learning engineers, data scientists, AI researchers, and AI ethics specialists.

Geographic concentration exacerbates the problem. 65% of qualified AI developers are concentrated in only 5 metropolitan areas, limiting access for companies in other regions.

Why This Is a Challenge

Skills become outdated within 15 months whilst big tech companies absorb 70% of top graduates. Universities produce 40% fewer AI-ready candidates than the market demands, creating intense competition and 32% salary inflation.

Company tackling this: HCLTech

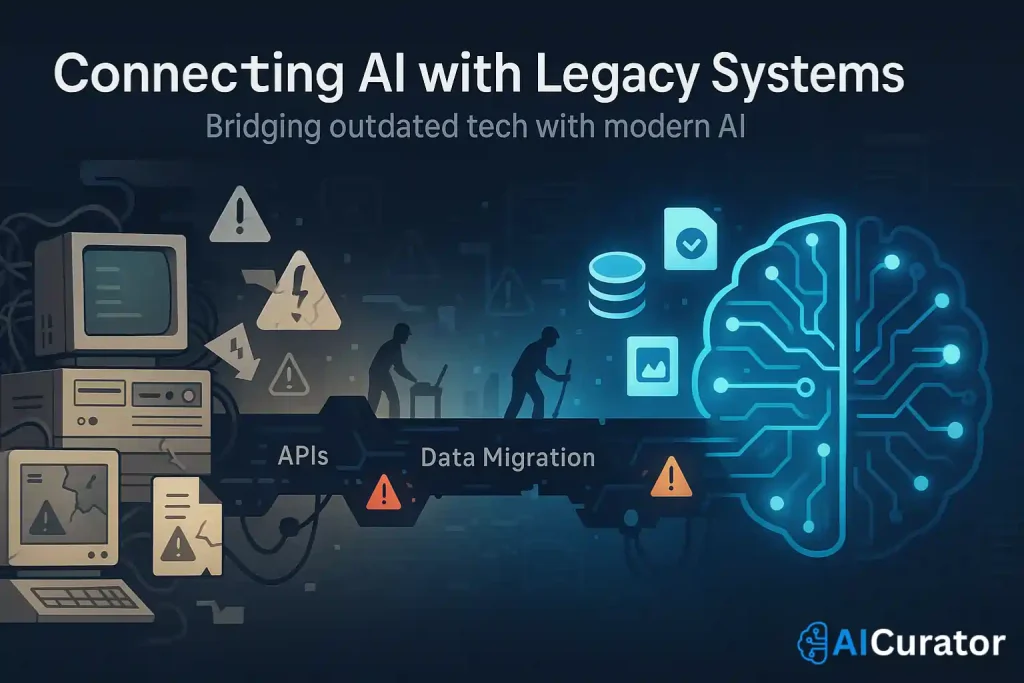

6. Integration with Existing Systems

Legacy system integration represents a major technical hurdle for AI implementation. 42% of companies face difficulties integrating AI due to technical incompatibilities between modern AI technologies and existing infrastructure.

Many established enterprises rely on traditional systems that lack compatibility with advanced AI solutions. These systems often use outdated data formats, limited APIs, and insufficient processing capabilities for AI workloads.

Data integration challenges multiply when connecting AI systems to multiple data sources. Organisations must reconcile different formats, structures, and update frequencies whilst maintaining data quality and consistency.
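
Reconciling formats usually means mapping every source onto one shared schema before the data reaches an AI system. The sketch below assumes two hypothetical sources, a CRM export and a billing system, with invented field names; it also assumes that CRM timestamps without a timezone are in UTC.

```python
from datetime import datetime, timezone

def from_crm(row):
    """Map a hypothetical CRM row (ISO dates, amounts in pounds as text)."""
    return {
        "customer_id": str(row["CustomerID"]),
        "amount_pence": round(float(row["Amount"]) * 100),
        # Assumption: naive CRM timestamps are recorded in UTC.
        "timestamp": datetime.fromisoformat(row["Date"]).replace(tzinfo=timezone.utc),
    }

def from_billing(row):
    """Map a hypothetical billing row (epoch seconds, amounts in pence)."""
    return {
        "customer_id": str(row["cust"]),
        "amount_pence": int(row["pence"]),
        "timestamp": datetime.fromtimestamp(row["ts"], tz=timezone.utc),
    }

# Sample rows invented for illustration.
crm_rows = [{"CustomerID": 17, "Amount": "19.99", "Date": "2025-03-01T09:30:00"}]
billing_rows = [{"cust": "17", "pence": 500, "ts": 1740816000}]

unified = [from_crm(row) for row in crm_rows] + [from_billing(row) for row in billing_rows]
unified.sort(key=lambda record: record["timestamp"])
for record in unified:
    print(record)
```

Keeping one small mapping function per source makes each incompatibility explicit and lets new sources be added without touching downstream code.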

Why This Is a Challenge

Legacy systems require costly infrastructure upgrades and lack computational power for AI workloads. Custom development work, system testing, and data migration extend implementation timelines whilst straining IT resources and budgets.

Company tackling this: Pragmatic Coders

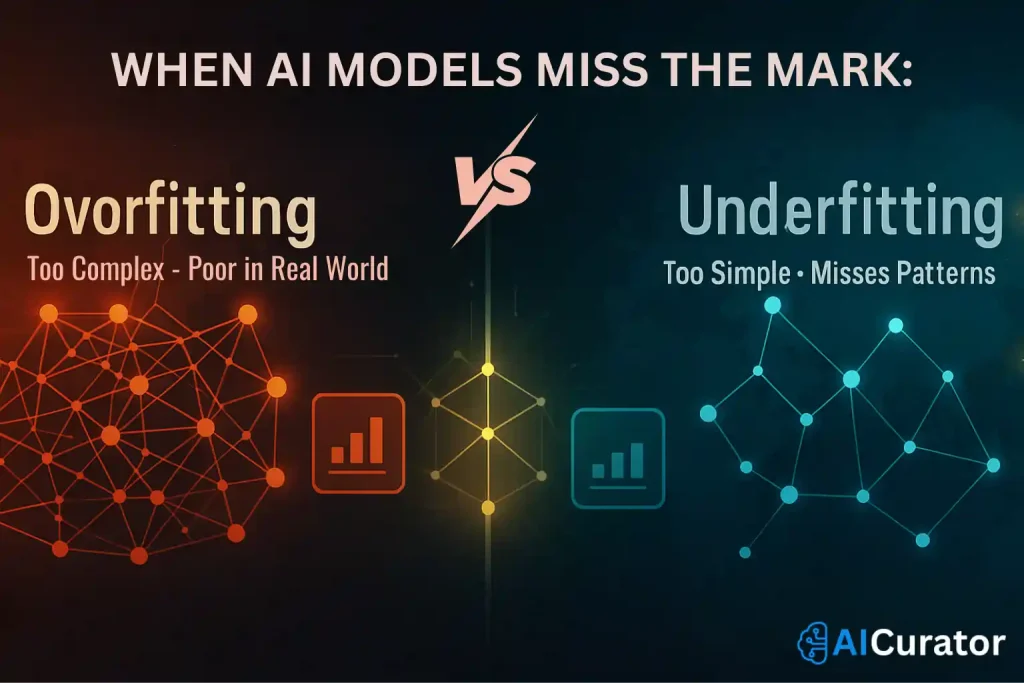

7. Overfitting and Underfitting in Models

Model performance issues significantly impact AI implementation success. Overfitting occurs when AI models become overly specialised in training data and fail to generalise to new inputs. This creates systems that perform excellently on historical data but poorly in real-world applications.

Underfitting represents the opposite problem, where models are too simplistic to capture complex patterns in data. These models struggle to make accurate predictions even on training datasets.

Both issues stem from poor data quality, inadequate model design, and insufficient validation processes. Organisations often lack the expertise to properly balance model complexity with generalisation capabilities.
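
Comparing error on training data with error on held-out validation data is a common way to tell the two problems apart. The sketch below uses three toy models on invented data; the thresholds in `diagnose` are arbitrary assumptions that would need tuning for any real project.

```python
from statistics import mean

def mse(model, xs, ys):
    """Mean squared error of `model` on the given points."""
    return mean([(model(x) - y) ** 2 for x, y in zip(xs, ys)])

def fit_mean(xs, ys):
    """Too simple: always predicts the average target."""
    average = mean(ys)
    return lambda x: average

def fit_line(xs, ys):
    """Ordinary least-squares straight line."""
    mx, my = mean(xs), mean(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return lambda x: slope * x + (my - slope * mx)

def fit_memoriser(xs, ys):
    """Too flexible: returns the target of the nearest training point."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda pair: abs(pair[0] - x))[1]

def diagnose(train_err, val_err, max_train_err=2.0, max_gap_ratio=2.0):
    """Label a model from its errors (thresholds are assumptions)."""
    if train_err > max_train_err:
        return "underfitting"
    if val_err > max_gap_ratio * max(train_err, 1e-9):
        return "overfitting"
    return "ok"

# Toy data: y = 2x plus alternating noise of +1 or -1.
train_x = [float(i) for i in range(10)]
train_y = [2 * x + (1 if i % 2 == 0 else -1) for i, x in enumerate(train_x)]
val_x = [i + 0.5 for i in range(10)]
val_y = [2 * x + (-1 if i % 2 == 0 else 1) for i, x in enumerate(val_x)]

results = {}
for name, fit in [("mean", fit_mean), ("line", fit_line), ("memoriser", fit_memoriser)]:
    model = fit(train_x, train_y)
    results[name] = diagnose(mse(model, train_x, train_y), mse(model, val_x, val_y))
print(results)
```

The memoriser scores perfectly on the training data yet does worst on validation data, which is the signature of overfitting described above.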

Why This Is a Challenge

Overfitted models fail in production environments, creating unreliable systems and poor user experiences. Detection requires specialised expertise, whilst continuous model retraining and optimisation significantly increase ongoing maintenance costs.

Company tackling this: Lyzr

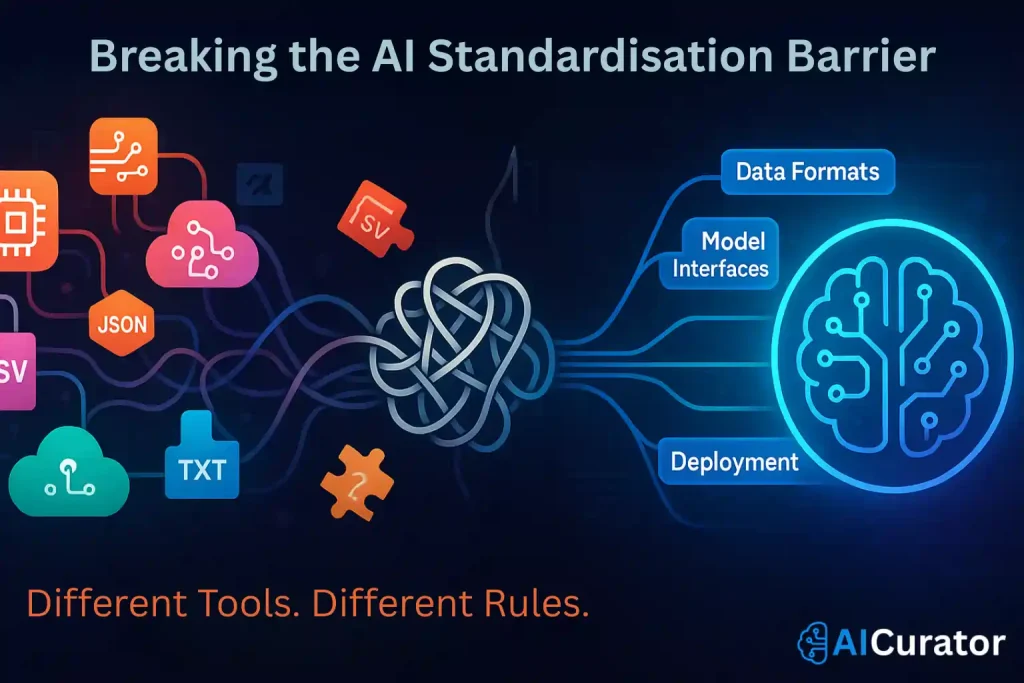

8. Lack of Standardisation Across AI Tools and Platforms

The AI industry lacks unified standards for data formats, model interfaces, and deployment processes. This fragmentation creates major obstacles when integrating AI systems, as tools differ in functionality, data requirements, and output formats.

Without clear guidelines, organisations struggle with longer development timelines and must create custom solutions to address compatibility issues. This leads to inconsistent standards for inputs and outputs across systems.

Platform diversity compounds integration challenges. Different AI frameworks use varying approaches to model training, inference, and deployment, making it difficult to combine multiple AI tools effectively.
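
A common workaround is to wrap each tool in a thin adapter that exposes one shared interface. The sketch below is hypothetical throughout: `VendorAClient` and `VendorBClient` are stand-ins invented for illustration, not real products, and the shared `predict` contract is an assumption.

```python
from typing import Protocol

class Classifier(Protocol):
    """Shared contract: return (label, confidence between 0 and 1)."""
    def predict(self, text: str) -> tuple[str, float]: ...

class VendorAClient:
    """Stand-in tool that returns a dict with a percentage score."""
    def classify(self, text):
        label = "spam" if "win" in text.lower() else "ham"
        return {"label": label, "score_pct": 90}

class VendorBClient:
    """Stand-in tool that works on batches and returns parallel lists."""
    def run(self, texts):
        return ["ham" for _ in texts], [0.75 for _ in texts]

class VendorAAdapter:
    def __init__(self, client):
        self.client = client

    def predict(self, text):
        raw = self.client.classify(text)
        return raw["label"], raw["score_pct"] / 100  # percentage to probability

class VendorBAdapter:
    def __init__(self, client):
        self.client = client

    def predict(self, text):
        labels, probabilities = self.client.run([text])  # batch of one
        return labels[0], probabilities[0]

def compare(classifiers: list[Classifier], text: str):
    """Run every tool through the shared interface for easy comparison."""
    return [classifier.predict(text) for classifier in classifiers]

adapters = [VendorAAdapter(VendorAClient()), VendorBAdapter(VendorBClient())]
results = compare(adapters, "Win a prize now")
print(results)
```

Adapters do not remove the underlying fragmentation, but they confine it to one place and make results from different tools directly comparable.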

Why This Is a Challenge

Fragmented standards increase development costs through custom interfaces whilst creating vendor lock-in risks. Teams using different AI frameworks struggle with collaboration, making model sharing and result comparison complex and inefficient.

Company tackling this: Meta

Profits in Overdrive: AI’s Massive ROI & Operational Edge

Deploying AI isn’t just about staying current; it’s about unlocking new levels of profit and efficiency.

Final Words

AI implementation challenges require strategic planning and systematic approaches to overcome. Successful organisations address these obstacles through comprehensive governance frameworks, skilled team development, and phased deployment strategies.

The key to success lies in acknowledging these challenges early and building solutions into AI strategy from the outset. Companies that proactively address ethical concerns, data quality, talent gaps, and technical integration issues achieve better outcomes and faster ROI from their AI investments.

As AI technologies continue advancing, organisations must remain adaptable and invest in continuous learning to navigate these evolving challenges successfully.