In my experience, the conversation around AI has shifted. Prompt engineering is no longer just about crafting clever questions; it has matured into a core engineering discipline essential for building reliable, scalable AI applications.

As we move through 2026, the key challenge isn't just getting a good response from an LLM, but systematizing that process. How do you manage thousands of prompts across a team, test their effectiveness, and integrate them into complex workflows?

This is where specialized tools come in. They are the bridge between a simple AI command and a production-grade application. Having explored the industry, I've identified the platforms that truly define modern prompt engineering.

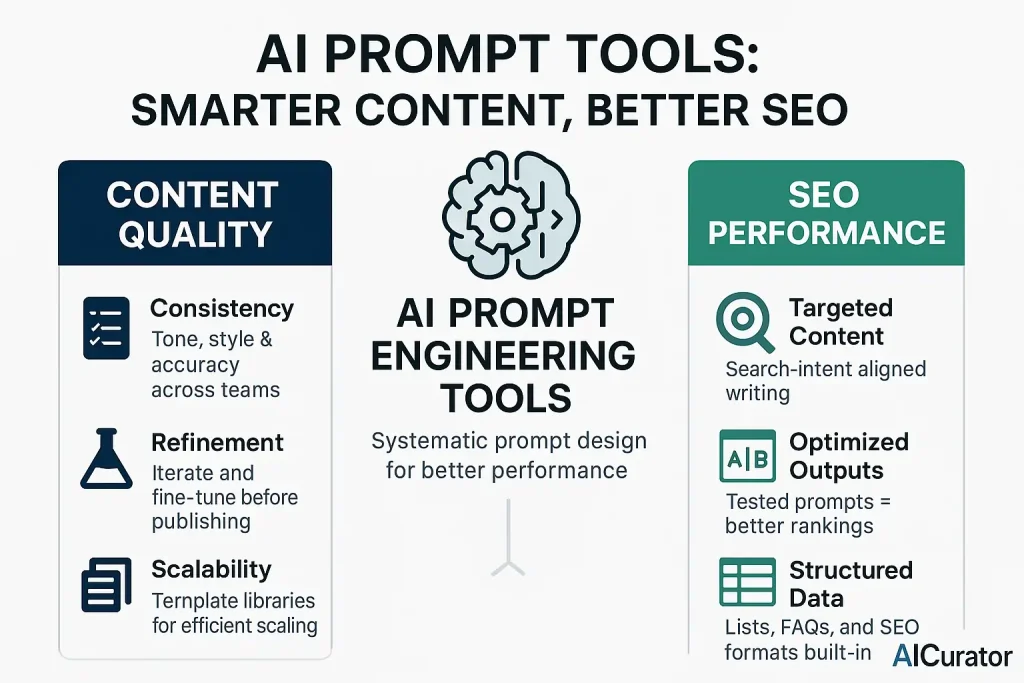

How Do AI Prompt Engineering Tools Improve Content Quality and SEO?

AI prompt engineering tools enhance content quality and search engine optimization (SEO) by providing a systematic framework for interacting with language models. They move beyond simple prompt-response cycles to a more controlled, data-driven process.

Content Quality:

SEO Performance:

Take Control of AI Outputs with These Prompt Builders

| 🔧 Best AI Prompt Engineering Tools | 💡 Primary Purpose | 👥 Best For |
|---|---|---|
| LangChain | Developer Framework | Complex Applications |
| PromptLayer | Collaborative Workbench | Team Collaboration |
| Agenta | Open-Source LLMOps | Production Apps |
| PromptBase | Prompt Marketplace | Ready-Made Solutions |
| AIPRM | ChatGPT Extension | Content Creators |
| OpenPrompt | Research Framework | Academic Research |

1. LangChain: The Developer's Framework for Building LLM Applications

LangChain is less a single tool and more a comprehensive, open-source framework designed for developers to build sophisticated applications powered by large language models (LLMs). It stands out by enabling the creation of complex, data-aware, and agentic workflows that go far beyond simple prompt-and-response interactions.

At its core, LangChain allows developers to “chain” together different components, creating intricate sequences of operations. This modular approach is its greatest strength. Developers can connect LLMs to external data sources, allow LLMs to interact with their environment, and build agents that use tools to perform tasks.
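To make "chaining" concrete, here is a minimal, library-free sketch of the idea: each component is a function, and the `|` operator feeds one component's output into the next. LangChain's real interfaces are much richer (its expression language composes components with a similar `|` operator), but `Step`, `fake_llm`, and the `SUMMARY<...>` output format below are hypothetical stand-ins, not LangChain APIs.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Step:
    """One link in a chain: wraps a function from input to output."""
    fn: Callable[[Any], Any]

    def __or__(self, other: "Step") -> "Step":
        # Compose left to right: the output of self becomes the input of other
        return Step(lambda x: other.fn(self.fn(x)))

    def __call__(self, x: Any) -> Any:
        return self.fn(x)


# Component 1: fill a prompt template from a dict of variables
prompt = Step(lambda v: f"Summarize in one sentence: {v['text']}")

# Component 2: hypothetical stand-in for an LLM call
fake_llm = Step(lambda p: f"SUMMARY<{p.split(': ', 1)[1]}>")

# Component 3: parse the raw model output into clean text
parser = Step(lambda out: out.removeprefix("SUMMARY<").removesuffix(">"))

chain = prompt | fake_llm | parser
```

Calling `chain({"text": "LangChain links components."})` runs all three steps in order and returns `"LangChain links components."`. Swapping `fake_llm` for a real model call changes one link without touching the others, which is the modularity described above.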

Key Features and Concepts:

Who Should Use LangChain?

LangChain is ideal for developers and engineers who need to build custom, complex, and powerful LLM-powered applications. Its flexibility is unmatched for tasks requiring multi-step reasoning, data retrieval, and interaction with other systems.

⚠️ However, this power comes with a steeper learning curve and requires coding knowledge, making it less suitable for beginners or non-technical users.

2. PromptLayer: The Collaborative Workbench for AI Engineering

PromptLayer is a platform designed to bring prompt engineering into a collaborative, professional workflow, often described as the “GitHub for prompts.” It serves as a central workbench for teams to manage, evaluate, and optimize their prompts, providing a layer of observability and control over LLM usage.

The platform's primary goal is to empower entire teams—including non-technical stakeholders like product managers and domain experts—to participate in the prompt iteration process. This collaborative approach can dramatically speed up development cycles and improve the quality of AI features.
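The observability side of a tool like PromptLayer comes down to recording every model call together with the prompt version that produced it. The sketch below is a hypothetical, in-memory version of that idea, not PromptLayer's SDK: `RequestLog` wraps any model function and stores the prompt name, version, input, output, and latency of each call, while `echo_model` stands in for a real LLM.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class RequestLog:
    """Hypothetical in-memory log of model calls (a stand-in for a hosted dashboard)."""
    entries: List[Dict] = field(default_factory=list)

    def wrap(self, model: Callable[[str], str], prompt_name: str, version: int) -> Callable[[str], str]:
        """Return a function that calls `model` and records what happened."""
        def logged(prompt: str) -> str:
            start = time.perf_counter()
            output = model(prompt)
            self.entries.append({
                "prompt_name": prompt_name,
                "version": version,
                "prompt": prompt,
                "output": output,
                "latency_s": time.perf_counter() - start,
            })
            return output
        return logged


def echo_model(prompt: str) -> str:
    """Hypothetical model: upper-cases the prompt."""
    return prompt.upper()


log = RequestLog()
reply = log.wrap(echo_model, prompt_name="email-reply", version=2)
result = reply("hello")
```

A hosted platform adds persistence, search, and a UI on top, but per-call records like these are what let a product manager compare version 1 and version 2 of a prompt without reading code.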

Key Features and Concepts:

Who Should Use PromptLayer?

PromptLayer is best suited for teams building and scaling AI-powered products. It is particularly valuable for companies where collaboration between engineering, product, and domain experts is crucial.

Organizations like Gorgias and ParentLab have used PromptLayer to scale their AI operations, reduce engineering overhead, and improve personalization by allowing non-technical teams to own prompt iteration.

3. Agenta: The Open-Source Platform for Robust LLM Operations

Agenta is an open-source LLMOps platform designed to give developers and product teams a comprehensive suite of tools for building reliable AI applications. It integrates prompt engineering, versioning, evaluation, and observability into a single, self-hostable platform, offering a powerful alternative to proprietary systems.

One of Agenta's core philosophies is to accelerate the development cycle by bridging the gap between developers and non-developers. It provides the tools to rapidly experiment, evaluate, and deploy LLM-powered features with confidence.
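Agenta's evaluators and UI handle this for you; the sketch below only illustrates the loop underneath: run every prompt variant over the same test set, score each output with a metric, and compare the averages. The test set, the two variants, `fake_generate`, and the `brevity_score` metric are all hypothetical.

```python
from statistics import mean
from typing import Callable, Dict, List

# Hypothetical test set of short customer chats
TEST_SET = ["order late", "refund please", "wrong size"]

# Two hypothetical prompt variants under comparison
VARIANTS = {
    "v1-formal": "Dear customer, regarding '{chat}', we sincerely apologise and will resolve it.",
    "v2-short": "Sorry about '{chat}'. We're on it.",
}


def fake_generate(prompt: str) -> str:
    """Stand-in for an LLM call: returns the filled prompt unchanged."""
    return prompt


def brevity_score(reply: str, limit: int = 10) -> float:
    """Hypothetical metric: 1.0 at or under `limit` words, shrinking as replies grow longer."""
    words = len(reply.split())
    return 1.0 if words <= limit else limit / words


def evaluate(
    variants: Dict[str, str],
    dataset: List[str],
    generate: Callable[[str], str],
    metric: Callable[[str], float],
) -> Dict[str, float]:
    """Run every variant over the same dataset and average the metric per variant."""
    return {
        name: mean(metric(generate(template.format(chat=chat))) for chat in dataset)
        for name, template in variants.items()
    }


scores = evaluate(VARIANTS, TEST_SET, fake_generate, brevity_score)
winner = max(scores, key=scores.get)
```

Because `fake_generate` echoes the prompt, this only exercises the harness; in practice `generate` would call a model and the metric might be a politeness classifier or an LLM judge. Holding the dataset fixed across variants is what makes the comparison fair.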

Key Features and Concepts:

Who Should Use Agenta?

Agenta is an excellent choice for startups and enterprises that want to build robust, production-grade LLM applications while maintaining control over their tools and infrastructure.

Its open-source nature appeals to teams that prioritize customization and want a comprehensive LLMOps solution without relying on a third-party service.

4. PromptBase: The Marketplace for Buying and Selling Prompts

PromptBase takes a unique, market-driven approach to prompt engineering. It is an online marketplace where users can buy and sell high-quality prompts for a variety of generative AI models, including DALL-E, Midjourney, and GPT models. This creates a vibrant ecosystem for both expert prompt creators and those looking for ready-made solutions.

For prompt creators, it offers a way to monetize their skills. For users, it provides instant access to a vast library of tested prompts that can save significant time and effort in achieving desired results.

Key Features and Concepts:

Who Should Use PromptBase?

PromptBase is ideal for individuals, freelancers, and small businesses who need high-quality results from generative AI without wanting to invest heavily in learning the intricacies of prompt engineering themselves.

It's also a valuable platform for expert prompt engineers looking to monetize their expertise. However, users should be aware that the quality of prompts can vary, and there is no guarantee of effectiveness for every use case.

5. AIPRM: The Ultimate Prompt Library for ChatGPT

AIPRM is a widely popular prompt management tool, best known for its Chrome extension that supercharges the ChatGPT interface. It embeds a massive, community-curated library of prompts directly into ChatGPT, allowing users to execute complex tasks with a single click.

It simplifies the prompt creation process by offering thousands of pre-built prompts categorized by profession and task, such as SEO, marketing, software development, and copywriting. This makes it incredibly accessible for users of all skill levels.

Key Features and Concepts:

Who Should Use AIPRM?

AIPRM is perfect for marketers, content creators, SEO specialists, and anyone who uses ChatGPT extensively in their daily workflow.

Its ease of use and the sheer volume of ready-made prompts make it an invaluable tool for boosting productivity and generating high-quality content without needing deep technical knowledge of prompt engineering.

6. OpenPrompt: The Researcher's Toolkit for Prompt-Based Learning

OpenPrompt is an open-source framework designed specifically to facilitate prompt-based learning for researchers and developers working with LLMs. It provides a unified and user-friendly framework for constructing, testing, and deploying prompts across a wide variety of models and natural language processing (NLP) tasks.

Unlike more application-focused tools, OpenPrompt is geared towards experimentation and simplifying the research process in the field of prompt engineering.
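Prompt-based learning typically pairs a template, which rewrites the input around a masked slot, with a verbalizer, which maps the words a model predicts for that slot back to class labels. OpenPrompt provides classes for both (such as `ManualTemplate` and `ManualVerbalizer`); the plain-Python sketch below only illustrates the mechanism, using a hypothetical template, hypothetical label words, and made-up probabilities in place of a real masked language model.

```python
from typing import Dict, List

# Hypothetical template with a [MASK] slot the language model would fill in
TEMPLATE = "{text} Overall, it was [MASK]."

# Verbalizer: maps each class label to the words that signal it
VERBALIZER: Dict[str, List[str]] = {
    "positive": ["great", "good"],
    "negative": ["terrible", "bad"],
}


def wrap(text: str) -> str:
    """Apply the template to a raw input."""
    return TEMPLATE.format(text=text)


def classify(mask_word_probs: Dict[str, float]) -> str:
    """Pick the label whose label words receive the most probability at [MASK]."""
    scores = {
        label: sum(mask_word_probs.get(w, 0.0) for w in words)
        for label, words in VERBALIZER.items()
    }
    return max(scores, key=scores.get)


# Made-up [MASK] probabilities standing in for a masked LM's predictions
probs = {"great": 0.40, "good": 0.25, "bad": 0.20, "terrible": 0.05}
```

Here `classify(probs)` returns `"positive"` (0.65 against 0.25). Summing label-word probabilities is one simple aggregation; averaging or weighting label words are common alternatives.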

Key Features and Concepts:

Who Should Use OpenPrompt?

OpenPrompt is best suited for AI researchers and NLP developers who are actively experimenting with prompt-based learning and fine-tuning models.

Its structured yet flexible environment is ideal for academic research and for developers who want to explore the fundamental mechanics of how prompts influence model behavior.

⚠️ It does, however, require a certain level of technical expertise to use effectively.

Technical Deep-Dive – Real-World Prompt Engineering Workflow

If you’re not writing code yourself, jump ahead to “Select the Best AI Prompt Engineering Tool for Your Business.”

Now that we've explored each tool's unique strengths, let's see how they work together in practice. Here's a complete workflow that demonstrates how these platforms complement each other from initial concept to production deployment:

Use-case example: building an AI assistant that turns raw customer-support chats into polite email replies.

STAGE 1 Draft ⟶ “good first prompt”

- AIPRM – Open ChatGPT, browse the “E-mail Reply” prompt template, click ➕ to load it, then tweak wording for tone and length.

- PromptBase – Search “customer support summary”; buy a £2 proven prompt or just study its structure for inspiration.

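- OpenPrompt – In your notebook:

```python
from openprompt.plms import load_plm
from openprompt.prompts import ManualTemplate

# OpenPrompt templates are tied to a pretrained LM's tokenizer; T5 is one supported backbone
plm, tokenizer, model_config, WrapperClass = load_plm("t5", "t5-base")
template = ManualTemplate(
    tokenizer=tokenizer,
    text='Rewrite the following chat as a courteous email: {"placeholder":"text_a"} Email: {"mask"}',
)
```

You now have a reproducible, code-level prompt object you can version-control.

STAGE 2 Test ⟶ run on sample data, capture outputs

- LangChain – Feed 100 anonymised chat logs through the prompt:

```python
from langchain.chains import LLMChain
from langchain_core.prompts import PromptTemplate

# LangChain needs its own PromptTemplate; the OpenPrompt object is not interchangeable
prompt = PromptTemplate.from_template("Rewrite the following chat as a courteous email:\n{chat}\n---\nEmail:")
# LLMChain is LangChain's legacy chain API (deprecated in favour of `prompt | llm`)
chain = LLMChain(prompt=prompt, llm=openai_llm)  # openai_llm: any LangChain LLM instance
responses = chain.apply(dataset)  # dataset: list of {"chat": ...} dicts; returns [{"text": email}, ...]
```

- PromptLayer – Logs every API call that goes through its SDK or LangChain integration; you get a dashboard of prompts, temperature, latency, cost, and full outputs.

- Agenta – Import the same dataset, create Experiment #1 (original prompt), add Experiment #2 (shorter tone), and hit “Evaluate”. Agenta scores each run with BLEU plus a custom “politeness” metric you supply.

STAGE 3 Refine ⟶ iterate & A/B test variants

- Examine the PromptLayer logs; notice emails longer than 250 words.

- OpenPrompt – Add a length guard:

```python
# Rebuild the template with the guard added to the instruction, before the mask slot
template = ManualTemplate(tokenizer=tokenizer, text='Rewrite the following chat as a courteous email (keep it under 180 words): {"placeholder":"text_a"} Email: {"mask"}')
```

- Agenta – Re-run the evaluation; the new variant reduces word count by 27% with politeness unchanged → mark it as the winner.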

- AIPRM – Pull a community-voted prompt that injects empathy phrases; blend the best parts into your template.

- PromptBase – Optionally list your refined prompt for peer feedback (and passive income).
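The two numbers in this stage, emails over 250 words and a 27% drop in length, come from simple arithmetic on logged outputs. The sketch below uses synthetic emails (hypothetical data whose mean length falls from 250 to 182.5 words, so (250 - 182.5) / 250 = 27%) and counts words by splitting on whitespace, which may differ from how a given tool counts them.

```python
from statistics import mean
from typing import List


def word_count(text: str) -> int:
    """Count whitespace-separated words."""
    return len(text.split())


def flag_long(emails: List[str], limit: int = 250) -> List[int]:
    """Return the indices of emails whose word count exceeds `limit`."""
    return [i for i, e in enumerate(emails) if word_count(e) > limit]


def pct_reduction(before: List[str], after: List[str]) -> float:
    """Percentage drop in mean word count from `before` to `after`."""
    b = mean(word_count(e) for e in before)
    a = mean(word_count(e) for e in after)
    return 100 * (b - a) / b


# Hypothetical outputs: 300 and 200 words before the guard, 180 and 185 after
before = ["word " * 300, "word " * 200]
after = ["word " * 180, "word " * 185]
```

With this data, `flag_long(before)` returns `[0]`, `flag_long(after)` returns `[]`, and `pct_reduction(before, after)` returns `27.0`.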

STAGE 4 Deploy ⟶ ship to production & monitor

- LangChain – Wrap the winning prompt in a FastAPI endpoint:

```python
@app.post("/email")  # assumes app = FastAPI() and the `chain` built in Stage 2
def email(chat: str) -> dict:
    return {"email": chain.run(chat=chat)}
```

- Agenta – Flip the experiment to “Production”; it now routes real traffic while keeping the ability to roll back.

- PromptLayer – Continues to log live calls; set an alert for when the average response-quality score drops below 0.8.

- OpenPrompt – Keeps the template definition inside your repo, so CI/CD ships the exact versioned template.

- PromptBase – Publish “Customer-Support-to-Email v1.0” for marketing or team sharing.
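Whatever platform raises it, an alert like "average quality score below 0.8" reduces to a rolling average over recent scores. The sketch below is a hypothetical, framework-free monitor, not PromptLayer's alerting API; how each response receives its quality score is left open, and the default 50-response window is an arbitrary assumption.

```python
from collections import deque


class QualityAlert:
    """Hypothetical rolling-window monitor for per-response quality scores."""

    def __init__(self, threshold: float = 0.8, window: int = 50):
        self.threshold = threshold
        self.scores = deque(maxlen=window)  # oldest scores fall off automatically

    def record(self, score: float) -> bool:
        """Add one score; return True if the current window average is below the threshold."""
        self.scores.append(score)
        return sum(self.scores) / len(self.scores) < self.threshold


# Tiny window so the behaviour is easy to follow
monitor = QualityAlert(threshold=0.8, window=3)
alerts = [monitor.record(s) for s in [0.9, 0.85, 0.6, 0.7]]
```

Here `alerts` is `[False, False, True, True]`. The window size trades responsiveness for stability: a small window like the demo's reacts to a single bad score, while a larger one mostly fires on sustained drops.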

Result: Each tool slots naturally into one or more steps of the draft → test → refine → deploy lifecycle, giving you a repeatable, data-driven workflow instead of guess-and-check prompting.

More from AICurator:

Select the Best AI Prompt Engineering Tool for Your Business

The key to excelling in AI is moving from simple prompt crafting to disciplined prompt engineering. The right AI prompt engineering tools turn basic interactions into powerful, reliable applications. Whether your goal is development, collaboration, or research, having a dedicated platform is now essential for consistent results and effortless scale.

The next move is yours. We encourage you to explore these options, find the one that fits your project, and start building more sophisticated AI solutions. What will you create next?