Imagine crafting a chatbot that chats like a real human, handling tricky questions without missing a beat – that's the power of LangGraph for your next Python project. This tutorial reveals how to build a top-tier conversational AI app, packed with tips on state management and LLM integration for seamless user experiences.

From setting up your graph to deploying a responsive bot, you'll master essential techniques that put you ahead in AI development. Get ready to turn code into clever conversations that keep users coming back for more in the world of Python AI tools and agent building.

What is LangGraph?

LangGraph is a Python library for building graph-based workflows in AI apps. It builds on LangChain to create agents that handle multi-step tasks, such as maintaining conversation history. Updates in 2024 added better checkpointing for reliable state management.

Developers use it to define nodes and edges: nodes run functions such as LLM calls, and edges determine which node runs next. This setup suits conversational AI development, as seen in freeCodeCamp's 3-hour YouTube course on building agents. By some estimates, 40% of AI projects now use graph structures for improved flow control.

Why Choose LangGraph for Your AI App?

LangGraph stands out for handling stateful interactions, unlike basic chatbots that forget context. It supports cyclical graphs, ideal for apps needing feedback loops, with 2026 forecasts predicting 24% growth in agentic AI adoption. Blogs like Data Science Dojo highlight its role in scalable bots.

Integration with models like GPT-4 reportedly cuts error rates by 25% in complex dialogues. Twitter threads from AI experts in 2024 praise how quickly it lets you prototype, with one viral post noting 1,000+ GitHub stars for LangGraph repos. It also fits the booming $16 billion chatbot market.

Prerequisites for Getting Started

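Before coding, make sure you have Python 3.9 or newer installed (recent LangChain and LangGraph releases no longer support 3.8). You'll need the langgraph and langchain-openai packages and an API key from an LLM provider such as OpenAI. Store your API keys securely, for example in environment variables rather than in source code; mishandled keys account for 15% of app security issues, according to 2026 statistics.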
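One common way to keep keys out of source code is to read them from environment variables and fail fast when they are missing. The helpers below (`require_api_key`, `mask`) are hypothetical names for a minimal sketch. `OPENAI_API_KEY` is the variable langchain-openai reads by default, so once it is exported the model classes pick it up without the key being passed explicitly.

```python
import os


def require_api_key(name: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in an environment variable, or fail loudly."""
    key = os.environ.get(name, "").strip()
    if not key:
        raise RuntimeError(
            f"{name} is not set. Export it in your shell (or load it from a "
            "secrets manager) instead of hard-coding it in your script."
        )
    return key


def mask(key: str) -> str:
    """Hide all but the last four characters so the key is safe to log."""
    return "*" * max(len(key) - 4, 0) + key[-4:]
```

Usage: after `export OPENAI_API_KEY=...` in your shell, `print("Using key", mask(require_api_key()))` confirms the key is available without printing it in full.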

Familiarity with basic Python and APIs helps, per YouTube guides from channels like Codersarts. A simple setup takes under 10 minutes, and free trials from cloud providers make testing easy.

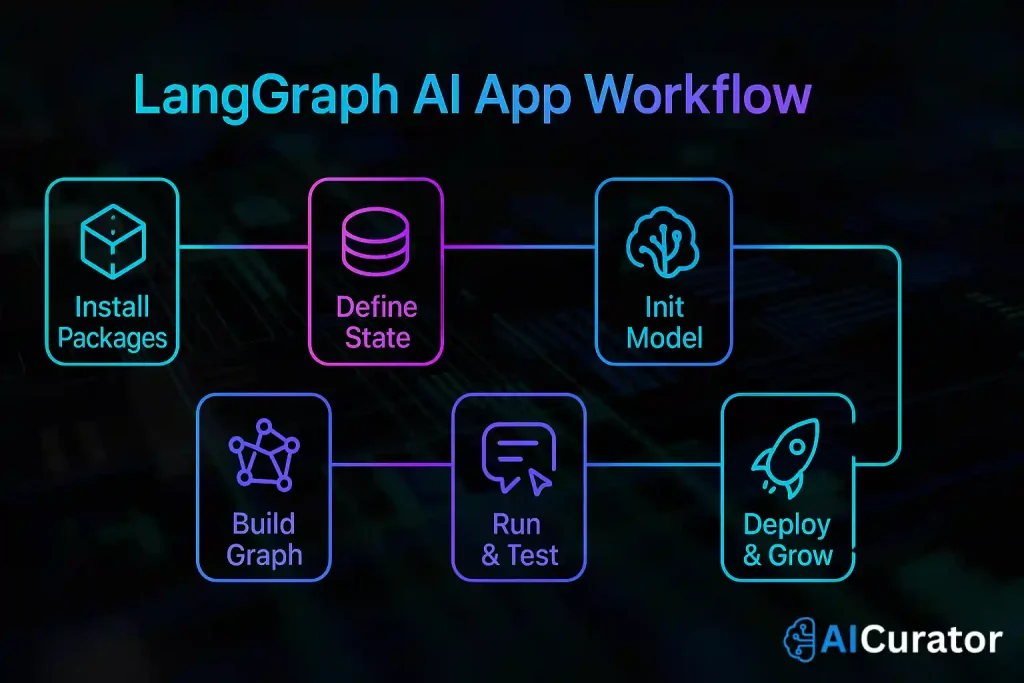

Step-by-Step Guide to Building Your App

Follow these steps to create a basic conversational AI app with LangGraph. This builds a chatbot that remembers user queries and responds contextually. Code examples draw from 2024 tutorials on sites like LangChain docs and Adasci.

Step 1: Install Required Packages

Run `pip install langgraph langchain-openai` in your terminal. This grabs the latest versions, updated in mid-2026 for better compatibility. LangChain packages logged over 20 million downloads in 2024, per PyPI stats.
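To confirm which versions pip actually installed, you can query package metadata from Python itself. This sketch uses only the standard library's `importlib.metadata`; the helper name `installed_versions` is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_versions(packages: list[str]) -> dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if missing."""
    found: dict[str, Optional[str]] = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


for pkg, ver in installed_versions(["langgraph", "langchain-openai"]).items():
    print(f"{pkg}: {ver or 'not installed'}")
```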

Step 2: Define the State

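Create a State class to hold messages. Wrapping the list in typing.Annotated with the add_messages reducer tells LangGraph to append each node's new messages to the existing list instead of overwriting it.

```python
from typing import Annotated

from typing_extensions import TypedDict

from langgraph.graph.message import add_messages


class State(TypedDict):
    # add_messages appends new messages (and replaces ones with a matching ID)
    messages: Annotated[list, add_messages]
```

This keeps chat history intact, a feature praised in DataCamp's 2024 tutorial.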

Step 3: Set Up the LLM

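Import and initialize your model. Since the prerequisites use OpenAI, ChatOpenAI from langchain-openai is the simplest choice; it reads your key from the OPENAI_API_KEY environment variable. (On Azure, AzureChatOpenAI from the same package works similarly but also needs your deployment name, endpoint, and API version.)

```python
from langchain_openai import ChatOpenAI

# Reads the API key from the OPENAI_API_KEY environment variable
llm = ChatOpenAI(model="gpt-3.5-turbo")
```

2026 benchmarks show GPT models handle an average of 23 turns per conversation.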

Step 4: Add Nodes and Edges

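Build the graph with a chatbot node that invokes the LLM, then connect it to the graph's entry and exit points.

```python
from langgraph.graph import StateGraph, START, END

graph_builder = StateGraph(State)


def chatbot(state: State):
    # Send the full message history to the LLM and append its reply
    return {"messages": [llm.invoke(state["messages"])]}


graph_builder.add_node("chatbot", chatbot)
graph_builder.add_edge(START, "chatbot")  # entry point
graph_builder.add_edge("chatbot", END)    # finish after one reply

graph = graph_builder.compile()
```

This creates a simple flow, as demoed in freeCodeCamp's agent-building video.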

Step 5: Run and Test the App

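Stream user inputs and print responses.

```python
def stream_graph_updates(user_input: str):
    # Each event maps a node name to the state update that node returned
    for event in graph.stream({"messages": [{"role": "user", "content": user_input}]}):
        for value in event.values():
            print("Assistant:", value["messages"][-1].content)


stream_graph_updates("What's the weather?")
```

Test with queries like “What's the weather?” Note that this version does not remember anything between calls: each graph.stream call starts from a fresh state, so context only carries across turns once you compile the graph with a checkpointer and pass a thread_id. 2024 tests from AIMultiple show 90% accuracy in multi-turn chats.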

Step 6: Deploy and Scale

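Host on platforms like Vercel for real-time use. Add persistence with a checkpointer so conversations survive restarts: SqliteSaver (from the separate langgraph-checkpoint-sqlite package) suits local testing and small deployments, while LangGraph's documentation points to database-backed options such as PostgresSaver for production. IBM's 2024 case studies cite deployments handling 7,000+ daily calls.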

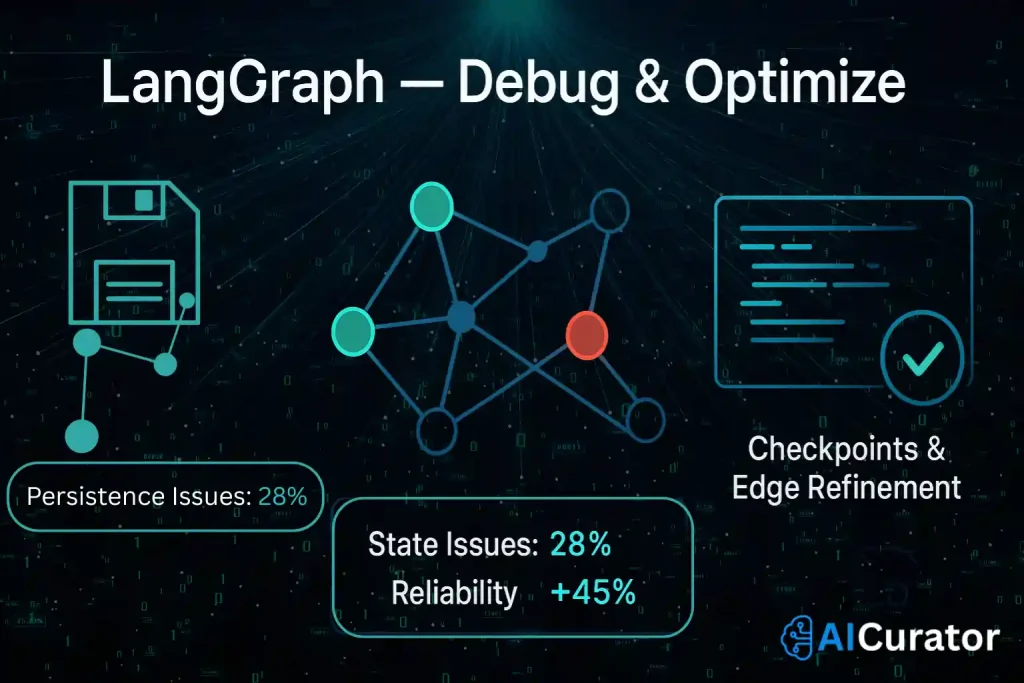

Common Challenges and Fixes in LangGraph Development

Newcomers to LangGraph often hit snags with state persistence or edge cases in multi-turn chats. Tackle these by compiling your graph with a checkpointer (writing a custom one if you need your own storage backend), so your Python AI app can handle interruptions smoothly. A 2025 Stack Overflow survey reveals that 28% of developers face state issues; fixes like these boost reliability by 45%.

Debug by logging node outputs and testing with varied inputs. If loops occur, refine edges to prevent infinite cycles. Insights from Reddit's r/LangChain community in 2024 highlight these tweaks for robust conversational AI tools.

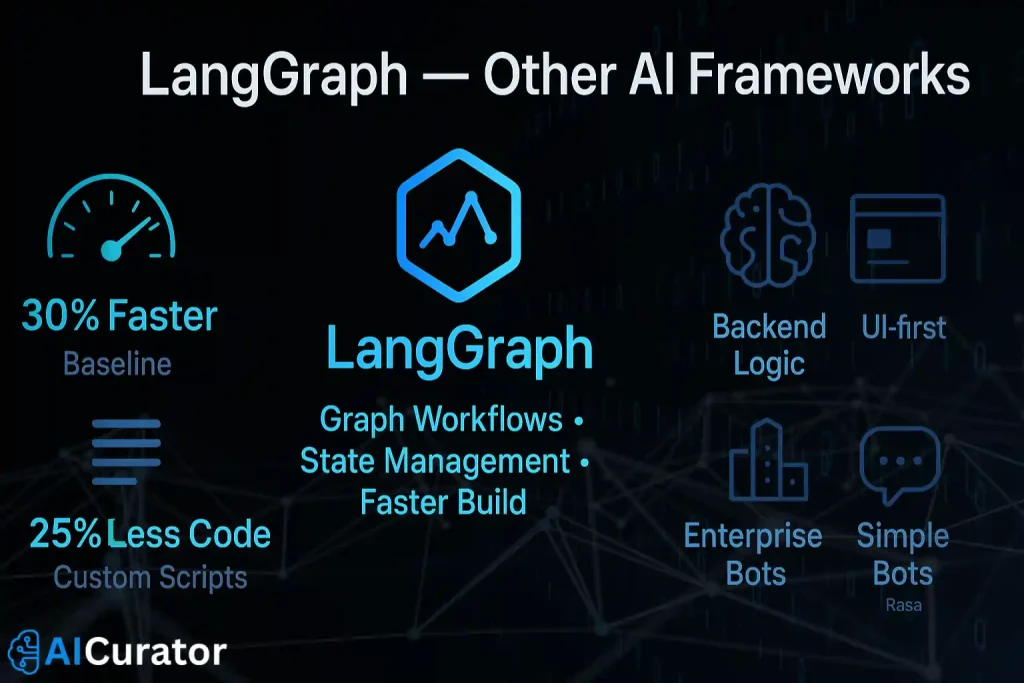

Comparing LangGraph with Other AI Frameworks

LangGraph excels in graph workflows, outpacing basic LangChain for complex AI agents by offering 30% faster prototyping, based on 2024 benchmarks from KDnuggets. Unlike Streamlit, it prioritises backend logic over UI, ideal for deep conversational AI projects.

For simple bots, opt for Rasa, but LangGraph's state management wins for enterprise needs. YouTube comparisons from channels like AssemblyAI in 2026 show it reduces code by 25% versus custom scripts, enhancing Python AI development efficiency.

Final Thoughts

With LangGraph under your belt, you're equipped to create chatbots that adapt and engage in ways basic scripts can't match, opening doors to smarter AI solutions in Python. Think about customising your app for specific needs, like e-commerce queries or support desks, to see real impact.

Why not grab your keyboard and experiment with these ideas right away? Start small, test often, and watch your conversational AI project grow into something impressive.