Artificial intelligence (AI) systems are increasingly relied upon to classify text—be it movie reviews, financial advice, or medical information. As these text classifiers become more embedded in everyday applications, from chatbots to content moderation, ensuring their accuracy and reliability has never been more crucial.

A new method developed by researchers at MIT’s Laboratory for Information and Decision Systems (LIDS) offers a fresh, effective way to test and improve the performance of AI text classifiers, cutting vulnerabilities and enhancing robustness.

Key Takeaways

Why Accurate Text Classification Matters More Than Ever

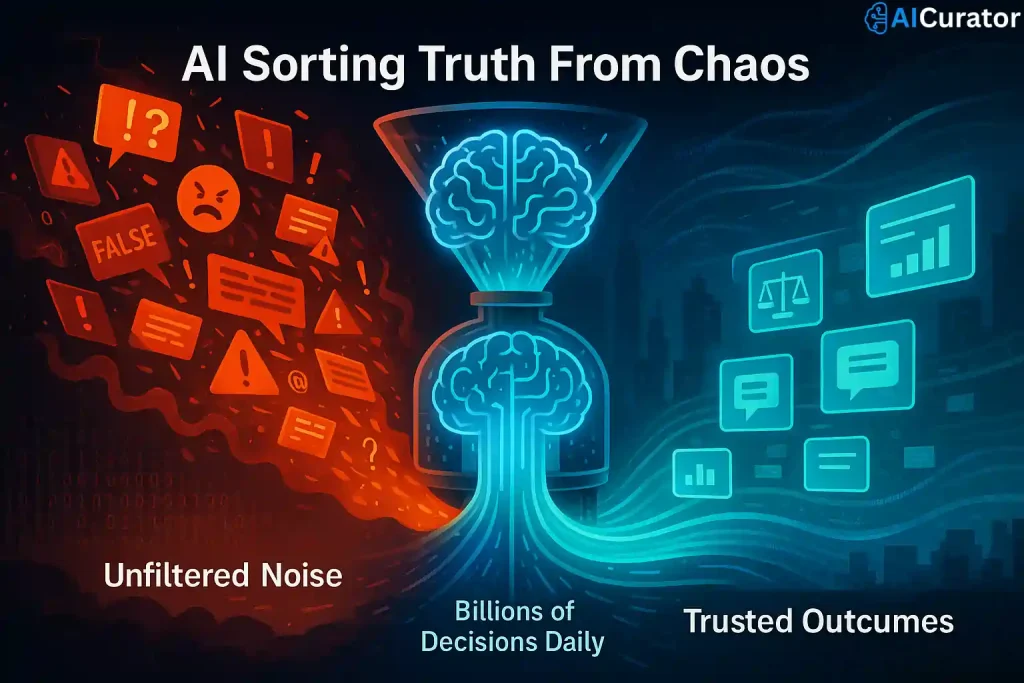

AI-driven text classification shapes how users interact with digital services—including filtering misinformation, offering financial guidance, and understanding customer intent. Mistakes in classification can cause harmful misinformation, biased decisions, or legal liabilities.

As AI systems engage billions of text interactions, even minor accuracy improvements translate into millions of avoided errors.

Challenges in Testing AI Text Classifiers: The Role of Adversarial Examples

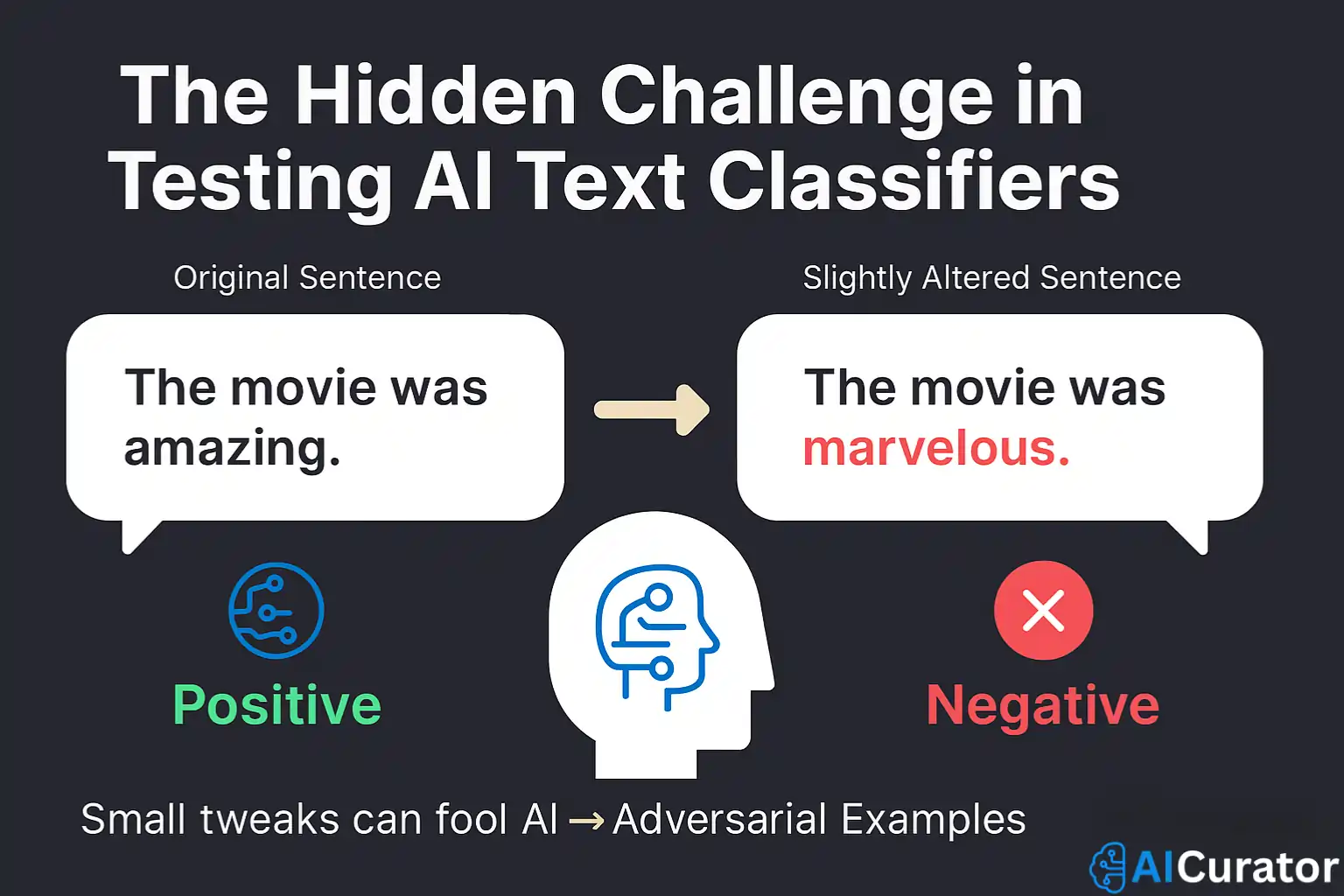

Traditional testing methods generate synthetic sentences by tweaking words slightly, often unintentionally fooling AI classifiers into mislabelling.

For example, a sentence classified as positive might be misclassified as negative after a subtle synonym swap, even though the meaning remains unchanged. Detecting these “adversarial examples” is key to stress-testing AI models.

Real-World Applications and Impact

Banks can ensure chatbots don’t inadvertently dispense financial advice they’re not authorised to give. Medical information platforms can better filter misinformation.

Content moderation can more reliably detect hate speech or false content. The method’s adaptability suits any domain relying on text classification.

MIT’s Innovative Solution: Precision Targeting of Powerful Words

The LIDS team’s method hinges on leveraging LLMs to:

Such laser-focused analysis drastically narrows the search space for adversarial sentences, reducing computational demands while increasing testing effectiveness.

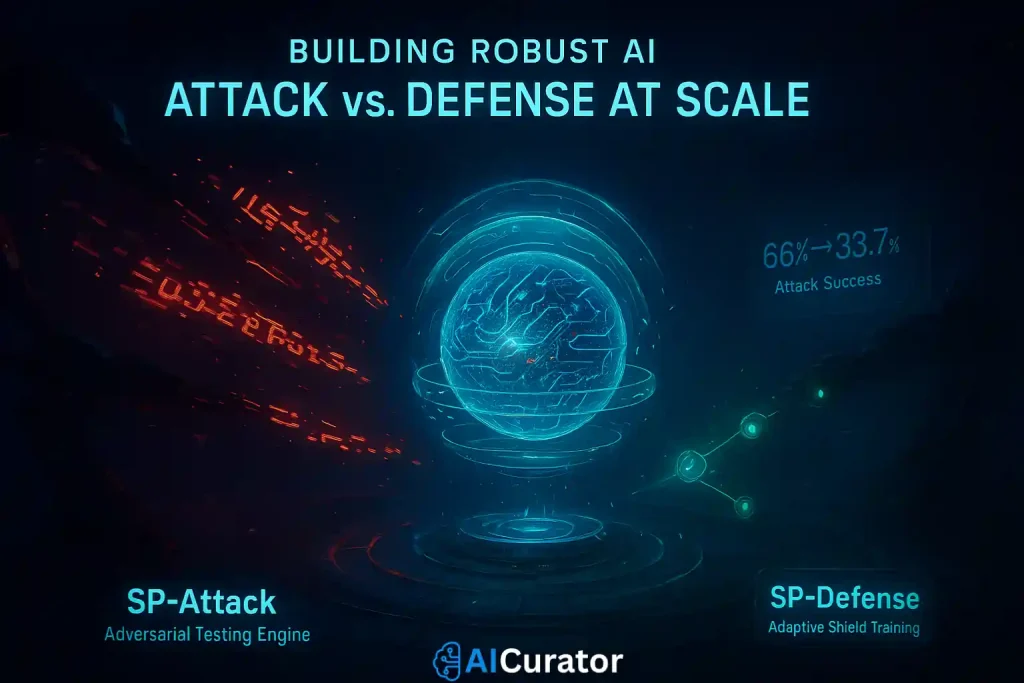

Robustness Improvement: SP-Attack and SP-Defense

The researchers offer two open-source tools:

Tests show the framework almost halved the attack success rate in some scenarios—from 66% to 33.7%. Even small gains are significant at scale, given billions of interactions.

Broader Context: Advancements in AI Text Classification

Further developments in transformer-based models like BERT and ALBERT have improved text classification accuracy beyond 95%.

Yet, vulnerability to adversarial attacks remains a critical concern, motivating ongoing research into evaluation methods that combine semantic understanding with adversarial robustness.

Recommended Readings:

Looking Ahead

This breakthrough testing method arms developers with a simple yet powerful tool: focus on a select few words to bolster AI accuracy across industries. By weaving adversarial checks into training, teams can harden chatbots, content filters and analysis engines against sneaky errors.

Ready to see your AI systems perform flawlessly? Give this open-source toolkit a spin and tighten up your text classifiers today!