Moving from prompt engineering to context engineering is one of the most direct ways to improve how AI systems perform. Rather than polishing a single query, this discipline manages everything a large language model (LLM) sees at each step.

Techniques such as retrieval-augmented generation (RAG), semantic search, and knowledge graphs do not enlarge the model's context window. They make better use of it by supplying only the relevant material, and they give AI agents memory that outlasts a single conversation.

With these skills you can build AI workflows that scale, stay efficient, and return context-aware results.

Key Takeaways

Why Context Engineering Matters Now

Several converging factors have made context engineering essential for modern AI applications.

- Rising user expectations: users now expect AI systems to understand context as a knowledgeable human assistant would, remembering previous conversations and finding relevant information without explicit instruction.

- Increased application complexity: modern AI agents handle multi-step workflows, make complex decisions, and interact with multiple systems, all of which require sophisticated context management.

- Higher stakes for accuracy: AI now handles critical business functions such as customer service, financial analysis, and healthcare support, where the cost of failure is far higher.

Prompt Engineering vs Context Engineering

| Element | Prompt Engineering | Context Engineering |
|---|---|---|
| Goal | Craft one clear instruction | Assemble the right information, tools and memories for every step |
| Scope | Single interaction | Whole system: system messages, RAG, tool specs, user history |
| Memory | Stateless | Short-term chat + long-term preferences + external stores |
| Typical Use | Quick content generation | Agents, chatbots, code companions, enterprise search |
| KPI | Output quality of that turn | Accuracy, latency, cost per call, user satisfaction |

The Four Pillars of Context Engineering

1. Writing Context: Capturing Information

Context writing involves helping AI agents capture and store relevant information for future use. This process is similar to a human taking notes whilst solving a problem, ensuring critical details aren't lost between interactions.

Implementation examples include:

- Scratchpads: real-time recording of user preferences and conversation details

- Memory Systems: persistent storage of important facts and patterns

- Session Logging: comprehensive tracking of interaction history
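The three mechanisms above can be sketched with plain in-memory containers. This is a minimal illustration, not a reference design: the class names `Scratchpad`, `MemoryStore` and `SessionLog` are hypothetical, and a real system would back the long-term store with a database.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Scratchpad:
    """Hypothetical short-lived notes for the current task."""
    notes: list[str] = field(default_factory=list)

    def write(self, note: str) -> None:
        self.notes.append(note)


@dataclass
class MemoryStore:
    """Hypothetical long-lived facts that persist across sessions."""
    facts: dict[str, str] = field(default_factory=dict)

    def remember(self, key: str, value: str) -> None:
        self.facts[key] = value


@dataclass
class SessionLog:
    """Hypothetical append-only record of every turn in a session."""
    events: list[tuple[float, str, str]] = field(default_factory=list)

    def record(self, role: str, text: str) -> None:
        self.events.append((time.time(), role, text))


pad, memory, log = Scratchpad(), MemoryStore(), SessionLog()

# One user turn touches all three stores.
log.record("user", "Please use metric units from now on.")
pad.write("user asked for metric units")
memory.remember("units", "metric")
```

The split mirrors lifetime: the scratchpad can be discarded when the task ends, while the memory store is what a later session would read back.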

2. Selecting Context: Intelligent Filtering

The ability to retrieve the right information at the right time is crucial for effective context engineering. Systems must intelligently filter vast amounts of available data to present only the most relevant context.

Key strategies include:

- Relevance Scoring: algorithms that rank information based on current task requirements

- Temporal Filtering: prioritising recent information whilst maintaining access to historical context

- User-Specific Filtering: customising context based on individual user profiles and preferences
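A small sketch shows how the three strategies can combine into one ranking. Everything here is an assumption made for illustration: relevance is approximated by word overlap (a production system would more likely use embeddings), recency uses an exponential decay with a 24-hour half-life, and the `Item` type is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Item:
    text: str
    user_id: str
    age_hours: float


def relevance(query: str, text: str) -> float:
    """Jaccard word overlap, a stand-in for a real relevance model."""
    q, t = set(query.lower().split()), set(text.lower().split())
    union = q | t
    return len(q & t) / len(union) if union else 0.0


def recency(age_hours: float, half_life_hours: float = 24.0) -> float:
    """Weight halves every `half_life_hours`; old items fade but never vanish."""
    return 0.5 ** (age_hours / half_life_hours)


def select(query: str, items: list[Item], user_id: str, k: int = 2) -> list[Item]:
    # User-specific filtering first, then rank by relevance x recency.
    candidates = [i for i in items if i.user_id == user_id]
    ranked = sorted(
        candidates,
        key=lambda i: relevance(query, i.text) * recency(i.age_hours),
        reverse=True,
    )
    return ranked[:k]


items = [
    Item("refund policy for annual plans", "u1", 2.0),
    Item("refund policy for annual plans", "u2", 1.0),
    Item("office lunch menu", "u1", 1.0),
    Item("refund request from last year", "u1", 240.0),
]
top = select("refund policy", items, user_id="u1")
```

Multiplying the two scores means an item must be both relevant and reasonably fresh to rank highly; a weighted sum would be a gentler alternative.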

3. Compressing Context: Managing Token Limits

When conversations exceed LLM context windows, effective compression becomes essential. This involves reducing information volume whilst preserving critical details.

Compression techniques include:

- Summarisation: condensing lengthy conversations into key points

- Hierarchical Storage: organising information by importance and recency

- Selective Retention: maintaining only the most relevant historical context
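One common pattern is to keep the most recent turns verbatim and fold everything older into a summary. The sketch below assumes a word count as a rough proxy for tokens, and its `summarise` function is only a placeholder that clips each turn; a real system would call an LLM or a summarisation model at that point.

```python
def summarise(turns: list[str], max_words: int = 5) -> str:
    """Placeholder summariser: keeps the first few words of each older turn."""
    clipped = [" ".join(t.split()[:max_words]) for t in turns]
    return "Summary of earlier turns: " + " | ".join(clipped)


def compress(history: list[str], keep_recent: int = 2, budget_words: int = 40) -> list[str]:
    """Return history unchanged if it fits, else summary + recent turns.

    Assumes keep_recent >= 1.
    """
    total = sum(len(t.split()) for t in history)
    if total <= budget_words:
        return history
    older, recent = history[:-keep_recent], history[-keep_recent:]
    if not older:
        return recent
    return [summarise(older)] + recent


# Four turns of 15 words each: 60 words, over the 40-word budget.
history = [f"turn {i} " + "word " * 13 for i in range(4)]
compressed = compress(history)
```

Here the two oldest turns collapse into a single summary line while the last two stay intact, which is a simple form of selective retention.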

4. Isolating Context: Modular Design

Context isolation involves breaking down information into specialised components, allowing different agents or systems to focus on specific aspects without overwhelming the main context window.

Isolation strategies include:

- Sub-Agent Architecture: specialised agents handling specific context domains

- Sandboxed Environments: isolated execution spaces for sensitive operations

- Modular Context Windows: separate context spaces for different types of information
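The sub-agent idea can be illustrated with a small router that gives each agent only its own slice of context. The `Router` class and the two agent functions are hypothetical stand-ins; in practice each agent would wrap its own LLM call.

```python
from typing import Callable

Agent = Callable[[str, list[str]], str]


def billing_agent(task: str, context: list[str]) -> str:
    return f"billing handled '{task}' using {len(context)} context items"


def docs_agent(task: str, context: list[str]) -> str:
    return f"docs handled '{task}' using {len(context)} context items"


class Router:
    """Hypothetical dispatcher: one agent and one private context per domain."""

    def __init__(self) -> None:
        self._agents: dict[str, Agent] = {}
        self._contexts: dict[str, list[str]] = {}

    def register(self, domain: str, agent: Agent, context: list[str]) -> None:
        self._agents[domain] = agent
        self._contexts[domain] = context

    def dispatch(self, domain: str, task: str) -> str:
        # Pass a copy so an agent cannot mutate another call's context.
        return self._agents[domain](task, list(self._contexts[domain]))


router = Router()
router.register("billing", billing_agent, ["invoice #1 paid", "plan: annual"])
router.register("docs", docs_agent, ["API guide v2"])

reply = router.dispatch("billing", "explain last charge")
```

The billing agent never sees the documentation context, so neither domain crowds the other's window.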

Building a Context-Smart Workflow

Step 1: Define Objectives & Metrics

Pick concrete KPIs such as F1-score, average response time and human review pass-rate.
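As a rough sketch, the three KPIs named here can be computed from simple counts. The evaluation numbers below are made up for illustration only.

```python
from statistics import mean


def f1_score(tp: int, fp: int, fn: int) -> float:
    """Harmonic mean of precision and recall; 0.0 when there are no true positives."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def pass_rate(passed: int, reviewed: int) -> float:
    """Share of human-reviewed answers that were accepted."""
    return passed / reviewed if reviewed else 0.0


# Illustrative numbers, not real measurements.
f1 = f1_score(tp=8, fp=2, fn=2)
avg_latency_s = mean([0.4, 0.6, 0.5])
review_pass = pass_rate(passed=9, reviewed=10)
```

Fixing these definitions before building anything makes later comparisons between context strategies meaningful.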

Step 2: Map Information Sources

Step 3: Design the Context Stack

Order matters. A common template, from top to bottom:

- System message (role, style, safety rules)

- Tool list with function signatures

- Retrieved facts (top-k search or SQL result)

- Short-term memory summary

- User prompt
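The ordering above can be expressed as a small assembly function. This is a sketch under assumptions: the section labels, the `build_context` name and the sample tool signature are all hypothetical, and a real deployment would map the sections onto whatever message format its model provider expects.

```python
def build_context(
    system_message: str,
    tools: list[str],
    retrieved: list[str],
    memory_summary: str,
    user_prompt: str,
) -> str:
    """Join the five layers in a fixed order, skipping any that are empty."""
    sections = [
        ("SYSTEM", system_message),
        ("TOOLS", "\n".join(tools)),
        ("RETRIEVED FACTS", "\n".join(retrieved)),
        ("MEMORY SUMMARY", memory_summary),
        ("USER", user_prompt),
    ]
    return "\n\n".join(f"## {label}\n{body}" for label, body in sections if body)


prompt = build_context(
    system_message="You are a concise support assistant.",
    tools=["get_invoice(invoice_id: str) -> dict"],  # hypothetical tool signature
    retrieved=["Refunds are available within 30 days."],
    memory_summary="User prefers metric units.",
    user_prompt="Can I get a refund for last week's order?",
)
```

Keeping the user prompt last means the most specific instruction sits closest to where the model starts generating.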

Step 4: Automate Retrieval & Pruning

Use vector search to grab top-scoring chunks, then trim with a scoring threshold to avoid noisy tokens.
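A minimal sketch of that two-stage step, using cosine similarity over toy two-dimensional vectors. The vectors, the `retrieve` name and the 0.5 threshold are illustrative assumptions; a real system would get embeddings from a model and query a vector database instead of a dictionary.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(
    query_vec: list[float],
    index: dict[str, list[float]],
    k: int = 3,
    min_score: float = 0.5,
) -> list[tuple[float, str]]:
    """Take the top-k chunks by similarity, then drop any below min_score."""
    scored = sorted(
        ((cosine(query_vec, vec), text) for text, vec in index.items()),
        reverse=True,
    )
    return [(score, text) for score, text in scored[:k] if score >= min_score]


# Toy index: chunk text -> made-up embedding.
index = {
    "refund policy": [1.0, 0.0],
    "shipping times": [0.8, 0.6],
    "lunch menu": [0.0, 1.0],
}
hits = retrieve([1.0, 0.0], index)
```

Top-k alone would have returned the unrelated "lunch menu" chunk; the threshold is what keeps it out of the prompt.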

Step 5: Monitor & Iterate

Log every LLM call together with its assembled context. A/B test different retrieval cut-offs or compression rates to find the sweet spot between cost and quality.
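One way to set this up is to assign each user to a variant deterministically, log every call as a JSON line, and aggregate per variant. The variant names, record fields and quality scores below are hypothetical; the quality value would come from whichever metric was chosen in Step 1.

```python
import hashlib
import json
import time
from collections import defaultdict

VARIANTS = ("cutoff_0.5", "cutoff_0.7")  # hypothetical retrieval cut-offs under test


def assign_variant(user_id: str) -> str:
    """Hash the user id so the same user always lands in the same variant."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return VARIANTS[digest[0] % len(VARIANTS)]


def log_call(log: list[str], user_id: str, context: str, response: str,
             tokens: int, quality: float) -> None:
    record = {
        "ts": time.time(),
        "user_id": user_id,
        "variant": assign_variant(user_id),
        "context": context,  # the full assembled context, for later debugging
        "response": response,
        "tokens": tokens,
        "quality": quality,
    }
    log.append(json.dumps(record))


def report(log: list[str]) -> dict[str, dict[str, float]]:
    buckets: dict[str, list[dict]] = defaultdict(list)
    for line in log:
        rec = json.loads(line)
        buckets[rec["variant"]].append(rec)
    return {
        variant: {
            "calls": len(recs),
            "avg_tokens": sum(r["tokens"] for r in recs) / len(recs),
            "avg_quality": sum(r["quality"] for r in recs) / len(recs),
        }
        for variant, recs in buckets.items()
    }


calls: list[str] = []
log_call(calls, "user-1", "ctx A", "answer A", tokens=900, quality=0.8)
log_call(calls, "user-2", "ctx B", "answer B", tokens=600, quality=0.7)
log_call(calls, "user-1", "ctx C", "answer C", tokens=1100, quality=0.9)
summary = report(calls)
```

Comparing `avg_tokens` against `avg_quality` per variant is the cost-versus-quality trade-off in its simplest form.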

Best Practices From the Field

Advanced Context Engineering Techniques

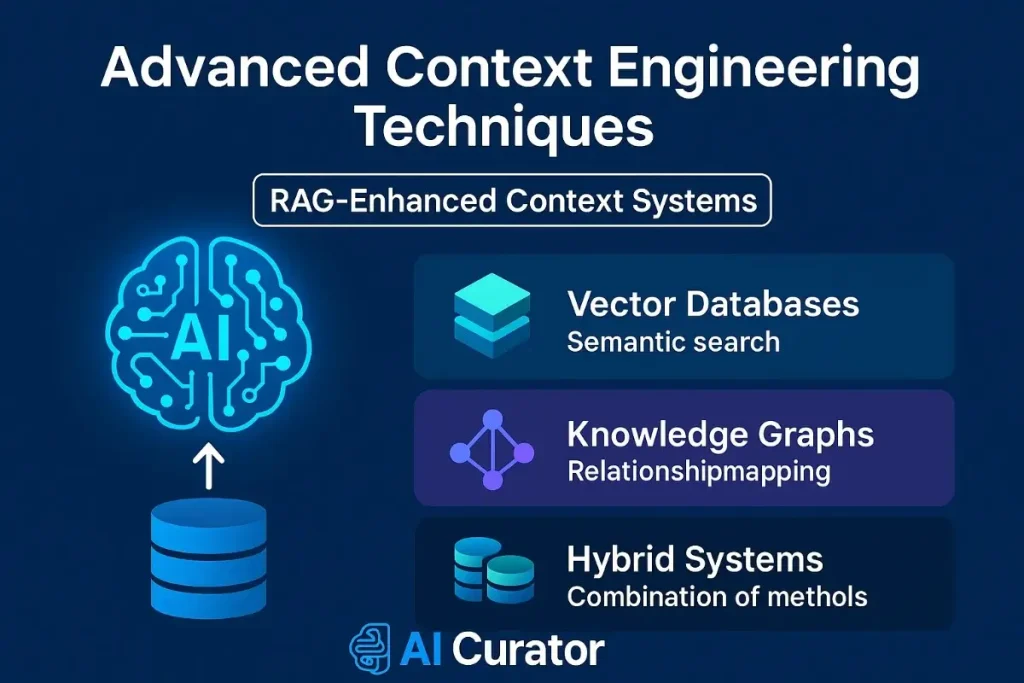

RAG-Enhanced Context Systems

Retrieval-Augmented Generation (RAG) has become a cornerstone of modern context engineering. These systems dynamically retrieve relevant information from vector databases, knowledge graphs, and external APIs to enhance AI responses.

Popular RAG building blocks include:

- Vector Databases: Pinecone, Weaviate, and Chroma for semantic search

- Knowledge Graphs: Neo4j and Amazon Neptune for relationship mapping

- Hybrid Systems: multiple retrieval methods combined for comprehensive context
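For the hybrid case, one widely used way to merge ranked lists from different retrievers is reciprocal rank fusion (RRF). The article does not name a fusion method, so RRF is a swapped-in choice here, and the document ids are placeholders rather than output from any of the products listed.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Score each document by the sum of 1 / (k + rank) over all rankings."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by id for stable output.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Placeholder results from two hypothetical retrievers.
vector_hits = ["doc_a", "doc_b", "doc_c"]
graph_hits = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([vector_hits, graph_hits])
```

RRF only needs ranks, not raw scores, which is convenient when the retrievers report similarity on incompatible scales.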

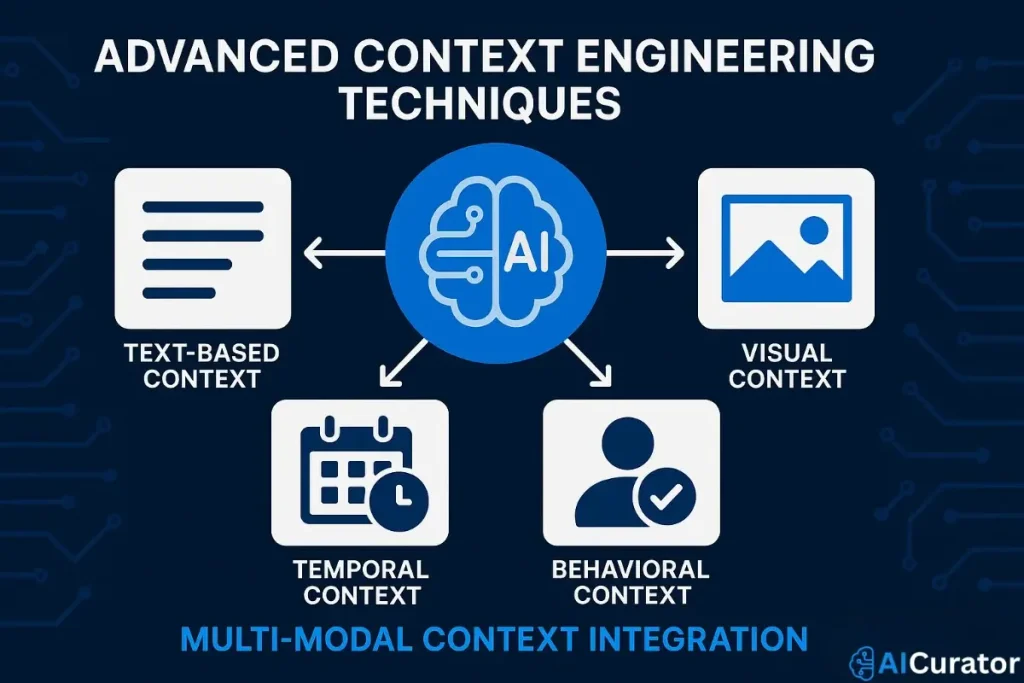

Multi-Modal Context Integration

Advanced context engineering now encompasses multiple data types simultaneously:

- Text-Based Context: documents, conversations, and structured data

- Visual Context: images, charts, and multimedia content

- Temporal Context: time-series data and scheduling information

- Behavioural Context: user interaction patterns and preferences

Real-Time Context Optimisation

Some systems measure how well the supplied context is working while a conversation is still in progress, and adjust it dynamically. This includes:

- Performance Monitoring: tracking response quality and user satisfaction

- Adaptive Filtering: adjusting context relevance based on interaction patterns

- Predictive Context: anticipating information needs before they're expressed
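Adaptive filtering can be as simple as a relevance threshold that moves with user feedback. The sketch below rests on a loud assumption: an unhelpful answer is treated as a sign of noisy context, so the threshold tightens, and a helpful one relaxes it slightly. The class name, step size and bounds are all illustrative.

```python
class AdaptiveThreshold:
    """Hypothetical feedback loop for a retrieval relevance cut-off."""

    def __init__(self, start: float = 0.5, step: float = 0.05,
                 low: float = 0.2, high: float = 0.9) -> None:
        self.value = start
        self.step = step
        self.low = low
        self.high = high

    def update(self, helpful: bool) -> float:
        if helpful:
            # Answers are landing: allow a little more context in.
            self.value = max(self.low, self.value - self.step / 2)
        else:
            # Assumed cause is noisy context: demand higher relevance.
            self.value = min(self.high, self.value + self.step)
        return self.value


threshold = AdaptiveThreshold()
for _ in range(10):
    threshold.update(helpful=False)
ceiling = threshold.value

relaxed = threshold.update(helpful=True)
```

The bounds stop the loop from drifting to a point where either nothing or everything passes the filter.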

Final Thoughts on Context Engineering

Context engineering improves LLM results by controlling what the model sees at each step. Retrieval through vector databases and semantic search supplies the relevant facts when they are needed, memory systems carry knowledge across sessions, and compression keeps the whole assembly within budget. Together these make automated AI workflows more accurate and cheaper to run.

Prompt tuning and agent tooling still matter, but they work best inside a system that manages context deliberately. Build that system to adapt to user needs, measure it, and keep iterating.